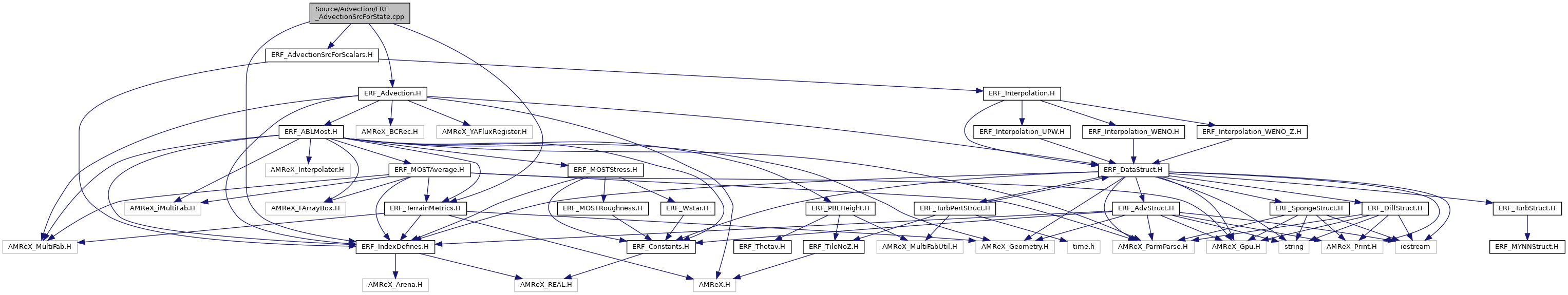

ERF_AdvectionSrcForState.cpp File Reference

#include <ERF_IndexDefines.H>
#include <ERF_TerrainMetrics.H>
#include <ERF_Advection.H>
#include <ERF_AdvectionSrcForScalars.H>

Functions

| Return type | Function |
| --- | --- |
| void | AdvectionSrcForRho (const Box &bx, const Array4< Real > &advectionSrc, const Array4< const Real > &rho_u, const Array4< const Real > &rho_v, const Array4< const Real > &Omega, const Array4< Real > &avg_xmom, const Array4< Real > &avg_ymom, const Array4< Real > &avg_zmom, const Array4< const Real > &ax_arr, const Array4< const Real > &ay_arr, const Array4< const Real > &az_arr, const Array4< const Real > &detJ, const GpuArray< Real, AMREX_SPACEDIM > &cellSizeInv, const Array4< const Real > &mf_mx, const Array4< const Real > &mf_my, const Array4< const Real > &mf_uy, const Array4< const Real > &mf_vx, const GpuArray< const Array4< Real >, AMREX_SPACEDIM > &flx_arr, const bool fixed_rho) |
| void | AdvectionSrcForScalars (const Box &bx, const int icomp, const int ncomp, const Array4< const Real > &avg_xmom, const Array4< const Real > &avg_ymom, const Array4< const Real > &avg_zmom, const Array4< const Real > &cell_prim, const Array4< Real > &advectionSrc, const Array4< const Real > &detJ, const GpuArray< Real, AMREX_SPACEDIM > &cellSizeInv, const Array4< const Real > &mf_mx, const Array4< const Real > &mf_my, const AdvType horiz_adv_type, const AdvType vert_adv_type, const Real horiz_upw_frac, const Real vert_upw_frac, const GpuArray< const Array4< Real >, AMREX_SPACEDIM > &flx_arr, const Box &domain, const BCRec *bc_ptr_h) |

Function Documentation

◆ AdvectionSrcForRho()

void AdvectionSrcForRho (

| Type | Name |
| --- | --- |
| const Box & | bx |
| const Array4< Real > & | advectionSrc |
| const Array4< const Real > & | rho_u |
| const Array4< const Real > & | rho_v |
| const Array4< const Real > & | Omega |
| const Array4< Real > & | avg_xmom |
| const Array4< Real > & | avg_ymom |
| const Array4< Real > & | avg_zmom |
| const Array4< const Real > & | ax_arr |
| const Array4< const Real > & | ay_arr |
| const Array4< const Real > & | az_arr |
| const Array4< const Real > & | detJ |
| const GpuArray< Real, AMREX_SPACEDIM > & | cellSizeInv |
| const Array4< const Real > & | mf_mx |
| const Array4< const Real > & | mf_my |
| const Array4< const Real > & | mf_uy |
| const Array4< const Real > & | mf_vx |
| const GpuArray< const Array4< Real >, AMREX_SPACEDIM > & | flx_arr |
| const bool | fixed_rho |

)

Computes the advective tendency for the update equations for rho and (rho theta). The routine has explicit expressions for all cases (terrain or not) when the horizontal and vertical spatial orders are both <= 2, and calls more specialized functions when either spatial order is greater than 2.

Parameters

- [in] bx: box over which the scalars are updated
- [out] advectionSrc: tendency for the scalar update equation
- [in] rho_u: x-component of momentum
- [in] rho_v: y-component of momentum
- [in] Omega: component of momentum normal to the z-coordinate surface
- [out] avg_xmom: x-component of time-averaged momentum defined in this routine
- [out] avg_ymom: y-component of time-averaged momentum defined in this routine
- [out] avg_zmom: z-component of time-averaged momentum defined in this routine
- [in] detJ: Jacobian of the metric transformation (= 1 if use_terrain is false)
- [in] cellSizeInv: inverse of the mesh spacing
- [in] mf_m: map factor at cell centers
- [in] mf_u: map factor at x-faces
- [in] mf_v: map factor at y-faces
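To make the meaning of the tendency for rho concrete, the following is a minimal one-dimensional sketch in plain C++, not the ERF implementation. It assumes a uniform mesh and ignores map factors, face-area arrays and the Jacobian detJ. The function name mass_tendency_1d and the use of std::vector in place of Array4 are hypothetical choices made only for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical 1D analogue of the mass tendency (illustration only).
// rho_u holds the x-momentum on faces, so it has ncell + 1 entries.
// dx_inv is the inverse mesh spacing (the role played by cellSizeInv[0]).
// Returns the cell-centered tendency -d(rho u)/dx as a flux difference.
std::vector<double> mass_tendency_1d(const std::vector<double>& rho_u,
                                     double dx_inv)
{
    assert(rho_u.size() >= 2);
    const std::size_t ncell = rho_u.size() - 1;
    std::vector<double> src(ncell);
    for (std::size_t i = 0; i < ncell; ++i) {
        // Flux through the high face minus flux through the low face.
        src[i] = -(rho_u[i + 1] - rho_u[i]) * dx_inv;
    }
    return src;
}
```

For a uniform momentum field the face fluxes cancel and the tendency is zero in every cell, which is a quick sanity check for any flux-difference form.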

Definition: ERF_AdvectionSrcForState.cpp:30

Here is the call graph for this function:

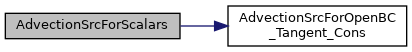

◆ AdvectionSrcForScalars()

void AdvectionSrcForScalars (

| Type | Name |
| --- | --- |
| const Box & | bx |
| const int | icomp |
| const int | ncomp |
| const Array4< const Real > & | avg_xmom |
| const Array4< const Real > & | avg_ymom |
| const Array4< const Real > & | avg_zmom |
| const Array4< const Real > & | cell_prim |
| const Array4< Real > & | advectionSrc |
| const Array4< const Real > & | detJ |
| const GpuArray< Real, AMREX_SPACEDIM > & | cellSizeInv |
| const Array4< const Real > & | mf_mx |
| const Array4< const Real > & | mf_my |
| const AdvType | horiz_adv_type |
| const AdvType | vert_adv_type |
| const Real | horiz_upw_frac |
| const Real | vert_upw_frac |
| const GpuArray< const Array4< Real >, AMREX_SPACEDIM > & | flx_arr |
| const Box & | domain |
| const BCRec * | bc_ptr_h |

)

Computes the advective tendency for the update equations for all scalars other than rho and (rho theta). The routine has explicit expressions for all cases (terrain or not) when the horizontal and vertical spatial orders are both <= 2, and calls more specialized functions when either spatial order is greater than 2.

Parameters

- [in] bx: box over which the scalars are updated if no external boundary conditions
- [in] icomp: component of first scalar to be updated
- [in] ncomp: number of components to be updated
- [in] avg_xmom: x-component of time-averaged momentum defined in this routine
- [in] avg_ymom: y-component of time-averaged momentum defined in this routine
- [in] avg_zmom: z-component of time-averaged momentum defined in this routine
- [in] cell_prim: primitive form of scalar variables, here only potential temperature theta
- [out] advectionSrc: tendency for the scalar update equation
- [in] detJ: Jacobian of the metric transformation (= 1 if use_terrain is false)
- [in] cellSizeInv: inverse of the mesh spacing
- [in] mf_m: map factor at cell centers
- [in] horiz_adv_type: advection scheme to be used in horiz. directions for dry scalars
- [in] vert_adv_type: advection scheme to be used in vert. directions for dry scalars
- [in] horiz_upw_frac: upwinding fraction to be used in horiz. directions for dry scalars (for Blended schemes only)
- [in] vert_upw_frac: upwinding fraction to be used in vert. directions for dry scalars (for Blended schemes only)
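As an illustration of the second-order case mentioned above, here is a minimal one-dimensional sketch in plain C++, not the ERF implementation. It assumes a uniform mesh, a centered (two-point average) interpolation of the primitive scalar to faces, and no map factors or Jacobian. The name scalar_tendency_centered2_1d, the ghost-cell layout and the use of std::vector in place of Array4 are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical 1D analogue of a second-order centered scalar tendency.
// mom_face: momentum on faces, ncell + 1 entries (the role of avg_xmom).
// q_cell:   primitive scalar in cells with one ghost cell on each side,
//           ncell + 2 entries (the role of cell_prim); face f lies
//           between q_cell[f] and q_cell[f + 1].
// dx_inv:   inverse mesh spacing.
std::vector<double> scalar_tendency_centered2_1d(
    const std::vector<double>& mom_face,
    const std::vector<double>& q_cell,
    double dx_inv)
{
    assert(mom_face.size() >= 2);
    assert(q_cell.size() == mom_face.size() + 1);
    const std::size_t nface = mom_face.size();

    // Face flux = momentum times the average of the two adjacent cells.
    std::vector<double> flux(nface);
    for (std::size_t f = 0; f < nface; ++f) {
        flux[f] = mom_face[f] * 0.5 * (q_cell[f] + q_cell[f + 1]);
    }

    // Tendency = minus the flux difference across each cell.
    std::vector<double> src(nface - 1);
    for (std::size_t c = 0; c + 1 < nface; ++c) {
        src[c] = -(flux[c + 1] - flux[c]) * dx_inv;
    }
    return src;
}
```

Higher-order and upwind-biased schemes differ only in how the face value of the scalar is interpolated; the flux-difference step keeps the same form in this sketch.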

Definition: ERF_AdvectionSrcForState.cpp:120

AdvType values: Weno_7, Upwind_3rd, Upwind_3rd_SL, Weno_7Z, Centered_4th, Upwind_5th, Centered_6th, Weno_3Z, Weno_3, Centered_2nd, Weno_5Z, Weno_5, Weno_3MZQ

Here is the call graph for this function: