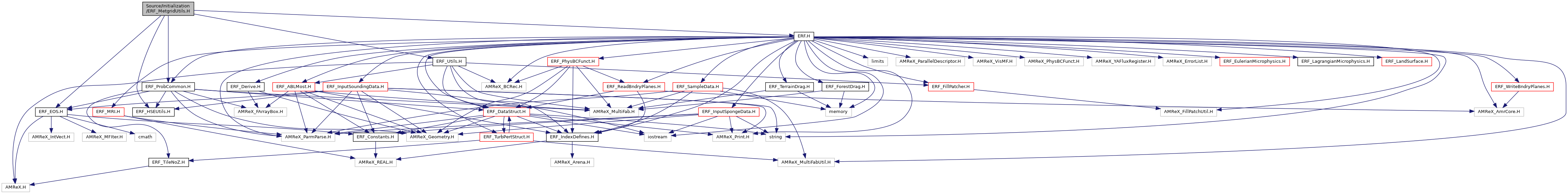

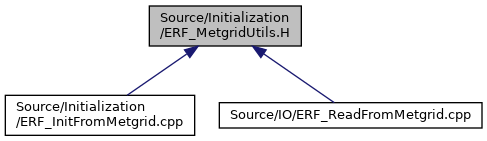

ERF_MetgridUtils.H File Reference

`#include <ERF.H>`

`#include <ERF_EOS.H>`

`#include <ERF_Utils.H>`

`#include <ERF_ProbCommon.H>`

`#include <ERF_HSEUtils.H>`

Include dependency graph for ERF_MetgridUtils.H:

This graph shows which files directly or indirectly include this file:


Functions

| Return type | Function |
|---|---|
| void | `read_from_metgrid (int lev, const amrex::Box &domain, const std::string &fname, std::string &NC_dateTime, amrex::Real &NC_epochTime, int &flag_psfc, int &flag_msf, int &flag_sst, int &flag_tsk, int &flag_lmask, int &NC_nx, int &NC_ny, amrex::Real &NC_dx, amrex::Real &NC_dy, amrex::FArrayBox &NC_xvel_fab, amrex::FArrayBox &NC_yvel_fab, amrex::FArrayBox &NC_temp_fab, amrex::FArrayBox &NC_rhum_fab, amrex::FArrayBox &NC_pres_fab, amrex::FArrayBox &NC_ght_fab, amrex::FArrayBox &NC_hgt_fab, amrex::FArrayBox &NC_psfc_fab, amrex::FArrayBox &NC_msfu_fab, amrex::FArrayBox &NC_msfv_fab, amrex::FArrayBox &NC_msfm_fab, amrex::FArrayBox &NC_sst_fab, amrex::FArrayBox &NC_tsk_fab, amrex::FArrayBox &NC_LAT_fab, amrex::FArrayBox &NC_LON_fab, amrex::IArrayBox &NC_lmask_iab, amrex::Geometry &geom)` |
| void | `init_terrain_from_metgrid (amrex::FArrayBox &z_phys_nd_fab, const amrex::Vector< amrex::FArrayBox > &NC_hgt_fab)` |
| void | `init_state_from_metgrid (const bool use_moisture, const bool interp_theta, const bool metgrid_debug_quiescent, const bool metgrid_debug_isothermal, const bool metgrid_debug_dry, const bool metgrid_basic_linear, const bool metgrid_use_below_sfc, const bool metgrid_use_sfc, const bool metgrid_retain_sfc, const amrex::Real metgrid_proximity, const int metgrid_order, const int metgrid_metgrid_force_sfc_k, const amrex::Real l_rdOcp, amrex::Box &tbxc, amrex::Box &tbxu, amrex::Box &tbxv, amrex::FArrayBox &state_fab, amrex::FArrayBox &x_vel_fab, amrex::FArrayBox &y_vel_fab, amrex::FArrayBox &z_vel_fab, amrex::FArrayBox &z_phys_cc_fab, const amrex::Vector< amrex::FArrayBox > &NC_hgt_fab, const amrex::Vector< amrex::FArrayBox > &NC_ght_fab, const amrex::Vector< amrex::FArrayBox > &NC_xvel_fab, const amrex::Vector< amrex::FArrayBox > &NC_yvel_fab, const amrex::Vector< amrex::FArrayBox > &NC_temp_fab, const amrex::Vector< amrex::FArrayBox > &NC_rhum_fab, const amrex::Vector< amrex::FArrayBox > &NC_pres_fab, amrex::FArrayBox &p_interp_fab, amrex::FArrayBox &t_interp_fab, amrex::FArrayBox &theta_fab, amrex::FArrayBox &mxrat_fab, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &fabs_for_bcs_xlo, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &fabs_for_bcs_xhi, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &fabs_for_bcs_ylo, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &fabs_for_bcs_yhi, const amrex::Array4< const int > &mask_c_arr, const amrex::Array4< const int > &mask_u_arr, const amrex::Array4< const int > &mask_v_arr)` |
| void | `init_msfs_from_metgrid (const bool metgrid_debug_msf, amrex::FArrayBox &msfu_fab, amrex::FArrayBox &msfv_fab, amrex::FArrayBox &msfm_fab, const int &flag_msf, const amrex::Vector< amrex::FArrayBox > &NC_MSFU_fab, const amrex::Vector< amrex::FArrayBox > &NC_MSFV_fab, const amrex::Vector< amrex::FArrayBox > &NC_MSFM_fab)` |
| void | `init_base_state_from_metgrid (const bool use_moisture, const bool metgrid_debug_psfc, const amrex::Real l_rdOcp, const amrex::Box &valid_bx, const amrex::Vector< int > &flag_psfc, amrex::FArrayBox &state, amrex::FArrayBox &r_hse_fab, amrex::FArrayBox &p_hse_fab, amrex::FArrayBox &pi_hse_fab, amrex::FArrayBox &th_hse_fab, amrex::FArrayBox &qv_hse_fab, amrex::FArrayBox &z_phys_cc_fab, const amrex::Vector< amrex::FArrayBox > &NC_psfc_fab)` |
| AMREX_FORCE_INLINE AMREX_GPU_DEVICE void | `lagrange_interp (const int &order, amrex::Real *x, amrex::Real *y, amrex::Real &new_x, amrex::Real &new_y)` |
| AMREX_FORCE_INLINE AMREX_GPU_DEVICE void | `lagrange_setup (char var_type, const bool &exp_interp, const int &orig_n, const int &new_n, const int &order, const int &i, const int &j, amrex::Real *orig_x_z, amrex::Real *orig_x_p, amrex::Real *orig_y, amrex::Real *new_x_z, amrex::Real *new_x_p, amrex::Real *new_y)` |
| AMREX_FORCE_INLINE AMREX_GPU_DEVICE void | `calc_p_isothermal (const amrex::Real &z, amrex::Real &p)` |
| AMREX_FORCE_INLINE AMREX_GPU_DEVICE void | `interpolate_column_metgrid (const bool &metgrid_use_below_sfc, const bool &metgrid_use_sfc, const bool &exp_interp, const bool &metgrid_retain_sfc, const amrex::Real &metgrid_proximity, const int &metgrid_order, const int &metgrid_force_sfc_k, const int &i, const int &j, const int &kmax, const int &src_comp, const int &itime, char var_type, char stag, const amrex::Array4< amrex::Real const > &orig_z_full, const amrex::Array4< amrex::Real const > &orig_data, const amrex::Array4< amrex::Real const > &new_z_full, const amrex::Array4< amrex::Real > &new_data_full, const bool &update_bc_data, const amrex::Array4< amrex::Real > &bc_data_xlo, const amrex::Array4< amrex::Real > &bc_data_xhi, const amrex::Array4< amrex::Real > &bc_data_ylo, const amrex::Array4< amrex::Real > &bc_data_yhi, const amrex::Box &bx_xlo, const amrex::Box &bx_xhi, const amrex::Box &bx_ylo, const amrex::Box &bx_yhi, const amrex::Array4< const int > &mask)` |
| AMREX_FORCE_INLINE AMREX_GPU_DEVICE amrex::Real | `interpolate_column_metgrid_linear (const int &i, const int &j, const int &k, char stag, int src_comp, const amrex::Array4< amrex::Real const > &orig_z, const amrex::Array4< amrex::Real const > &orig_data, const amrex::Array4< amrex::Real const > &new_z)` |
| AMREX_FORCE_INLINE AMREX_GPU_DEVICE void | `rh_to_mxrat (int i, int j, int k, const amrex::Array4< amrex::Real const > &rhum, const amrex::Array4< amrex::Real const > &temp, const amrex::Array4< amrex::Real const > &pres, const amrex::Array4< amrex::Real > &mxrat)` |

Function Documentation

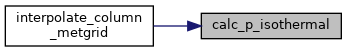

◆ calc_p_isothermal()

`AMREX_FORCE_INLINE AMREX_GPU_DEVICE void calc_p_isothermal(const amrex::Real &z, amrex::Real &p)`
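This page gives only the signature of calc_p_isothermal(). Its name and the metgrid_debug_isothermal flag suggest that it returns the pressure p at height z for an isothermal reference atmosphere. The following is a minimal sketch under that assumption. The reference pressure kP0 and temperature kT0 are hypothetical values, not constants taken from ERF, and calc_p_isothermal_sketch is an illustrative name.

```cpp
#include <cassert>
#include <cmath>

// Assumed reference values; the constants inside ERF's calc_p_isothermal()
// are not shown on this page.
constexpr double kP0 = 1.0e5;  // pressure at z = 0 [Pa] (assumed)
constexpr double kT0 = 288.0;  // constant temperature [K] (assumed)
constexpr double kRd = 287.0;  // dry-air gas constant [J/(kg K)]
constexpr double kG  = 9.81;   // gravitational acceleration [m/s^2]

// Hydrostatic balance with the ideal-gas law at constant T0:
//   dp/dz = -g p / (R_d T0)   =>   p(z) = p0 * exp(-z / H),  H = R_d T0 / g.
void calc_p_isothermal_sketch(const double& z, double& p)
{
    assert(std::isfinite(z));
    const double scale_height = kRd * kT0 / kG;  // about 8.4 km for these values
    p = kP0 * std::exp(-z / scale_height);
}
```

In this model, pressure falls by a factor of e for every scale height of ascent, whatever the assumed surface values.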

◆ init_base_state_from_metgrid()

`void init_base_state_from_metgrid(const bool use_moisture, const bool metgrid_debug_psfc, const amrex::Real l_rdOcp, const amrex::Box &valid_bx, const amrex::Vector< int > &flag_psfc, amrex::FArrayBox &state, amrex::FArrayBox &r_hse_fab, amrex::FArrayBox &p_hse_fab, amrex::FArrayBox &pi_hse_fab, amrex::FArrayBox &th_hse_fab, amrex::FArrayBox &qv_hse_fab, amrex::FArrayBox &z_phys_cc_fab, const amrex::Vector< amrex::FArrayBox > &NC_psfc_fab)`
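init_base_state_from_metgrid() fills the hydrostatic base-state arrays (r_hse_fab, p_hse_fab, pi_hse_fab, th_hse_fab, qv_hse_fab) starting from the metgrid surface pressure NC_psfc_fab. It also takes l_rdOcp, presumably R_d/c_p. One standard way to build such a column is to integrate the hydrostatic equation in Exner form upward from the surface. The sketch below does this for a dry column. The helper name hydrostatic_pressure, the trapezoidal layer average, and the constant values are assumptions, and the moisture path (use_moisture) is ignored.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Assumed constants; ERF passes R_d/c_p in as l_rdOcp.
constexpr double kG   = 9.81;    // gravitational acceleration [m/s^2]
constexpr double kCp  = 1004.5;  // specific heat of dry air at constant p [J/(kg K)]
constexpr double kP00 = 1.0e5;   // reference pressure of the Exner function [Pa]

// Hypothetical helper: integrate d(pi)/dz = -g / (c_p * theta) upward from the
// surface pressure psfc at height z_sfc and return pressure at each level.
// z_cc (cell-centre heights) and theta are ordered bottom to top.
std::vector<double> hydrostatic_pressure(double psfc, double z_sfc,
                                         const std::vector<double>& z_cc,
                                         const std::vector<double>& theta,
                                         double rdOcp)
{
    assert(!z_cc.empty() && z_cc.size() == theta.size());
    std::vector<double> p(z_cc.size());
    double pi_prev = std::pow(psfc / kP00, rdOcp);  // Exner function at the surface
    double z_prev  = z_sfc;
    double th_prev = theta[0];  // assume the lowest theta also holds at the surface
    for (std::size_t k = 0; k < z_cc.size(); ++k) {
        // Trapezoidal mean of 1/theta over the layer [z_prev, z_cc[k]].
        const double inv_th = 0.5 * (1.0 / th_prev + 1.0 / theta[k]);
        const double pi_k   = pi_prev - kG / kCp * inv_th * (z_cc[k] - z_prev);
        p[k] = kP00 * std::pow(pi_k, 1.0 / rdOcp);  // invert pi = (p/p00)^(R_d/c_p)
        pi_prev = pi_k;
        z_prev  = z_cc[k];
        th_prev = theta[k];
    }
    return p;
}
```

With a constant theta of 300 K and psfc = 1e5 Pa, the level at 1 km comes out near 890 hPa.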

◆ init_msfs_from_metgrid()

`void init_msfs_from_metgrid(const bool metgrid_debug_msf, amrex::FArrayBox &msfu_fab, amrex::FArrayBox &msfv_fab, amrex::FArrayBox &msfm_fab, const int &flag_msf, const amrex::Vector< amrex::FArrayBox > &NC_MSFU_fab, const amrex::Vector< amrex::FArrayBox > &NC_MSFV_fab, const amrex::Vector< amrex::FArrayBox > &NC_MSFM_fab)`

◆ init_state_from_metgrid()

`void init_state_from_metgrid(const bool use_moisture, const bool interp_theta, const bool metgrid_debug_quiescent, const bool metgrid_debug_isothermal, const bool metgrid_debug_dry, const bool metgrid_basic_linear, const bool metgrid_use_below_sfc, const bool metgrid_use_sfc, const bool metgrid_retain_sfc, const amrex::Real metgrid_proximity, const int metgrid_order, const int metgrid_metgrid_force_sfc_k, const amrex::Real l_rdOcp, amrex::Box &tbxc, amrex::Box &tbxu, amrex::Box &tbxv, amrex::FArrayBox &state_fab, amrex::FArrayBox &x_vel_fab, amrex::FArrayBox &y_vel_fab, amrex::FArrayBox &z_vel_fab, amrex::FArrayBox &z_phys_cc_fab, const amrex::Vector< amrex::FArrayBox > &NC_hgt_fab, const amrex::Vector< amrex::FArrayBox > &NC_ght_fab, const amrex::Vector< amrex::FArrayBox > &NC_xvel_fab, const amrex::Vector< amrex::FArrayBox > &NC_yvel_fab, const amrex::Vector< amrex::FArrayBox > &NC_temp_fab, const amrex::Vector< amrex::FArrayBox > &NC_rhum_fab, const amrex::Vector< amrex::FArrayBox > &NC_pres_fab, amrex::FArrayBox &p_interp_fab, amrex::FArrayBox &t_interp_fab, amrex::FArrayBox &theta_fab, amrex::FArrayBox &mxrat_fab, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &fabs_for_bcs_xlo, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &fabs_for_bcs_xhi, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &fabs_for_bcs_ylo, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &fabs_for_bcs_yhi, const amrex::Array4< const int > &mask_c_arr, const amrex::Array4< const int > &mask_u_arr, const amrex::Array4< const int > &mask_v_arr)`
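init_state_from_metgrid() takes an interp_theta flag and outputs both theta_fab and t_interp_fab, which suggests it converts between temperature and potential temperature. The conversion itself is the standard Poisson relation. This sketch assumes that l_rdOcp carries R_d/c_p and uses a reference pressure of 1e5 Pa. theta_from_T is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>

// Poisson relation: theta = T * (p0 / p)^(R_d / c_p).
// rdOcp plays the role of l_rdOcp; p0 = 1e5 Pa is assumed.
double theta_from_T(double T, double p, double rdOcp, double p0 = 1.0e5)
{
    assert(T > 0.0 && p > 0.0);
    return T * std::pow(p0 / p, rdOcp);
}
```

For example, air at 280 K and 800 hPa has a potential temperature of roughly 298 K.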

◆ init_terrain_from_metgrid()

`void init_terrain_from_metgrid(amrex::FArrayBox &z_phys_nd_fab, const amrex::Vector< amrex::FArrayBox > &NC_hgt_fab)`
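init_terrain_from_metgrid() takes the metgrid terrain height NC_hgt_fab and fills the nodal array z_phys_nd_fab. Metgrid terrain heights are typically given at mass (cell-centre) points, so some cell-to-node averaging is needed. The sketch below shows one plausible scheme on a plain 2-D array. It is not necessarily the averaging ERF applies, and cell_to_node is a hypothetical helper.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: average a cell-centred nx-by-ny field (i fastest) onto
// the (nx+1)-by-(ny+1) grid nodes. Each node takes the mean of the four cells
// around it; indices are clamped at the domain edge, so boundary nodes reuse
// the nearest interior cells.
std::vector<double> cell_to_node(const std::vector<double>& hgt, int nx, int ny)
{
    assert(nx > 0 && ny > 0 &&
           hgt.size() == static_cast<std::size_t>(nx) * static_cast<std::size_t>(ny));
    auto cell = [&](int i, int j) {
        i = std::clamp(i, 0, nx - 1);
        j = std::clamp(j, 0, ny - 1);
        return hgt[static_cast<std::size_t>(j) * nx + i];
    };
    std::vector<double> nd(static_cast<std::size_t>(nx + 1) * (ny + 1));
    for (int j = 0; j <= ny; ++j) {
        for (int i = 0; i <= nx; ++i) {
            nd[static_cast<std::size_t>(j) * (nx + 1) + i] =
                0.25 * (cell(i - 1, j - 1) + cell(i, j - 1) + cell(i - 1, j) + cell(i, j));
        }
    }
    return nd;
}
```

Clamping keeps the stencil in bounds without separate edge cases. Corner nodes simply copy their single adjacent cell.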

◆ interpolate_column_metgrid()

`AMREX_FORCE_INLINE AMREX_GPU_DEVICE void interpolate_column_metgrid(const bool &metgrid_use_below_sfc, const bool &metgrid_use_sfc, const bool &exp_interp, const bool &metgrid_retain_sfc, const amrex::Real &metgrid_proximity, const int &metgrid_order, const int &metgrid_force_sfc_k, const int &i, const int &j, const int &kmax, const int &src_comp, const int &itime, char var_type, char stag, const amrex::Array4< amrex::Real const > &orig_z_full, const amrex::Array4< amrex::Real const > &orig_data, const amrex::Array4< amrex::Real const > &new_z_full, const amrex::Array4< amrex::Real > &new_data_full, const bool &update_bc_data, const amrex::Array4< amrex::Real > &bc_data_xlo, const amrex::Array4< amrex::Real > &bc_data_xhi, const amrex::Array4< amrex::Real > &bc_data_ylo, const amrex::Array4< amrex::Real > &bc_data_yhi, const amrex::Box &bx_xlo, const amrex::Box &bx_xhi, const amrex::Box &bx_ylo, const amrex::Box &bx_yhi, const amrex::Array4< const int > &mask)`

Excerpts from the function body (source lines 508 to 765):

`new_z_p[k] = 0.25*(new_z_full(i,j,k)+new_z_full(i,j+1,k)+new_z_full(i,j,k+1)+new_z_full(i,j+1,k+1));`

`new_z_p[k] = 0.25*(new_z_full(i,j,k)+new_z_full(i+1,j,k)+new_z_full(i,j,k+1)+new_z_full(i+1,j,k+1));`

`new_z_p[k] = 0.125*(new_z_full(i,j,k )+new_z_full(i,j+1,k )+new_z_full(i+1,j,k )+new_z_full(i+1,j+1,k )+`

`if (flip_data_required) amrex::Abort("metgrid initialization flip_data_required. Not yet implemented.");`

`if (mask(i,j,k) && update_bc_data && bx_xlo.contains(i,j,k)) bc_data_xlo(i,j,k,0) = new_data[k];`

`if (mask(i,j,k) && update_bc_data && bx_xhi.contains(i,j,k)) bc_data_xhi(i,j,k,0) = new_data[k];`

`if (mask(i,j,k) && update_bc_data && bx_ylo.contains(i,j,k)) bc_data_ylo(i,j,k,0) = new_data[k];`

`if (mask(i,j,k) && update_bc_data && bx_yhi.contains(i,j,k)) bc_data_yhi(i,j,k,0) = new_data[k];`
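The first three excerpted source lines above average the nodal heights new_z_full onto the location of the variable being interpolated. The first averages 4 nodes over j and k, the second 4 nodes over i and k, and the truncated third line, whose 0.125 factor implies 8 nodes, averages all corners of a cell. The sketch below reproduces that averaging as a standalone template. The branch conditions are not shown, so the stagger codes 'X' and 'Y' and the name staggered_height are assumptions.

```cpp
#include <cassert>

// Height of a staggered variable from nodal heights z(i,j,k), following the
// averaging pattern in the excerpts. The stagger codes 'X' (x-face) and 'Y'
// (y-face) are assumptions; any other code is treated as a cell centre.
template <class NodalZ>
double staggered_height(const NodalZ& z, char stag, int i, int j, int k)
{
    assert(k >= 0);  // vertical node index starts at the surface
    switch (stag) {
    case 'X':  // x-face: mean of the 4 nodes spanning j..j+1 and k..k+1
        return 0.25 * (z(i, j, k) + z(i, j + 1, k) + z(i, j, k + 1) + z(i, j + 1, k + 1));
    case 'Y':  // y-face: mean of the 4 nodes spanning i..i+1 and k..k+1
        return 0.25 * (z(i, j, k) + z(i + 1, j, k) + z(i, j, k + 1) + z(i + 1, j, k + 1));
    default:   // cell centre: mean of the 8 corner nodes
        return 0.125 * (z(i, j, k) + z(i, j + 1, k) + z(i + 1, j, k) + z(i + 1, j + 1, k) +
                        z(i, j, k + 1) + z(i, j + 1, k + 1) + z(i + 1, j, k + 1) +
                        z(i + 1, j + 1, k + 1));
    }
}
```

Any callable taking (i, j, k) works as NodalZ, for example a lambda wrapping an array. In ERF that role is played by the Array4 new_z_full.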

References calc_p_isothermal() (defined at ERF_MetgridUtils.H:450) and lagrange_setup() (defined at ERF_MetgridUtils.H:146).

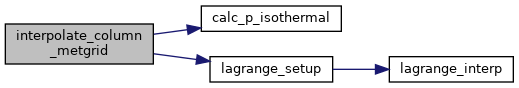

Here is the call graph for this function:

◆ interpolate_column_metgrid_linear()

`AMREX_FORCE_INLINE AMREX_GPU_DEVICE amrex::Real interpolate_column_metgrid_linear(const int &i, const int &j, const int &k, char stag, int src_comp, const amrex::Array4< amrex::Real const > &orig_z, const amrex::Array4< amrex::Real const > &orig_data, const amrex::Array4< amrex::Real const > &new_z)`
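interpolate_column_metgrid_linear() returns a single value at (i, j, k) from a source column, presumably by linear interpolation in height between bracketing levels. Below is a self-contained sketch of that operation on std::vector columns. The ascending-height ordering, the extrapolation beyond the column ends, and the name interp_linear_column are assumptions; the ERF routine may clip instead.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Linearly interpolate a column (orig_z ascending, orig_data) to height new_z.
// Outside [orig_z.front(), orig_z.back()] the nearest pair of levels is used,
// which extrapolates linearly (an assumption made for this sketch).
double interp_linear_column(const std::vector<double>& orig_z,
                            const std::vector<double>& orig_data, double new_z)
{
    assert(orig_z.size() == orig_data.size() && orig_z.size() >= 2);
    std::size_t kr = 1;  // upper bracketing level
    while (kr + 1 < orig_z.size() && orig_z[kr] < new_z) ++kr;
    const std::size_t kl = kr - 1;  // lower bracketing level
    const double w = (new_z - orig_z[kl]) / (orig_z[kr] - orig_z[kl]);
    return (1.0 - w) * orig_data[kl] + w * orig_data[kr];
}
```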


◆ lagrange_interp()

`AMREX_FORCE_INLINE AMREX_GPU_DEVICE void lagrange_interp(const int &order, amrex::Real *x, amrex::Real *y, amrex::Real &new_x, amrex::Real &new_y)`
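lagrange_interp() evaluates an interpolating polynomial through the points in x and y at new_x and writes the result to new_y. Assuming that order is the polynomial degree, so that order+1 points are used, the textbook Lagrange form looks like this. lagrange_eval is a hypothetical name, and it returns the value instead of writing through a reference.

```cpp
#include <cassert>

// Lagrange form of the interpolating polynomial through (x[0], y[0]) ...
// (x[order], y[order]), evaluated at new_x. The x values must be distinct.
double lagrange_eval(int order, const double* x, const double* y, double new_x)
{
    assert(order >= 1);
    double new_y = 0.0;
    for (int i = 0; i <= order; ++i) {
        // Basis polynomial L_i(new_x) = prod_{j != i} (new_x - x_j) / (x_i - x_j).
        double basis = 1.0;
        for (int j = 0; j <= order; ++j) {
            if (j != i) basis *= (new_x - x[j]) / (x[i] - x[j]);
        }
        new_y += basis * y[i];
    }
    return new_y;
}
```

With order = 1 this reduces to ordinary linear interpolation between the two points.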

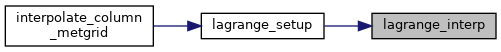

◆ lagrange_setup()

`AMREX_FORCE_INLINE AMREX_GPU_DEVICE void lagrange_setup(char var_type, const bool &exp_interp, const int &orig_n, const int &new_n, const int &order, const int &i, const int &j, amrex::Real *orig_x_z, amrex::Real *orig_x_p, amrex::Real *orig_y, amrex::Real *new_x_z, amrex::Real *new_x_p, amrex::Real *new_y)`

Excerpts from the function body (debug output, source lines 215 to 413):

`if (debug) amrex::Print() << " (1a) order=" << order << " new_x_z=" << new_x_z[new_k] << " new_x_p=" << new_x_p[new_k] << " kl=" << kl << " kr=" << kr << " ksta=" << ksta << " kend=" << kend << std::endl;`

`if (debug) amrex::Print() << " (1b) order=" << order << " new_x=" << new_x_z[new_k] << " new_x_p=" << new_x_p[new_k] << " kl=" << kl << " kr=" << kr << " ksta=" << ksta << " kend=" << kend << std::endl;`

`if (debug) amrex::Print() << " (2a) order=" << order << " new_x_z=" << new_x_z[new_k] << " new_x_p=" << new_x_p[new_k] << " kl=" << kl << " kr=" << kr << " ksta=" << ksta << " kend=" << kend << std::endl;`

`if (debug) amrex::Print() << "new_k=" << new_k << " (2b) order=" << order << " new_x_z=" << new_x_z[new_k] << " new_x_p=" << new_x_p[new_k] << " kl=" << kl << " kr=" << kr << " ksta=" << ksta << " kend=" << kend << std::endl;`

`if (debug) amrex::Print() << " (3) order=" << order << " new_x_z=" << new_x_z[new_k] << " new_x_p=" << new_x_p[new_k] << " kl=" << kl << " kr=" << kr << " ksta=" << ksta << " kend=" << kend << std::endl;`

`if (debug) amrex::Print() << " (4) order=" << order << " new_x_z=" << new_x_z[new_k] << " new_x_p=" << new_x_p[new_k] << " kl=" << kl << " kr=" << kr << " ksta=" << ksta << " kend=" << kend << std::endl;`

`if (debug) amrex::Print() << " (5) order=" << order << " new_x=" << new_x_z[new_k] << " new_x_p=" << new_x_p[new_k] << " kl=" << kl << " kr=" << kr << " ksta=" << ksta << " kend=" << kend << std::endl;`

References lagrange_interp() (defined at ERF_MetgridUtils.H:116).

Referenced by interpolate_column_metgrid().
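lagrange_setup() chooses, for each target level, which source levels feed lagrange_interp(). Its debug output prints the bracketing indices kl and kr and the stencil bounds ksta and kend. The sketch below illustrates that idea for a single value. It brackets new_x, centres an (order+1)-point stencil on the bracket, and shifts the stencil inward near the column ends. The selection rule and the name interp_column_lagrange are assumptions, and the exp_interp and pressure-coordinate (orig_x_p, new_x_p) paths are not modelled. A compact Lagrange evaluator is included so the block compiles on its own.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Lagrange form of the interpolating polynomial through (x[0], y[0]) ...
// (x[order], y[order]), evaluated at new_x.
double lagrange_eval(int order, const double* x, const double* y, double new_x)
{
    double new_y = 0.0;
    for (int i = 0; i <= order; ++i) {
        double basis = 1.0;
        for (int j = 0; j <= order; ++j)
            if (j != i) basis *= (new_x - x[j]) / (x[i] - x[j]);
        new_y += basis * y[i];
    }
    return new_y;
}

// Hypothetical stencil selection for one target value: bracket new_x between
// x[kl] and x[kr] (x ascending), then take order+1 consecutive points starting
// at ksta, chosen so the bracket sits near the middle of the stencil and the
// stencil stays inside the column.
double interp_column_lagrange(const std::vector<double>& x, const std::vector<double>& y,
                              int order, double new_x)
{
    const int n = static_cast<int>(x.size());
    assert(n == static_cast<int>(y.size()) && order >= 1 && order < n);
    int kr = static_cast<int>(std::lower_bound(x.begin(), x.end(), new_x) - x.begin());
    kr = std::clamp(kr, 1, n - 1);  // first level with x[kr] >= new_x, kept in range
    const int kl = kr - 1;
    const int ksta = std::clamp(kl - (order - 1) / 2, 0, n - (order + 1));
    return lagrange_eval(order, &x[ksta], &y[ksta], new_x);
}
```

Centring the stencil on the bracket keeps the interpolation local. Clamping ksta trades that symmetry for staying inside the data at the top and bottom of the column.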

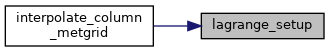

Here is the call graph for this function:

Here is the caller graph for this function:

◆ read_from_metgrid()

`void read_from_metgrid(int lev, const amrex::Box &domain, const std::string &fname, std::string &NC_dateTime, amrex::Real &NC_epochTime, int &flag_psfc, int &flag_msf, int &flag_sst, int &flag_tsk, int &flag_lmask, int &NC_nx, int &NC_ny, amrex::Real &NC_dx, amrex::Real &NC_dy, amrex::FArrayBox &NC_xvel_fab, amrex::FArrayBox &NC_yvel_fab, amrex::FArrayBox &NC_temp_fab, amrex::FArrayBox &NC_rhum_fab, amrex::FArrayBox &NC_pres_fab, amrex::FArrayBox &NC_ght_fab, amrex::FArrayBox &NC_hgt_fab, amrex::FArrayBox &NC_psfc_fab, amrex::FArrayBox &NC_msfu_fab, amrex::FArrayBox &NC_msfv_fab, amrex::FArrayBox &NC_msfm_fab, amrex::FArrayBox &NC_sst_fab, amrex::FArrayBox &NC_tsk_fab, amrex::FArrayBox &NC_LAT_fab, amrex::FArrayBox &NC_LON_fab, amrex::IArrayBox &NC_lmask_iab, amrex::Geometry &geom)`
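read_from_metgrid() returns the file's timestamp both as a string (NC_dateTime) and as a number (NC_epochTime). This sketch assumes that the string uses the WRF-style YYYY-MM-DD_HH:MM:SS format and that the number is seconds since 1970-01-01 UTC. Under those assumptions, the conversion can avoid platform-specific calls such as timegm by counting days with Howard Hinnant's days_from_civil algorithm. epoch_seconds is a hypothetical name.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Days since 1970-01-01 for a proleptic Gregorian date
// (Howard Hinnant's days_from_civil algorithm).
long long days_from_civil(long long y, unsigned m, unsigned d)
{
    if (m <= 2) --y;  // treat Jan and Feb as months 10 and 11 of the previous year
    const long long era = (y >= 0 ? y : y - 399) / 400;
    const unsigned yoe = static_cast<unsigned>(y - era * 400);            // [0, 399]
    const unsigned doy = (153 * (m > 2 ? m - 3 : m + 9) + 2) / 5 + d - 1; // [0, 365]
    const unsigned doe = yoe * 365 + yoe / 4 - yoe / 100 + doy;          // [0, 146096]
    return era * 146097 + static_cast<long long>(doe) - 719468;
}

// Hypothetical helper: parse "YYYY-MM-DD_HH:MM:SS" (assumed format of
// NC_dateTime) into seconds since 1970-01-01T00:00:00 UTC.
double epoch_seconds(const std::string& date_time)
{
    int Y = 0, M = 0, D = 0, h = 0, mi = 0, s = 0;
    const int n = std::sscanf(date_time.c_str(), "%d-%d-%d_%d:%d:%d", &Y, &M, &D, &h, &mi, &s);
    assert(n == 6 && "unexpected date-time format");
    (void)n;  // silence the unused-variable warning when NDEBUG is defined
    const long long days = days_from_civil(Y, static_cast<unsigned>(M), static_cast<unsigned>(D));
    return static_cast<double>(days) * 86400.0 + h * 3600.0 + mi * 60.0 + s;
}
```

For example, 2000-01-01_00:00:00 maps to 946684800 s.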

◆ rh_to_mxrat()

`AMREX_FORCE_INLINE AMREX_GPU_DEVICE void rh_to_mxrat(int i, int j, int k, const amrex::Array4< amrex::Real const > &rhum, const amrex::Array4< amrex::Real const > &temp, const amrex::Array4< amrex::Real const > &pres, const amrex::Array4< amrex::Real > &mxrat)`

References svp1, svp2, svp3, and svpt0 (`real(c_double), parameter` constants defined at ERF_module_model_constants.F90:78, :79, :80, and :81, respectively).
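rh_to_mxrat() converts relative humidity, temperature, and pressure into a water-vapour mixing ratio, using the saturation-vapour-pressure constants svp1, svp2, svp3, and svpt0. Below is a sketch of the usual WRF-style conversion. The constant values shown are the ones WRF uses and are assumed here to match ERF_module_model_constants.F90. The units (RH in percent, T in K, p in Pa) and the name rh_to_mxrat_sketch are also assumptions.

```cpp
#include <cassert>
#include <cmath>

// Assumed to match ERF_module_model_constants.F90 (these are the WRF values).
constexpr double svp1  = 0.6112;         // [kPa]
constexpr double svp2  = 17.67;          // [-]
constexpr double svp3  = 29.65;          // [K]
constexpr double svpt0 = 273.15;         // [K]
constexpr double ep_2  = 287.0 / 461.6;  // R_d / R_v (assumed values)

// Water-vapour mixing ratio [kg/kg] from relative humidity [%], temperature [K]
// and pressure [Pa]:
//   e_s = svp1 * exp(svp2 * (T - svpt0) / (T - svp3))   [kPa, converted to Pa]
//   q_v = (RH / 100) * ep_2 * e_s / (p - e_s)
double rh_to_mxrat_sketch(double rh, double T, double p)
{
    const double es = 1000.0 * svp1 * std::exp(svp2 * (T - svpt0) / (T - svp3));
    assert(p > es);  // saturation pressure must stay below the total pressure
    return 0.01 * rh * ep_2 * es / (p - es);
}
```

At 273.15 K and 1000 hPa with RH = 100 %, this gives about 3.8 g/kg.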