ERF_NCWpsFile.H File Reference

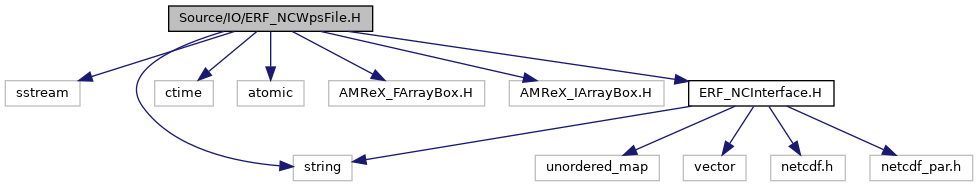

#include <sstream>

#include <string>

#include <atomic>

#include "AMReX_FArrayBox.H"

#include "AMReX_IArrayBox.H"

#include "ERF_EpochTime.H"

#include "ERF_NCInterface.H"

Include dependency graph for ERF_NCWpsFile.H:

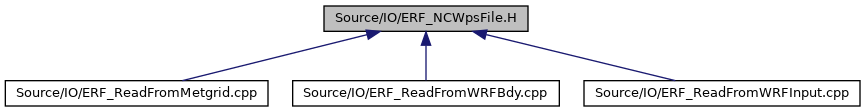


Classes

| Kind | Name |
| --- | --- |
| struct | NDArray< DataType > |

Typedefs

| Kind | Declaration |
| --- | --- |
| using | PlaneVector = amrex::Vector< amrex::FArrayBox > |

Enumerations

| Kind | Declaration |
| --- | --- |
| enum class | NC_Data_Dims_Type { Time_SL_SN_WE , Time_BT_SN_WE , Time_SN_WE , Time_BT , Time_SL , Time , Time_BdyWidth_BT_SN , Time_BdyWidth_BT_WE , Time_BdyWidth_SN , Time_BdyWidth_WE } |

Functions

| Return type | Name and parameters |
| --- | --- |
| int | BuildFABsFromWRFBdyFile (const std::string &fname, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &bdy_data_xlo, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &bdy_data_xhi, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &bdy_data_ylo, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &bdy_data_yhi) |
| template<typename DType > void | ReadTimeSliceFromNetCDFFile (const std::string &fname, const int tidx, amrex::Vector< std::string > names, amrex::Vector< NDArray< DType > > &arrays, amrex::Vector< int > &success) |
| template<typename DType > void | ReadNetCDFFile (const std::string &fname, amrex::Vector< std::string > names, amrex::Vector< NDArray< DType > > &arrays, amrex::Vector< int > &success) |
| template<class FAB , typename DType > void | fill_fab_from_arrays (int iv, const amrex::Box &domain, amrex::Vector< NDArray< float >> &nc_arrays, const std::string &var_name, NC_Data_Dims_Type &NC_dim_type, FAB &temp) |
| template<class FAB , typename DType > void | BuildFABsFromNetCDFFile (const amrex::Box &domain, const std::string &fname, amrex::Vector< std::string > nc_var_names, amrex::Vector< enum NC_Data_Dims_Type > NC_dim_types, amrex::Vector< FAB * > fab_vars, amrex::Vector< int > &success) |

Typedef Documentation

◆ PlaneVector

| using PlaneVector = amrex::Vector<amrex::FArrayBox> |

Enumeration Type Documentation

◆ NC_Data_Dims_Type

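| enum class NC_Data_Dims_Type | strong |

Enumerators: Time_SL_SN_WE, Time_BT_SN_WE, Time_SN_WE, Time_BT, Time_SL, Time, Time_BdyWidth_BT_SN, Time_BdyWidth_BT_WE, Time_BdyWidth_SN, Time_BdyWidth_WE.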
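The enumerator names appear to spell out the dimension order of a NetCDF variable, one token per dimension. A comment in fill_fab_from_arrays() supports reading BT, SN and WE as bottom_top, south_north and west_east. Reading SL as a soil-layer dimension and BdyWidth as a boundary-width dimension is an assumption. The sketch below copies the enumerators from this page and adds a hypothetical helper, `num_dims`, which is not part of ERF and simply counts the tokens in each name.

```cpp
#include <cassert>

// Enumerators as listed on this page.
enum class NC_Data_Dims_Type {
    Time_SL_SN_WE, Time_BT_SN_WE, Time_SN_WE, Time_BT, Time_SL, Time,
    Time_BdyWidth_BT_SN, Time_BdyWidth_BT_WE, Time_BdyWidth_SN, Time_BdyWidth_WE
};

// Hypothetical helper (NOT part of ERF): number of dimensions implied by
// the enumerator name, assuming each underscore-separated token is one
// NetCDF dimension.
inline int num_dims (NC_Data_Dims_Type t)
{
    switch (t) {
        case NC_Data_Dims_Type::Time:
            return 1;
        case NC_Data_Dims_Type::Time_BT:
        case NC_Data_Dims_Type::Time_SL:
            return 2;
        case NC_Data_Dims_Type::Time_SN_WE:
        case NC_Data_Dims_Type::Time_BdyWidth_SN:
        case NC_Data_Dims_Type::Time_BdyWidth_WE:
            return 3;
        case NC_Data_Dims_Type::Time_SL_SN_WE:
        case NC_Data_Dims_Type::Time_BT_SN_WE:
        case NC_Data_Dims_Type::Time_BdyWidth_BT_SN:
        case NC_Data_Dims_Type::Time_BdyWidth_BT_WE:
            return 4;
    }
    assert(false && "unknown NC_Data_Dims_Type");
    return -1;
}
```

A caller could use such a helper to sanity-check the shape of an array read from file before copying it into a FAB. Whether ERF performs a check of this kind is not stated on this page.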

Function Documentation

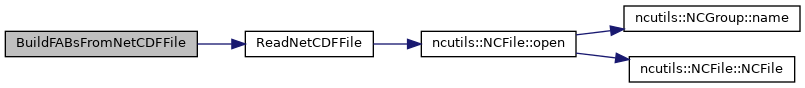

◆ BuildFABsFromNetCDFFile()

template<class FAB , typename DType >

void BuildFABsFromNetCDFFile (const amrex::Box &domain, const std::string &fname, amrex::Vector< std::string > nc_var_names, amrex::Vector< enum NC_Data_Dims_Type > NC_dim_types, amrex::Vector< FAB * > fab_vars, amrex::Vector< int > &success)

Function to read NetCDF variables and fill the corresponding Array4s.

Parameters

| Parameter | Description |
| --- | --- |
| fname | Name of the NetCDF file to be read |
| nc_var_names | Variable names in the NetCDF file |
| NC_dim_types | NetCDF data dimension types |
| fab_vars | FAB data to be filled |

Excerpt from the function body (source line 390):

fill_fab_from_arrays<FAB,DType>(iv, domain, nc_arrays, nc_var_names[iv], NC_dim_types[iv], tmp);

References fill_fab_from_arrays() and ReadNetCDFFile() (defined at ERF_NCWpsFile.H:180).

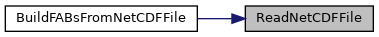

Here is the call graph for this function:

◆ BuildFABsFromWRFBdyFile()

int BuildFABsFromWRFBdyFile (const std::string &fname, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &bdy_data_xlo, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &bdy_data_xhi, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &bdy_data_ylo, amrex::Vector< amrex::Vector< amrex::FArrayBox >> &bdy_data_yhi)

◆ fill_fab_from_arrays()

template<class FAB , typename DType >

void fill_fab_from_arrays (int iv, const amrex::Box &domain, amrex::Vector< NDArray< float >> &nc_arrays, const std::string &var_name, NC_Data_Dims_Type &NC_dim_type, FAB &temp)

Helper function for reading data from NetCDF file into a provided FAB.

Parameters

| Parameter | Description |
| --- | --- |
| iv | Index of the variable to fill |
| nc_arrays | Arrays of data from the NetCDF file |
| var_name | Variable name |
| NC_dim_type | Dimension type for the variable as stored in the NetCDF file |
| temp | FAB in which to store the variable data from the NetCDF arrays |

Excerpts from the function body (source lines 273, 311, and 328):

int ns1, ns2, ns3; // bottom_top, south_north, west_east (these can be staggered or unstaggered)

amrex::Box in_box(amrex::IntVect(0,0,0), amrex::IntVect(ns3-1,ns2-1,ns1-1)); // ns1 may or may not be staggered

else if (var_name == "TSLB" || var_name == "SMOIS" || var_name == "SH2O" || var_name == "ZS" || var_name == "DZS") {
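The excerpts above show the extents ns1, ns2, ns3 (bottom_top, south_north, west_east) reversed when the index box is built, so that west_east becomes the first (i) index and bottom_top the last (k). The NetCDF C interface stores arrays with the last dimension varying fastest, so a flat (bottom_top, south_north, west_east) buffer already has i as its fastest index. The sketch below illustrates that mapping with standard containers only. `SliceShape`, `hi_corner`, `flat_index` and `value_at` are hypothetical names, not ERF or AMReX APIs. That NDArray exposes its data as one contiguous buffer in this order is an assumption.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in (NOT an ERF type) for the extents of one 3D slice.
struct SliceShape {
    int ns1; // bottom_top  (slowest-varying in the flat buffer)
    int ns2; // south_north
    int ns3; // west_east   (fastest-varying in the flat buffer)
};

// Upper corner of the index box in (i,j,k) order, mirroring
// IntVect(ns3-1, ns2-1, ns1-1) from the excerpt.
inline std::array<int,3> hi_corner (const SliceShape& s)
{
    return {s.ns3 - 1, s.ns2 - 1, s.ns1 - 1};
}

// Offset of cell (i,j,k) in a flat buffer laid out as
// (bottom_top, south_north, west_east) with the last dimension fastest.
inline std::size_t flat_index (const SliceShape& s, int i, int j, int k)
{
    assert(i >= 0 && i < s.ns3);
    assert(j >= 0 && j < s.ns2);
    assert(k >= 0 && k < s.ns1);
    const std::size_t nx = static_cast<std::size_t>(s.ns3);
    const std::size_t ny = static_cast<std::size_t>(s.ns2);
    return static_cast<std::size_t>(i)
         + nx * (static_cast<std::size_t>(j) + ny * static_cast<std::size_t>(k));
}

// Read one cell from the flat buffer (assumed contiguous, see lead-in).
inline float value_at (const std::vector<float>& flat, const SliceShape& s,
                       int i, int j, int k)
{
    assert(flat.size() == static_cast<std::size_t>(s.ns1)
                        * static_cast<std::size_t>(s.ns2)
                        * static_cast<std::size_t>(s.ns3));
    return flat[flat_index(s, i, j, k)];
}
```

With ns1 = 2, ns2 = 3, ns3 = 4, stepping i by one moves one element in the buffer, stepping j moves ns3 elements, and stepping k moves ns2*ns3 elements. How the actual function handles staggered extents or the time index is not shown on this page.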

◆ ReadNetCDFFile()

template<typename DType >

void ReadNetCDFFile (const std::string &fname, amrex::Vector< std::string > names, amrex::Vector< NDArray< DType > > &arrays, amrex::Vector< int > &success)

Excerpt from the function body (source line 210):

vname_to_read = names[n+4]; // This allows us to read "T" instead -- we will over-write this later

References AMREX_ALWAYS_ASSERT and NCFile::open() (defined at ERF_NCInterface.cpp:707).

Referenced by BuildFABsFromNetCDFFile().

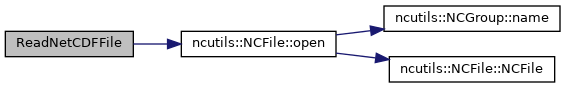

Here is the call graph for this function:

Here is the caller graph for this function:

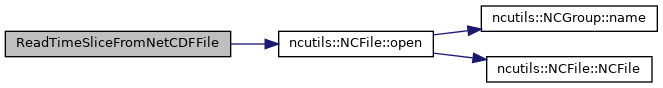

◆ ReadTimeSliceFromNetCDFFile()

template<typename DType >

void ReadTimeSliceFromNetCDFFile (const std::string &fname, const int tidx, amrex::Vector< std::string > names, amrex::Vector< NDArray< DType > > &arrays, amrex::Vector< int > &success)