const amrex::BoxArray m_pb_ba = pb_ba[lev];

m_pb_mag[boxIdx] = 0.;
m_pb_dir[boxIdx] = 0.;

amrex::Vector<amrex::Real> avg_h(n_avg,0.);
amrex::Gpu::DeviceVector<amrex::Real> avg_d(n_avg,0.);

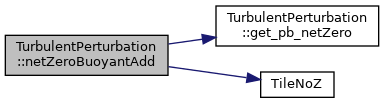

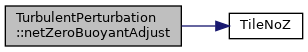

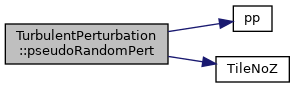

for (amrex::MFIter mfi(mf_cons, TileNoZ()); mfi.isValid(); ++mfi) {

const amrex::Box& vbx = mfi.validbox();

auto ixtype_u = mf_xvel.boxArray().ixType();
amrex::Box vbx_u = amrex::convert(vbx,ixtype_u);
amrex::Box pbx_u = amrex::convert(m_pb_ba[boxIdx], ixtype_u);
amrex::Box ubx_u = pbx_u & vbx_u;

auto ixtype_v = mf_yvel.boxArray().ixType();
amrex::Box vbx_v = amrex::convert(vbx,ixtype_v);
amrex::Box pbx_v = amrex::convert(m_pb_ba[boxIdx], ixtype_v);
amrex::Box ubx_v = pbx_v & vbx_v;

const amrex::Array4<const amrex::Real>& xvel_arry = mf_xvel.const_array(mfi);

#ifdef USE_VOLUME_AVERAGE

ParallelFor(amrex::Gpu::KernelInfo().setReduction(true), ubx_u, [=]
AMREX_GPU_DEVICE(int i, int j, int k, amrex::Gpu::Handler const& handler) noexcept {
amrex::Gpu::deviceReduceSum(&avg[0], xvel_arry(i,j,k)*norm, handler);

#ifdef USE_SLAB_AVERAGE
amrex::Box ubxSlab_lo = makeSlab(ubx_u,2,ubx_u.smallEnd(2));
amrex::Box ubxSlab_hi = makeSlab(ubx_u,2,ubx_u.bigEnd(2));

ParallelFor(amrex::Gpu::KernelInfo().setReduction(true), ubxSlab_lo, [=]
AMREX_GPU_DEVICE(int i, int j, int k, amrex::Gpu::Handler const& handler) noexcept {
amrex::Gpu::deviceReduceSum(&avg[0], xvel_arry(i,j,k)*norm_lo, handler);

ParallelFor(amrex::Gpu::KernelInfo().setReduction(true), ubxSlab_hi, [=]
AMREX_GPU_DEVICE(int i, int j, int k, amrex::Gpu::Handler const& handler) noexcept {
amrex::Gpu::deviceReduceSum(&avg[2], xvel_arry(i,j,k)*norm_hi, handler);

const amrex::Array4<const amrex::Real>& yvel_arry = mf_yvel.const_array(mfi);

#ifdef USE_VOLUME_AVERAGE

ParallelFor(amrex::Gpu::KernelInfo().setReduction(true), ubx_v, [=]
AMREX_GPU_DEVICE(int i, int j, int k, amrex::Gpu::Handler const& handler) noexcept {
amrex::Gpu::deviceReduceSum(&avg[1], yvel_arry(i,j,k)*norm, handler);

#ifdef USE_SLAB_AVERAGE
amrex::Box ubxSlab_lo = makeSlab(ubx_v,2,ubx_v.smallEnd(2));
amrex::Box ubxSlab_hi = makeSlab(ubx_v,2,ubx_v.bigEnd(2));

ParallelFor(amrex::Gpu::KernelInfo().setReduction(true), ubxSlab_lo, [=]
AMREX_GPU_DEVICE(int i, int j, int k, amrex::Gpu::Handler const& handler) noexcept {
amrex::Gpu::deviceReduceSum(&avg[1], yvel_arry(i,j,k)*norm_lo, handler);

ParallelFor(amrex::Gpu::KernelInfo().setReduction(true), ubxSlab_hi, [=]
AMREX_GPU_DEVICE(int i, int j, int k, amrex::Gpu::Handler const& handler) noexcept {
amrex::Gpu::deviceReduceSum(&avg[3], yvel_arry(i,j,k)*norm_hi, handler);

amrex::Gpu::copy(amrex::Gpu::deviceToHost, avg_d.begin(), avg_d.end(), avg_h.begin());

#ifdef USE_VOLUME_AVERAGE
m_pb_mag[boxIdx] = sqrt(avg_h[0]*avg_h[0] + avg_h[1]*avg_h[1]);

#ifdef USE_SLAB_AVERAGE
m_pb_mag[boxIdx] = 0.5*( sqrt(avg_h[0]*avg_h[0] + avg_h[1]*avg_h[1])
                       + sqrt(avg_h[2]*avg_h[2] + avg_h[3]*avg_h[3]));
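
The final step in the listing turns the reduced averages into a single horizontal velocity magnitude per perturbation box. As a minimal sketch of that arithmetic only, assuming the averages have already been copied to the host, the two variants could be written with plain `std::vector<double>` in place of the AMReX types. The helper names `volume_average_magnitude` and `slab_average_magnitude` are hypothetical and do not appear in the listing; the index layout in the comments is inferred from the `avg[...]` indices used in the reductions.

```cpp
#include <cmath>
#include <vector>

// Hypothetical helper (not in the listing): magnitude for the
// USE_VOLUME_AVERAGE path. Inferred layout:
//   avg[0] = box-averaged x-velocity, avg[1] = box-averaged y-velocity.
inline double volume_average_magnitude(const std::vector<double>& avg)
{
    return std::sqrt(avg[0]*avg[0] + avg[1]*avg[1]);
}

// Hypothetical helper (not in the listing): magnitude for the
// USE_SLAB_AVERAGE path. Inferred layout:
//   avg[0], avg[1] = x/y velocity averaged over the low-z slab,
//   avg[2], avg[3] = x/y velocity averaged over the high-z slab.
// The result is the mean of the two slab magnitudes.
inline double slab_average_magnitude(const std::vector<double>& avg)
{
    const double mag_lo = std::sqrt(avg[0]*avg[0] + avg[1]*avg[1]);
    const double mag_hi = std::sqrt(avg[2]*avg[2] + avg[3]*avg[3]);
    return 0.5 * (mag_lo + mag_hi);
}
```

Under these assumptions, averaging the two slab magnitudes differs from taking the magnitude of the averaged components: two slabs with equal and opposite velocity give a nonzero result here, whereas component averaging would cancel to zero.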

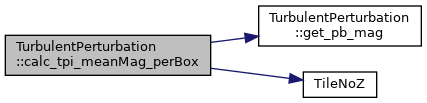

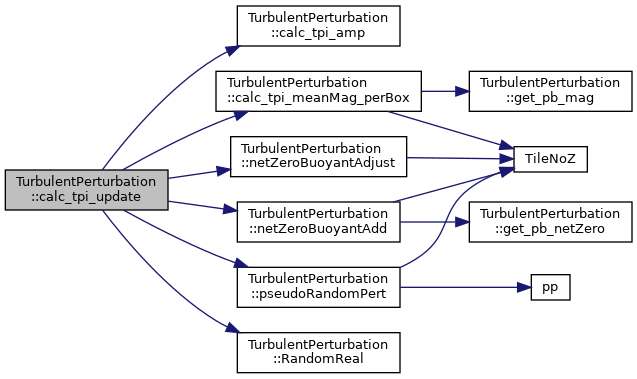
