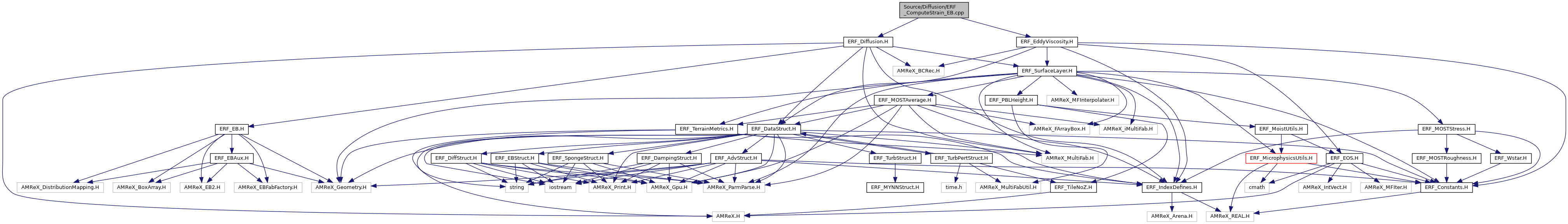

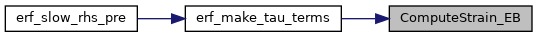

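Function for computing the strain rates with embedded boundaries (EB). The diagonal components `tau11`, `tau22`, `tau33` are evaluated at cell centers, and the off-diagonal components `tau12`, `tau13`, `tau23` on the xy-, xz-, and yz-edge boxes (`tbxxy`, `tbxxz`, `tbxyz`). Near Dirichlet domain faces and near covered EB faces, the centered differences are replaced by one-sided stencils.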
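For reference, the components stored by this function correspond to the symmetric strain-rate tensor. The mapping below follows the names used in the code (`u`, `v`, `w` for the velocity components, `tau11` through `tau23` for the stored entries) and matches the interior formulas, e.g. `tau12 = 0.5 * ( du_dy + dv_dx )`:

```latex
S_{ij} = \frac{1}{2}\left(\frac{\partial u_i}{\partial x_j} + \frac{\partial u_j}{\partial x_i}\right),
\qquad
\tau_{11} = \frac{\partial u}{\partial x},\quad
\tau_{22} = \frac{\partial v}{\partial y},\quad
\tau_{33} = \frac{\partial w}{\partial z},

\tau_{12} = \frac{1}{2}\left(\frac{\partial u}{\partial y} + \frac{\partial v}{\partial x}\right),\quad
\tau_{13} = \frac{1}{2}\left(\frac{\partial u}{\partial z} + \frac{\partial w}{\partial x}\right),\quad
\tau_{23} = \frac{1}{2}\left(\frac{\partial v}{\partial z} + \frac{\partial w}{\partial y}\right)
```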

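```cpp
// Convert the domain to each edge box's index type so that
// edge-box extents can be compared directly with the domain extents
Box domain_xy = convert(domain, tbxxy.ixType());
Box domain_xz = convert(domain, tbxxz.ixType());
Box domain_yz = convert(domain, tbxyz.ixType());

const auto& dom_lo = lbound(domain);
const auto& dom_hi = ubound(domain);
```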

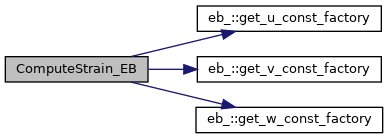

```cpp
// EB cell flags on the u-, v-, and w-face grids for the current tile
Array4<const EBCellFlag> u_cflag = (ebfact.get_u_const_factory())->getMultiEBCellFlagFab()[mfi].const_array();
Array4<const EBCellFlag> v_cflag = (ebfact.get_v_const_factory())->getMultiEBCellFlagFab()[mfi].const_array();
Array4<const EBCellFlag> w_cflag = (ebfact.get_w_const_factory())->getMultiEBCellFlagFab()[mfi].const_array();
```

```cpp
// Keep each Dirichlet flag only if this tile's edge box touches the matching domain face
xl_v_dir = ( xl_v_dir && (tbxxy.smallEnd(0) == domain_xy.smallEnd(0)) );
xh_v_dir = ( xh_v_dir && (tbxxy.bigEnd(0) == domain_xy.bigEnd(0)) );
xl_w_dir = ( xl_w_dir && (tbxxz.smallEnd(0) == domain_xz.smallEnd(0)) );
xh_w_dir = ( xh_w_dir && (tbxxz.bigEnd(0) == domain_xz.bigEnd(0)) );

yl_u_dir = ( yl_u_dir && (tbxxy.smallEnd(1) == domain_xy.smallEnd(1)) );
yh_u_dir = ( yh_u_dir && (tbxxy.bigEnd(1) == domain_xy.bigEnd(1)) );
yl_w_dir = ( yl_w_dir && (tbxyz.smallEnd(1) == domain_yz.smallEnd(1)) );
yh_w_dir = ( yh_w_dir && (tbxyz.bigEnd(1) == domain_yz.bigEnd(1)) );

zl_u_dir = ( zl_u_dir && (tbxxz.smallEnd(2) == domain_xz.smallEnd(2)) );
zh_u_dir = ( zh_u_dir && (tbxxz.bigEnd(2) == domain_xz.bigEnd(2)) );
zl_v_dir = ( zl_v_dir && (tbxyz.smallEnd(2) == domain_yz.smallEnd(2)) );
zh_v_dir = ( zh_v_dir && (tbxyz.bigEnd(2) == domain_yz.bigEnd(2)) );
```
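The flag updates above keep a Dirichlet flag only when the tile's edge box shares its low (or high) end with the domain converted to the same index type. The stand-alone sketch below illustrates that test along one direction. It assumes a hypothetical `Interval` type in place of `amrex::Box`; the helper names are illustrative, not part of ERF or AMReX. Converting a cell-centered range to nodal adds one to the big end, which is also why the high-side kernels read the face velocity at `dom_hi.x+1`.

```cpp
#include <cassert>

// Hypothetical 1-D stand-in for one direction of an amrex::Box (not the AMReX API).
struct Interval {
    int lo;
    int hi;
    bool nodal;  // false: cell-centered, true: face/node-centered
};

// Convert a cell-centered interval to the nodal index type: n cells have n+1 nodes.
Interval to_nodal(const Interval& cc) {
    assert(!cc.nodal);
    return Interval{cc.lo, cc.hi + 1, true};
}

// Keep a "Dirichlet on the low face" flag only if the tile starts at the domain's low end.
bool keep_low_flag(bool flag, const Interval& tile, const Interval& domain_same_type) {
    return flag && (tile.lo == domain_same_type.lo);
}

// Same test for the high face.
bool keep_high_flag(bool flag, const Interval& tile, const Interval& domain_same_type) {
    return flag && (tile.hi == domain_same_type.hi);
}
```

For a domain of cells 0..63, the nodal domain spans 0..64; a nodal tile spanning 32..64 keeps its high-face flag and drops its low-face flag.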

```cpp
// x-low face of the xy-edge box: one-sided wall stencil for dv/dx,
// or the centered difference when the upwind test fails
Box planexy = tbxxy; planexy.setBig(0, planexy.smallEnd(0) );
// ...
ParallelFor(planexy,[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    if (!need_to_test || u(dom_lo.x,j,k) >= 0.) {
        tau12(i,j,k) = 0.5 * ( (u(i, j, k) - u(i, j-1, k))*dxInv[1]
                             + (-(8./3.) * v(i-1,j,k) + 3. * v(i,j,k) - (1./3.) * v(i+1,j,k))*dxInv[0] );
    } else {
        tau12(i,j,k) = 0.5 * ( (u(i, j, k) - u(i, j-1, k))*dxInv[1] +
                               (v(i, j, k) - v(i-1, j, k))*dxInv[0] );
    }
});
```
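In the x-low branch, the stencil treats `v(i-1,j,k)` as the value on the boundary face and `v(i,j,k)`, `v(i+1,j,k)` as interior values at distances `dx/2` and `3*dx/2` from it. The weights (-8/3, 3, -1/3) make the one-sided derivative exact for quadratics. The sketch below checks that numerically; the function and test-polynomial names are illustrative only.

```cpp
#include <cassert>
#include <cmath>

// One-sided first derivative at a low-side wall.
// f_wall lies on the wall (x = 0); f0 and f1 are interior values at x = h/2 and x = 3h/2.
// The weights (-8/3, 3, -1/3) reproduce the derivative exactly for any quadratic.
double wall_derivative_low(double f_wall, double f0, double f1, double inv_dx) {
    assert(inv_dx > 0.0);
    return (-(8.0 / 3.0) * f_wall + 3.0 * f0 - (1.0 / 3.0) * f1) * inv_dx;
}

// Quadratic test function f(x) = 1.5 - 2x + 4x^2 and its derivative.
double quad(double x)  { return 1.5 - 2.0 * x + 4.0 * x * x; }
double dquad(double x) { return -2.0 + 8.0 * x; }
```

With `h = 0.1` (so `inv_dx = 10`), `wall_derivative_low(quad(0.0), quad(0.05), quad(0.15), 10.0)` recovers `dquad(0.0) = -2` up to rounding.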

```cpp
// x-high face of the xy-edge box: mirrored one-sided wall stencil for dv/dx,
// or the centered difference when the upwind test fails
Box planexy = tbxxy; planexy.setSmall(0, planexy.bigEnd(0) );
// ...
ParallelFor(planexy,[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    if (!need_to_test || u(dom_hi.x+1,j,k) <= 0.) {
        tau12(i,j,k) = 0.5 * ( (u(i, j, k) - u(i, j-1, k))*dxInv[1]
                             - (-(8./3.) * v(i,j,k) + 3. * v(i-1,j,k) - (1./3.) * v(i-2,j,k))*dxInv[0] );
    } else {
        tau12(i,j,k) = 0.5 * ( (u(i, j, k) - u(i, j-1, k))*dxInv[1] +
                               (v(i, j, k) - v(i-1, j, k))*dxInv[0] );
    }
});
```
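On the x-high face the stencil is mirrored: `v(i,j,k)` plays the role of the wall value and `v(i-1,j,k)`, `v(i-2,j,k)` the interior values, so the result is negated because the offsets now point in the -x direction. The velocity tests are mirrored as well: `u(dom_lo.x,j,k) >= 0.` on the low face and `u(dom_hi.x+1,j,k) <= 0.` on the high face both select flow entering the domain. The sketch below captures both ideas; `wall_derivative_high` and `use_one_sided` are hypothetical helpers, and `need_to_test` is treated as a plain input.

```cpp
#include <cassert>
#include <cmath>

// Mirrored one-sided derivative at a high-side wall.
// f_wall lies on the wall (x = L); f0, f1 are interior values at x = L - h/2 and L - 3h/2.
double wall_derivative_high(double f_wall, double f0, double f1, double inv_dx) {
    assert(inv_dx > 0.0);
    return -(-(8.0 / 3.0) * f_wall + 3.0 * f0 - (1.0 / 3.0) * f1) * inv_dx;
}

// Mirror of the kernel condition: use the wall stencil when no upwind test is
// required, otherwise only for inflow (normal velocity >= 0 on a low face,
// <= 0 on a high face).
bool use_one_sided(bool need_to_test, bool high_side, double u_normal) {
    if (!need_to_test) return true;
    return high_side ? (u_normal <= 0.0) : (u_normal >= 0.0);
}
```

For `f(x) = 1.5 - 2x + 4x^2` with the wall at `x = 1` and `h = 0.1`, the samples are 3.5, 3.21 and 2.69, and `wall_derivative_high(3.5, 3.21, 2.69, 10.0)` returns the exact slope `f'(1) = 6` up to rounding.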

```cpp
// x-low face of the xz-edge box: one-sided wall stencil for dw/dx,
// or the centered difference when the upwind test fails
Box planexz = tbxxz; planexz.setBig(0, planexz.smallEnd(0) );
// ...
ParallelFor(planexz,[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    Real du_dz = (u(i, j, k) - u(i, j, k-1))*dxInv[2];
    if (!need_to_test || u(dom_lo.x,j,k) >= 0.) {
        tau13(i,j,k) = 0.5 * ( du_dz
                             + (-(8./3.) * w(i-1,j,k) + 3. * w(i,j,k) - (1./3.) * w(i+1,j,k))*dxInv[0] );
    } else {
        tau13(i,j,k) = 0.5 * ( du_dz
                             + (w(i, j, k) - w(i-1, j, k))*dxInv[0] );
    }

    // tau13i, when allocated, receives only the du/dz half
    if (tau13i) tau13i(i,j,k) = 0.5 * du_dz;
});
```

```cpp
// x-high face of the xz-edge box: mirrored one-sided wall stencil for dw/dx,
// or the centered difference when the upwind test fails
Box planexz = tbxxz; planexz.setSmall(0, planexz.bigEnd(0) );
// ...
ParallelFor(planexz,[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    Real du_dz = (u(i, j, k) - u(i, j, k-1))*dxInv[2];
    if (!need_to_test || u(dom_hi.x+1,j,k) <= 0.) {
        tau13(i,j,k) = 0.5 * ( du_dz
                             - (-(8./3.) * w(i,j,k) + 3. * w(i-1,j,k) - (1./3.) * w(i-2,j,k))*dxInv[0] );
    } else {
        tau13(i,j,k) = 0.5 * ( du_dz
                             + (w(i, j, k) - w(i-1, j, k))*dxInv[0] );
    }

    if (tau13i) tau13i(i,j,k) = 0.5 * du_dz;
});
```

```cpp
// y-low face of the xy-edge box: one-sided wall stencil for du/dy,
// or the centered difference when the upwind test fails
Box planexy = tbxxy; planexy.setBig(1, planexy.smallEnd(1) );
// ...
ParallelFor(planexy,[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    if (!need_to_test || v(i,dom_lo.y,k) >= 0.) {
        tau12(i,j,k) = 0.5 * ( (-(8./3.) * u(i,j-1,k) + 3. * u(i,j,k) - (1./3.) * u(i,j+1,k))*dxInv[1]
                             + (v(i, j, k) - v(i-1, j, k))*dxInv[0] );
    } else {
        tau12(i,j,k) = 0.5 * ( (u(i, j, k) - u(i, j-1, k))*dxInv[1]
                             + (v(i, j, k) - v(i-1, j, k))*dxInv[0] );
    }
});
```

```cpp
// y-high face of the xy-edge box: mirrored one-sided wall stencil for du/dy,
// or the centered difference when the upwind test fails
Box planexy = tbxxy; planexy.setSmall(1, planexy.bigEnd(1) );
// ...
ParallelFor(planexy,[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    if (!need_to_test || v(i,dom_hi.y+1,k) <= 0.) {
        tau12(i,j,k) = 0.5 * ( -(-(8./3.) * u(i,j,k) + 3. * u(i,j-1,k) - (1./3.) * u(i,j-2,k))*dxInv[1]
                             + (v(i, j, k) - v(i-1, j, k))*dxInv[0] );
    } else {
        tau12(i,j,k) = 0.5 * ( (u(i, j, k) - u(i, j-1, k))*dxInv[1]
                             + (v(i, j, k) - v(i-1, j, k))*dxInv[0] );
    }
});
```

```cpp
// y-low face of the yz-edge box: one-sided wall stencil for dw/dy,
// or the centered difference when the upwind test fails
Box planeyz = tbxyz; planeyz.setBig(1, planeyz.smallEnd(1) );
// ...
ParallelFor(planeyz,[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    Real dv_dz = (v(i, j, k) - v(i, j, k-1))*dxInv[2];
    if (!need_to_test || v(i,dom_lo.y,k) >= 0.) {
        tau23(i,j,k) = 0.5 * ( dv_dz
                             + (-(8./3.) * w(i,j-1,k) + 3. * w(i,j,k) - (1./3.) * w(i,j+1,k))*dxInv[1] );
    } else {
        tau23(i,j,k) = 0.5 * ( dv_dz
                             + (w(i, j, k) - w(i, j-1, k))*dxInv[1] );
    }

    // tau23i, when allocated, receives only the dv/dz half
    if (tau23i) tau23i(i,j,k) = 0.5 * dv_dz;
});
```

```cpp
// y-high face of the yz-edge box: mirrored one-sided wall stencil for dw/dy,
// or the centered difference when the upwind test fails
Box planeyz = tbxyz; planeyz.setSmall(1, planeyz.bigEnd(1) );
// ...
ParallelFor(planeyz,[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    Real dv_dz = (v(i, j, k) - v(i, j, k-1))*dxInv[2];
    if (!need_to_test || v(i,dom_hi.y+1,k) <= 0.) {
        tau23(i,j,k) = 0.5 * ( dv_dz
                             - (-(8./3.) * w(i,j,k) + 3. * w(i,j-1,k) - (1./3.) * w(i,j-2,k))*dxInv[1] );
    } else {
        tau23(i,j,k) = 0.5 * ( dv_dz
                             + (w(i, j, k) - w(i, j-1, k))*dxInv[1] );
    }

    if (tau23i) tau23i(i,j,k) = 0.5 * dv_dz;
});
```

```cpp
// z-low face of the xz-edge box: one-sided wall stencil for du/dz (no upwind test)
Box planexz = tbxxz; planexz.setBig(2, planexz.smallEnd(2) );
// ...
ParallelFor(planexz,[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    Real du_dz = (-(8./3.) * u(i,j,k-1) + 3. * u(i,j,k) - (1./3.) * u(i,j,k+1))*dxInv[2];
    tau13(i,j,k) = 0.5 * ( du_dz
                         + (w(i, j, k) - w(i-1, j, k))*dxInv[0] );

    if (tau13i) tau13i(i,j,k) = 0.5 * du_dz;
});
```

```cpp
// z-high face of the xz-edge box: mirrored one-sided wall stencil for du/dz (no upwind test)
Box planexz = tbxxz; planexz.setSmall(2, planexz.bigEnd(2) );
// ...
ParallelFor(planexz,[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    Real du_dz = -(-(8./3.) * u(i,j,k) + 3. * u(i,j,k-1) - (1./3.) * u(i,j,k-2))*dxInv[2];
    tau13(i,j,k) = 0.5 * ( du_dz
                         + (w(i, j, k) - w(i-1, j, k))*dxInv[0] );

    if (tau13i) tau13i(i,j,k) = 0.5 * du_dz;
});
```

```cpp
// z-low face of the yz-edge box: one-sided wall stencil for dv/dz (no upwind test)
Box planeyz = tbxyz; planeyz.setBig(2, planeyz.smallEnd(2) );
// ...
ParallelFor(planeyz,[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    Real dv_dz = (-(8./3.) * v(i,j,k-1) + 3. * v(i,j,k) - (1./3.) * v(i,j,k+1))*dxInv[2];
    tau23(i,j,k) = 0.5 * ( dv_dz
                         + (w(i, j, k) - w(i, j-1, k))*dxInv[1] );

    if (tau23i) tau23i(i,j,k) = 0.5 * dv_dz;
});
```

```cpp
// z-high face of the yz-edge box: mirrored one-sided wall stencil for dv/dz (no upwind test)
Box planeyz = tbxyz; planeyz.setSmall(2, planeyz.bigEnd(2) );
// ...
ParallelFor(planeyz,[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    Real dv_dz = -(-(8./3.) * v(i,j,k) + 3. * v(i,j,k-1) - (1./3.) * v(i,j,k-2))*dxInv[2];
    tau23(i,j,k) = 0.5 * ( dv_dz
                         + (w(i, j, k) - w(i, j-1, k))*dxInv[1] );

    if (tau23i) tau23i(i,j,k) = 0.5 * dv_dz;
});
```
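Unlike the x and y faces, the z-face kernels above apply the one-sided stencil unconditionally; there is no `need_to_test` branch. One way to see why the three-point wall stencil is used rather than a simple two-point wall difference is a convergence check: halving the spacing should cut the error about 4x for the three-point form (second order) and about 2x for the two-point form (first order). The sketch below assumes a smooth test function `exp(x)` with the wall at `x = 0`; all names are illustrative.

```cpp
#include <cassert>
#include <cmath>

// Three-point one-sided wall derivative (second order), samples at 0, h/2, 3h/2.
double wall_deriv_3pt(double (*f)(double), double h) {
    assert(h > 0.0);
    return (-(8.0 / 3.0) * f(0.0) + 3.0 * f(0.5 * h) - (1.0 / 3.0) * f(1.5 * h)) / h;
}

// Two-point wall difference (first order), shown only for comparison.
double wall_deriv_2pt(double (*f)(double), double h) {
    assert(h > 0.0);
    return (f(0.5 * h) - f(0.0)) / (0.5 * h);
}

// Error ratio when h is halved: about 4 for second order, about 2 for first order.
double error_ratio(double (*deriv)(double (*)(double), double),
                   double (*f)(double), double exact, double h) {
    double e1 = std::fabs(deriv(f, h) - exact);
    double e2 = std::fabs(deriv(f, 0.5 * h) - exact);
    return e1 / e2;
}

// Smooth test function; its derivative at x = 0 is exactly 1.
double test_fn(double x) { return std::exp(x); }
```

With `h = 0.05`, `error_ratio(wall_deriv_3pt, test_fn, 1.0, 0.05)` comes out close to 4 and `error_ratio(wall_deriv_2pt, test_fn, 1.0, 0.05)` close to 2.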

```cpp
// Cell-centered diagonal strains. If one of the two faces bounding the cell is
// covered, differentiate with three faces on the uncovered side instead.
ParallelFor(bxcc, [=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    Real du_dx;
    if (u_cflag(i+1,j,k).isCovered() && u_cflag(i,j,k).isSingleValued()) {
        du_dx = ( 2.0*u(i, j, k) - 3.0*u(i-1, j, k) + u(i-2, j, k))*dxInv[0];
    } else if (u_cflag(i,j,k).isCovered() && u_cflag(i+1,j,k).isSingleValued()) {
        du_dx = (- 2.0*u(i+1, j, k) + 3.0*u(i+2, j, k) - u(i+3, j, k))*dxInv[0];
    } else {
        du_dx = (u(i+1, j, k) - u(i, j, k))*dxInv[0];
    }

    Real dv_dy;
    if (v_cflag(i,j+1,k).isCovered() && v_cflag(i,j,k).isSingleValued()) {
        dv_dy = ( 2.0*v(i, j, k) - 3.0*v(i, j-1, k) + v(i, j-2, k))*dxInv[1];
    } else if (v_cflag(i,j,k).isCovered() && v_cflag(i,j+1,k).isSingleValued()) {
        dv_dy = (- 2.0*v(i, j+1, k) + 3.0*v(i, j+2, k) - v(i, j+3, k))*dxInv[1];
    } else {
        dv_dy = (v(i, j+1, k) - v(i, j, k))*dxInv[1];
    }

    Real dw_dz;
    if (w_cflag(i,j,k+1).isCovered() && w_cflag(i,j,k).isSingleValued()) {
        dw_dz = ( 2.0*w(i, j, k) - 3.0*w(i, j, k-1) + w(i, j, k-2))*dxInv[2];
    } else if (w_cflag(i,j,k).isCovered() && w_cflag(i,j,k+1).isSingleValued()) {
        dw_dz = (- 2.0*w(i, j, k+1) + 3.0*w(i, j, k+2) - w(i, j, k+3))*dxInv[2];
    } else {
        dw_dz = (w(i, j, k+1) - w(i, j, k))*dxInv[2];
    }

    tau11(i,j,k) = du_dx;
    tau22(i,j,k) = dv_dy;
    tau33(i,j,k) = dw_dz;
});
```
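When a face bounding the cell is covered by the embedded boundary, the cell-centered kernel cannot difference across the cell, so it uses three faces on the uncovered side. For faces at `-h/2`, `-3h/2`, `-5h/2` from the cell center, the weights (2, -3, 1)/h give the derivative at the center exactly for quadratics; the mirrored weights (-2, 3, -1)/h do the same for faces at `+h/2`, `+3h/2`, `+5h/2`. The sketch below checks both; the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Derivative at a cell center from three low-side faces (at -h/2, -3h/2, -5h/2),
// the case where the high face of the cell is covered.
double center_deriv_from_low(double f_m1, double f_m3, double f_m5, double inv_dx) {
    assert(inv_dx > 0.0);
    return (2.0 * f_m1 - 3.0 * f_m3 + f_m5) * inv_dx;
}

// Mirror: three high-side faces (at +h/2, +3h/2, +5h/2),
// the case where the low face of the cell is covered.
double center_deriv_from_high(double f_p1, double f_p3, double f_p5, double inv_dx) {
    assert(inv_dx > 0.0);
    return (-2.0 * f_p1 + 3.0 * f_p3 - f_p5) * inv_dx;
}

// Quadratic test function and its derivative.
double q(double x)  { return 0.7 + 1.25 * x - 3.0 * x * x; }
double dq(double x) { return 1.25 - 6.0 * x; }
```

With the cell center at `x = 0` and `h = 0.1`, both one-sided formulas return `dq(0.0) = 1.25` up to rounding.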

```cpp
// Off-diagonal strains tau12, tau13, tau23 (one lambda each). For each derivative,
// if one of the two faces straddling the edge is covered, extrapolate from three
// faces on the uncovered side; otherwise use the centered two-point difference.
// ...
[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    Real du_dy;
    if (u_cflag(i,j,k).isCovered() && u_cflag(i,j-1,k).isSingleValued()) {
        du_dy = ( 2.0*u(i, j-1, k) - 3.0*u(i, j-2, k) + u(i, j-3, k))*dxInv[1];
    } else if (u_cflag(i,j-1,k).isCovered() && u_cflag(i,j,k).isSingleValued()) {
        du_dy = (- 2.0*u(i, j, k) + 3.0*u(i, j+1, k) - u(i, j+2, k))*dxInv[1];
    } else {
        du_dy = (u(i, j, k) - u(i, j-1, k))*dxInv[1];
    }

    Real dv_dx;
    if (v_cflag(i,j,k).isCovered() && v_cflag(i-1,j,k).isSingleValued()) {
        dv_dx = ( 2.0*v(i-1, j, k) - 3.0*v(i-2, j, k) + v(i-3, j, k))*dxInv[0];
    } else if (v_cflag(i-1,j,k).isCovered() && v_cflag(i,j,k).isSingleValued()) {
        dv_dx = (- 2.0*v(i, j, k) + 3.0*v(i+1, j, k) - v(i+2, j, k))*dxInv[0];
    } else {
        dv_dx = (v(i, j, k) - v(i-1, j, k))*dxInv[0];
    }

    tau12(i,j,k) = 0.5 * ( du_dy + dv_dx );
},
[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    Real du_dz;
    if (u_cflag(i,j,k).isCovered() && u_cflag(i,j,k-1).isSingleValued()) {
        du_dz = ( 2.0*u(i, j, k-1) - 3.0*u(i, j, k-2) + u(i, j, k-3))*dxInv[2];
    } else if (u_cflag(i,j,k-1).isCovered() && u_cflag(i,j,k).isSingleValued()) {
        du_dz = (- 2.0*u(i, j, k) + 3.0*u(i, j, k+1) - u(i, j, k+2))*dxInv[2];
    } else {
        du_dz = (u(i, j, k) - u(i, j, k-1))*dxInv[2];
    }

    Real dw_dx;
    if (w_cflag(i,j,k).isCovered() && w_cflag(i-1,j,k).isSingleValued()) {
        dw_dx = ( 2.0*w(i-1, j, k) - 3.0*w(i-2, j, k) + w(i-3, j, k))*dxInv[0];
    } else if (w_cflag(i-1,j,k).isCovered() && w_cflag(i,j,k).isSingleValued()) {
        dw_dx = (- 2.0*w(i, j, k) + 3.0*w(i+1, j, k) - w(i+2, j, k))*dxInv[0];
    } else {
        dw_dx = (w(i, j, k) - w(i-1, j, k))*dxInv[0];
    }

    tau13(i,j,k) = 0.5 * ( du_dz + dw_dx );

    if (tau13i) tau13i(i,j,k) = 0.5 * du_dz;
},
[=] AMREX_GPU_DEVICE (int i, int j, int k) noexcept {
    Real dv_dz;
    if (v_cflag(i,j,k).isCovered() && v_cflag(i,j,k-1).isSingleValued()) {
        dv_dz = ( 2.0*v(i, j, k-1) - 3.0*v(i, j, k-2) + v(i, j, k-3))*dxInv[2];
    } else if (v_cflag(i,j,k-1).isCovered() && v_cflag(i,j,k).isSingleValued()) {
        dv_dz = (- 2.0*v(i, j, k) + 3.0*v(i, j, k+1) - v(i, j, k+2))*dxInv[2];
    } else {
        dv_dz = (v(i, j, k) - v(i, j, k-1))*dxInv[2];
    }

    Real dw_dy;
    if (w_cflag(i,j,k).isCovered() && w_cflag(i,j-1,k).isSingleValued()) {
        dw_dy = ( 2.0*w(i, j-1, k) - 3.0*w(i, j-2, k) + w(i, j-3, k))*dxInv[1];
    } else if (w_cflag(i,j-1,k).isCovered() && w_cflag(i,j,k).isSingleValued()) {
        dw_dy = (- 2.0*w(i, j, k) + 3.0*w(i, j+1, k) - w(i, j+2, k))*dxInv[1];
    } else {
        dw_dy = (w(i, j, k) - w(i, j-1, k))*dxInv[1];
    }

    tau23(i,j,k) = 0.5 * ( dv_dz + dw_dy );

    if (tau23i) tau23i(i,j,k) = 0.5 * dv_dz;
});
```
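Each derivative in the edge kernels selects one of three stencils from the EB state of the two faces that straddle the edge. A minimal sketch of that selection follows. It uses a hypothetical `FaceState` enum in place of `amrex::EBCellFlag` (whose `isCovered()` and `isSingleValued()` queries the kernels call) and a 1-D array of face values; it is an illustration of the branching, not ERF code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the EB state of a face (not the AMReX type).
enum class FaceState { Regular, SingleValued, Covered };

// Derivative at the edge between faces j-1 and j, mirroring the kernel branches:
//  - face j covered, face j-1 cut:  extrapolate from faces j-1, j-2, j-3
//  - face j-1 covered, face j cut:  extrapolate from faces j, j+1, j+2
//  - otherwise:                     centered two-point difference
// Covered face values are never read in the one-sided branches.
double edge_derivative(const std::vector<double>& f,
                       const std::vector<FaceState>& s,
                       std::size_t j, double inv_dx) {
    assert(f.size() == s.size() && j >= 3 && j + 2 < f.size());
    if (s[j] == FaceState::Covered && s[j - 1] == FaceState::SingleValued) {
        return (2.0 * f[j - 1] - 3.0 * f[j - 2] + f[j - 3]) * inv_dx;
    } else if (s[j - 1] == FaceState::Covered && s[j] == FaceState::SingleValued) {
        return (-2.0 * f[j] + 3.0 * f[j + 1] - f[j + 2]) * inv_dx;
    }
    return (f[j] - f[j - 1]) * inv_dx;
}
```

For linear data `f[k] = 2k` with unit spacing, all three branches return 2, even when the covered face holds a garbage value such as `1.0e30`, because that value is skipped.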

- `tau11`, `tau22`, `tau33`, `tau12`, `tau13`, `tau23`: definition at `ERF_DataStruct.H:32`
- `amrex::GpuArray<Real, AMREX_SPACEDIM> dxInv`: definition at `ERF_InitCustomPertVels_ParticleTests.H:16`


- `const auto& dom_lo`, `const auto& dom_hi`: definitions at `ERF_SetupVertDiff.H:1` and `ERF_SetupVertDiff.H:2`
- `amrex::Real Real`: definition at `ERF_ShocInterface.H:19`
- `eb_aux_ const* get_u_const_factory() const noexcept`: definition at `ERF_EB.H:50`
- `eb_aux_ const* get_v_const_factory() const noexcept`: definition at `ERF_EB.H:51`
- `eb_aux_ const* get_w_const_factory() const noexcept`: definition at `ERF_EB.H:52`
- `xvel_bc`, `yvel_bc`, `zvel_bc`: definitions at `ERF_IndexDefines.H:87`, `:88`, `:89`
- `ext_dir`, `ext_dir_ingested`, `ext_dir_upwind`: definitions at `ERF_IndexDefines.H:209`, `:212`, `:217`