ERF_MakeTauTerms.cpp File Reference

#include "AMReX_ArrayLim.H"

#include "AMReX_BCRec.H"

#include "AMReX_GpuContainers.H"

#include "ERF_TI_slow_headers.H"

#include "ERF_EOS.H"

#include "ERF_Utils.H"

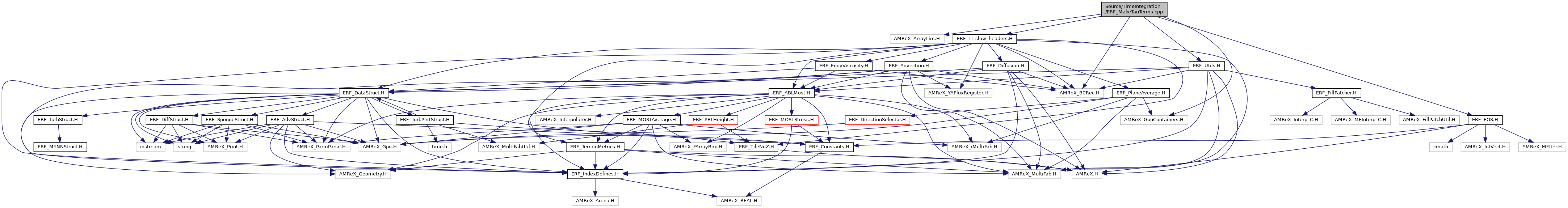

Include dependency graph for ERF_MakeTauTerms.cpp:

Functions

| Return type | Function |
| --- | --- |
| void | erf_make_tau_terms (int level, int nrk, const Vector< BCRec > &domain_bcs_type_h, const MultiFab &z_phys_nd, Vector< MultiFab > &S_data, const MultiFab &xvel, const MultiFab &yvel, const MultiFab &zvel, Vector< std::unique_ptr< MultiFab >> &Tau_lev, Vector< std::unique_ptr< MultiFab >> &Tau_corr_lev, MultiFab *SmnSmn, MultiFab *eddyDiffs, const Geometry geom, const SolverChoice &solverChoice, std::unique_ptr< SurfaceLayer > &, Gpu::DeviceVector< Real > &stretched_dz_d, const MultiFab &detJ, Vector< std::unique_ptr< MultiFab >> &mapfac, const MultiFab &ax, const MultiFab &ay, const MultiFab &az, const eb_ &ebfact) |
| void | copy_surface_tau_for_implicit (Vector< std::unique_ptr< MultiFab >> &Tau_lev, Vector< std::unique_ptr< MultiFab >> &Tau_corr_lev) |

Function Documentation

◆ copy_surface_tau_for_implicit()

Parameters of copy_surface_tau_for_implicit (return type void), in declaration order:

| Type | Name |
| --- | --- |
| Vector< std::unique_ptr< MultiFab >> & | Tau_lev |
| Vector< std::unique_ptr< MultiFab >> & | Tau_corr_lev |

    ParallelFor(bx, [=] AMREX_GPU_DEVICE(int i, int j, int k) noexcept
    {
        const auto prob_lo = geomdata.ProbLo();
        const auto dx      = geomdata.CellSize();
        const Real x = (prob_lo[0] + (i + 0.5) * dx[0]) / mf_m(i, j, 0);
        const Real z = z_cc(i, j, k);
        Real L = std::sqrt(std::pow((x - x_c)/x_r, 2) + std::pow((z - z_c)/z_r, 2));
        if (L <= 1.0) {
            Real dT = T_pert * (std::cos(PI * L) + 1.0) / 2.0;
            Real Tbar_hse = p_hse(i, j, k) / (R_d * r_hse(i, j, k));
            Real theta_perturbed = (Tbar_hse + dT) * std::pow(p_0 / p_hse(i, j, k), rdOcp);
            Real theta_0 = (Tbar_hse) * std::pow(p_0 / p_hse(i, j, k), rdOcp);
            if (const_rho) {
                state_pert(i, j, k, RhoTheta_comp) = r_hse(i, j, k) * (theta_perturbed - theta_0);
            } else {
                state_pert(i, j, k, Rho_comp) = getRhoThetagivenP(p_hse(i, j, k)) / theta_perturbed - r_hse(i, j, k);
            }
        }
    })

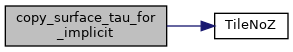

Here is the call graph for this function:

◆ erf_make_tau_terms()

Parameters of erf_make_tau_terms (return type void), in declaration order:

| Type | Name |
| --- | --- |
| int | level |
| int | nrk |
| const Vector< BCRec > & | domain_bcs_type_h |
| const MultiFab & | z_phys_nd |
| Vector< MultiFab > & | S_data |
| const MultiFab & | xvel |
| const MultiFab & | yvel |
| const MultiFab & | zvel |
| Vector< std::unique_ptr< MultiFab >> & | Tau_lev |
| Vector< std::unique_ptr< MultiFab >> & | Tau_corr_lev |
| MultiFab * | SmnSmn |
| MultiFab * | eddyDiffs |
| const Geometry | geom |
| const SolverChoice & | solverChoice |
| std::unique_ptr< SurfaceLayer > & | (unnamed) |
| Gpu::DeviceVector< Real > & | stretched_dz_d |
| const MultiFab & | detJ |
| Vector< std::unique_ptr< MultiFab >> & | mapfac |
| const MultiFab & | ax |
| const MultiFab & | ay |
| const MultiFab & | az |
| const eb_ & | ebfact |

const bool do_implicit = (solverChoice.vert_implicit_fac[nrk] > 0) && solverChoice.implicit_momentum_diffusion;

const Array4<Real const>& cell_data = l_use_constAlpha ? S_data[IntVars::cons].const_array(mfi) :

S11.resize( bxcc,1,The_Async_Arena()); S22.resize( bxcc,1,The_Async_Arena()); S33.resize( bxcc,1,The_Async_Arena());

S12.resize(tbxxy,1,The_Async_Arena()); S13.resize(tbxxz,1,The_Async_Arena()); S23.resize(tbxyz,1,The_Async_Arena());

Array4<Real> tau11 = Tau_lev[TauType::tau11]->array(mfi); Array4<Real> tau22 = Tau_lev[TauType::tau22]->array(mfi);

Array4<Real> tau33 = Tau_lev[TauType::tau33]->array(mfi); Array4<Real> tau12 = Tau_lev[TauType::tau12]->array(mfi);

Array4<Real> tau13 = Tau_lev[TauType::tau13]->array(mfi); Array4<Real> tau23 = Tau_lev[TauType::tau23]->array(mfi);

S21.resize(tbxxy,1,The_Async_Arena()); S31.resize(tbxxz,1,The_Async_Arena()); S32.resize(tbxyz,1,The_Async_Arena());

er_arr(i,j,k) = (u(i+1, j , k )/mf_uy(i+1,j,0) - u(i, j, k)/mf_uy(i,j,0))*dxInv[0] * mfsq + // == du / (dη/dy) * (1/dξ) * (dξ/dx)*(dη/dy) = du/dx

(v(i , j+1, k )/mf_vx(i,j+1,0) - v(i, j, k)/mf_vx(i,j,0))*dxInv[1] * mfsq + // == dv / (dξ/dx) * (1/dη) * (dξ/dx)*(dη/dy) = dv/dy

S21.resize(tbxxy,1,The_Async_Arena()); S31.resize(tbxxz,1,The_Async_Arena()); S32.resize(tbxyz,1,The_Async_Arena());

Real expansionRate = (u(i+1,j ,k)/mf_uy(i+1,j,0)*met_u_h_zeta_hi - u(i,j,k)/mf_uy(i,j,0)*met_u_h_zeta_lo)*dxInv[0]*mfsq +

(v(i ,j+1,k)/mf_vx(i,j+1,0)*met_v_h_zeta_hi - v(i,j,k)/mf_vx(i,j,0)*met_v_h_zeta_lo)*dxInv[1]*mfsq +
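The expansion-rate fragments above build the horizontal part of the velocity divergence from face velocities, map factors, and inverse cell sizes. As a rough illustration, the sketch below evaluates the flat-terrain form for a single cell. The function `horizontal_expansion_rate` and its plain scalar arguments are hypothetical stand-ins for the `Array4` accesses in the listing; `mfsq` is taken to be the product (dξ/dx)(dη/dy) named in the listing's comments. The vertical term that the truncated expressions go on to add is left out, as are the `met_*_h_zeta_*` weights that the terrain variant applies to each face velocity.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: horizontal part of the expansion rate for one cell.
// u_lo/u_hi are the x-face velocities at i and i+1, v_lo/v_hi are the
// y-face velocities at j and j+1. mf_uy_* and mf_vx_* are the map factors
// sampled at those same faces, mfsq is the map-factor product from the
// listing's comments, and dx_inv/dy_inv are inverse computational cell sizes.
double horizontal_expansion_rate(double u_lo, double u_hi,
                                 double v_lo, double v_hi,
                                 double mf_uy_lo, double mf_uy_hi,
                                 double mf_vx_lo, double mf_vx_hi,
                                 double mfsq,
                                 double dx_inv, double dy_inv)
{
    // Difference of map-factor-scaled face velocities in x, i.e. du/dx.
    const double dudx = (u_hi / mf_uy_hi - u_lo / mf_uy_lo) * dx_inv * mfsq;
    // Same construction in y, i.e. dv/dy.
    const double dvdy = (v_hi / mf_vx_hi - v_lo / mf_vx_lo) * dy_inv * mfsq;
    return dudx + dvdy;
}
```

With all map factors equal to one, the expression reduces to a plain finite-difference divergence, which is a convenient sanity check: a linear field u = 2x, v = 3y should give 5 regardless of cell size.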

void ComputeStrain_EB(const MFIter &mfi, Box bxcc, Box tbxxy, Box tbxxz, Box tbxyz, Box domain, const Array4< const Real > &u, const Array4< const Real > &v, const Array4< const Real > &w, Array4< Real > &tau11, Array4< Real > &tau22, Array4< Real > &tau33, Array4< Real > &tau12, Array4< Real > &tau13, Array4< Real > &tau23, const GpuArray< Real, AMREX_SPACEDIM > &dxInv, const BCRec *bc_ptr, const eb_ &ebfact, Array4< Real > &tau13i, Array4< Real > &tau23i)

Definition: ERF_ComputeStrain_EB.cpp:28

void ComputeStrain_N(Box bxcc, Box tbxxy, Box tbxxz, Box tbxyz, Box domain, const Array4< const Real > &u, const Array4< const Real > &v, const Array4< const Real > &w, Array4< Real > &tau11, Array4< Real > &tau22, Array4< Real > &tau33, Array4< Real > &tau12, Array4< Real > &tau13, Array4< Real > &tau23, const GpuArray< Real, AMREX_SPACEDIM > &dxInv, const Array4< const Real > &mf_mx, const Array4< const Real > &mf_ux, const Array4< const Real > &mf_vx, const Array4< const Real > &mf_my, const Array4< const Real > &mf_uy, const Array4< const Real > &mf_vy, const BCRec *bc_ptr, Array4< Real > &tau13i, Array4< Real > &tau23i)

Definition: ERF_ComputeStrain_N.cpp:31

void ComputeStrain_S(Box bxcc, Box tbxxy, Box tbxxz, Box tbxyz, Box domain, const Array4< const Real > &u, const Array4< const Real > &v, const Array4< const Real > &w, Array4< Real > &tau11, Array4< Real > &tau22, Array4< Real > &tau33, Array4< Real > &tau12, Array4< Real > &tau21, Array4< Real > &tau13, Array4< Real > &tau31, Array4< Real > &tau23, Array4< Real > &tau32, const Gpu::DeviceVector< Real > &stretched_dz_d, const GpuArray< Real, AMREX_SPACEDIM > &dxInv, const Array4< const Real > &mf_mx, const Array4< const Real > &mf_ux, const Array4< const Real > &mf_vx, const Array4< const Real > &mf_my, const Array4< const Real > &mf_uy, const Array4< const Real > &mf_vy, const BCRec *bc_ptr, Array4< Real > &tau13i, Array4< Real > &tau23i)

Definition: ERF_ComputeStrain_S.cpp:39

void ComputeStrain_T(Box bxcc, Box tbxxy, Box tbxxz, Box tbxyz, Box domain, const Array4< const Real > &u, const Array4< const Real > &v, const Array4< const Real > &w, Array4< Real > &tau11, Array4< Real > &tau22, Array4< Real > &tau33, Array4< Real > &tau12, Array4< Real > &tau21, Array4< Real > &tau13, Array4< Real > &tau31, Array4< Real > &tau23, Array4< Real > &tau32, const Array4< const Real > &z_nd, const Array4< const Real > &detJ, const GpuArray< Real, AMREX_SPACEDIM > &dxInv, const Array4< const Real > &mf_mx, const Array4< const Real > &mf_ux, const Array4< const Real > &mf_vx, const Array4< const Real > &mf_my, const Array4< const Real > &mf_uy, const Array4< const Real > &mf_vy, const BCRec *bc_ptr, Array4< Real > &tau13i, Array4< Real > &tau23i)

Definition: ERF_ComputeStrain_T.cpp:39

void ComputeStressConsVisc_EB(Box bxcc, Box tbxxy, Box tbxxz, Box tbxyz, Real mu_eff, const Array4< const Real > &cell_data, Array4< Real > &tau11, Array4< Real > &tau22, Array4< Real > &tau33, Array4< Real > &tau12, Array4< Real > &tau13, Array4< Real > &tau23, const Array4< const Real > &er_arr, Array4< const Real > &vfrac, Array4< Real > &tau13i, Array4< Real > &tau23i, Array4< Real > &tau33i)

Definition: ERF_ComputeStress_EB.cpp:26

void ComputeStressConsVisc_N(Box bxcc, Box tbxxy, Box tbxxz, Box tbxyz, Real mu_eff, const Array4< const Real > &cell_data, Array4< Real > &tau11, Array4< Real > &tau22, Array4< Real > &tau33, Array4< Real > &tau12, Array4< Real > &tau13, Array4< Real > &tau23, const Array4< const Real > &er_arr, Array4< Real > &tau13i, Array4< Real > &tau23i, Array4< Real > &tau33i)

Definition: ERF_ComputeStress_N.cpp:26

void ComputeStressVarVisc_N(Box bxcc, Box tbxxy, Box tbxxz, Box tbxyz, Real mu_eff, const Array4< const Real > &mu_turb, const Array4< const Real > &cell_data, Array4< Real > &tau11, Array4< Real > &tau22, Array4< Real > &tau33, Array4< Real > &tau12, Array4< Real > &tau13, Array4< Real > &tau23, const Array4< const Real > &er_arr, Array4< Real > &tau13i, Array4< Real > &tau23i, Array4< Real > &tau33i)

Definition: ERF_ComputeStress_N.cpp:126

void ComputeStressVarVisc_S(Box bxcc, Box tbxxy, Box tbxxz, Box tbxyz, Real mu_eff, const Array4< const Real > &mu_turb, const Array4< const Real > &cell_data, Array4< Real > &tau11, Array4< Real > &tau22, Array4< Real > &tau33, Array4< Real > &tau12, Array4< Real > &tau21, Array4< Real > &tau13, Array4< Real > &tau31, Array4< Real > &tau23, Array4< Real > &tau32, const Array4< const Real > &er_arr, const Array4< const Real > &mf_mx, const Array4< const Real > &mf_ux, const Array4< const Real > &mf_vx, const Array4< const Real > &mf_my, const Array4< const Real > &mf_uy, const Array4< const Real > &mf_vy, Array4< Real > &tau13i, Array4< Real > &tau23i, Array4< Real > &tau33i)

Definition: ERF_ComputeStress_S.cpp:162

void ComputeStressConsVisc_S(Box bxcc, Box tbxxy, Box tbxxz, Box tbxyz, Real mu_eff, const Array4< const Real > &cell_data, Array4< Real > &tau11, Array4< Real > &tau22, Array4< Real > &tau33, Array4< Real > &tau12, Array4< Real > &tau21, Array4< Real > &tau13, Array4< Real > &tau31, Array4< Real > &tau23, Array4< Real > &tau32, const Array4< const Real > &er_arr, const Array4< const Real > &mf_mx, const Array4< const Real > &mf_ux, const Array4< const Real > &mf_vx, const Array4< const Real > &mf_my, const Array4< const Real > &mf_uy, const Array4< const Real > &mf_vy, Array4< Real > &tau13i, Array4< Real > &tau23i, Array4< Real > &tau33i)

Definition: ERF_ComputeStress_S.cpp:32

void ComputeStressVarVisc_T(Box bxcc, Box tbxxy, Box tbxxz, Box tbxyz, Real mu_eff, const Array4< const Real > &mu_turb, const Array4< const Real > &cell_data, Array4< Real > &tau11, Array4< Real > &tau22, Array4< Real > &tau33, Array4< Real > &tau12, Array4< Real > &tau21, Array4< Real > &tau13, Array4< Real > &tau31, Array4< Real > &tau23, Array4< Real > &tau32, const Array4< const Real > &er_arr, const Array4< const Real > &z_nd, const Array4< const Real > &detJ, const GpuArray< Real, AMREX_SPACEDIM > &dxInv, const Array4< const Real > &mf_mx, const Array4< const Real > &mf_ux, const Array4< const Real > &mf_vx, const Array4< const Real > &mf_my, const Array4< const Real > &mf_uy, const Array4< const Real > &mf_vy, Array4< Real > &tau13i, Array4< Real > &tau23i, Array4< Real > &tau33i)

Definition: ERF_ComputeStress_T.cpp:363

void ComputeStressConsVisc_T(Box bxcc, Box tbxxy, Box tbxxz, Box tbxyz, Real mu_eff, const Array4< const Real > &cell_data, Array4< Real > &tau11, Array4< Real > &tau22, Array4< Real > &tau33, Array4< Real > &tau12, Array4< Real > &tau21, Array4< Real > &tau13, Array4< Real > &tau31, Array4< Real > &tau23, Array4< Real > &tau32, const Array4< const Real > &er_arr, const Array4< const Real > &z_nd, const Array4< const Real > &detJ, const GpuArray< Real, AMREX_SPACEDIM > &dxInv, const Array4< const Real > &mf_mx, const Array4< const Real > &mf_ux, const Array4< const Real > &mf_vx, const Array4< const Real > &mf_my, const Array4< const Real > &mf_uy, const Array4< const Real > &mf_vy, Array4< Real > &tau13i, Array4< Real > &tau23i, Array4< Real > &tau33i)

Definition: ERF_ComputeStress_T.cpp:32
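The `ComputeStrain_*` and `ComputeStressConsVisc_*` signatures above share the same `tau11` ... `tau23` arrays, together with an effective viscosity `mu_eff` and the expansion rate `er_arr`, which suggests that strain rates are converted to stresses in place. The sketch below shows one textbook way such a conversion could look for a single point. The `Sym3` struct and `newtonian_stress` function are hypothetical, and the sign convention and one-third trace removal are assumptions based on the standard Newtonian closure, not something this page confirms.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical container for the six independent components of a
// symmetric 3x3 tensor at a single point.
struct Sym3 {
    double t11, t22, t33;
    double t12, t13, t23;
};

// Assumed closure: tau_ij = -mu_eff * (S_ij - delta_ij * expansion_rate / 3).
// Only the diagonal components receive the expansion-rate correction.
Sym3 newtonian_stress(const Sym3& strain, double mu_eff, double expansion_rate)
{
    const double third_er = expansion_rate / 3.0;
    Sym3 tau;
    tau.t11 = -mu_eff * (strain.t11 - third_er);
    tau.t22 = -mu_eff * (strain.t22 - third_er);
    tau.t33 = -mu_eff * (strain.t33 - third_er);
    tau.t12 = -mu_eff * strain.t12;
    tau.t13 = -mu_eff * strain.t13;
    tau.t23 = -mu_eff * strain.t23;
    return tau;
}
```

When `expansion_rate` equals the trace of the strain tensor, the resulting stress is trace-free, which is the property the one-third correction is meant to enforce in this form.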

AMREX_GPU_DEVICE AMREX_FORCE_INLINE amrex::Real ComputeSmnSmn(int &i, int &j, int &k, const amrex::Array4< amrex::Real const > &tau11, const amrex::Array4< amrex::Real const > &tau22, const amrex::Array4< amrex::Real const > &tau33, const amrex::Array4< amrex::Real const > &tau12, const amrex::Array4< amrex::Real const > &tau13, const amrex::Array4< amrex::Real const > &tau23)

Definition: ERF_EddyViscosity.H:63
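`ComputeSmnSmn` takes the six independent strain components and returns a single `Real`. As a simplified sketch of the underlying quantity, the code below forms the full contraction of a symmetric strain-rate tensor at one point. The `StrainRate` struct and `strain_contraction` function are hypothetical, and the sketch assumes all six components are already available at the same location, so any interpolation between staggered locations that the real routine may perform is not represented.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical container for the six independent components of a symmetric
// strain-rate tensor, all assumed to be co-located at one point.
struct StrainRate {
    double s11, s22, s33;
    double s12, s13, s23;
};

// Full contraction S_mn S_mn. Each off-diagonal component appears twice in
// the double sum because the tensor is symmetric (S_12 = S_21, and so on).
double strain_contraction(const StrainRate& s)
{
    return s.s11 * s.s11 + s.s22 * s.s22 + s.s33 * s.s33
         + 2.0 * (s.s12 * s.s12 + s.s13 * s.s13 + s.s23 * s.s23);
}
```

The factor of two on the off-diagonal terms is the part most often dropped by mistake when only the six independent components are stored.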

amrex::GpuArray< Real, AMREX_SPACEDIM > dxInv

Definition: ERF_InitCustomPertVels_ParticleTests.H:16

AMREX_GPU_DEVICE AMREX_FORCE_INLINE amrex::Real OmegaFromW(int &i, int &j, int &k, amrex::Real w, const amrex::Array4< const amrex::Real > &u_arr, const amrex::Array4< const amrex::Real > &v_arr, const amrex::Array4< const amrex::Real > &mf_u, const amrex::Array4< const amrex::Real > &mf_v, const amrex::Array4< const amrex::Real > &z_nd, const amrex::GpuArray< amrex::Real, AMREX_SPACEDIM > &dxInv)

Definition: ERF_TerrainMetrics.H:412

AMREX_GPU_DEVICE AMREX_FORCE_INLINE amrex::Real Compute_h_zeta_AtIface(const int &i, const int &j, const int &k, const amrex::GpuArray< amrex::Real, AMREX_SPACEDIM > &cellSizeInv, const amrex::Array4< const amrex::Real > &z_nd)

Definition: ERF_TerrainMetrics.H:102

AMREX_GPU_DEVICE AMREX_FORCE_INLINE amrex::Real Compute_h_zeta_AtJface(const int &i, const int &j, const int &k, const amrex::GpuArray< amrex::Real, AMREX_SPACEDIM > &cellSizeInv, const amrex::Array4< const amrex::Real > &z_nd)

Definition: ERF_TerrainMetrics.H:142

const std::unique_ptr< amrex::EBFArrayBoxFactory > & get_const_factory() const noexcept

Definition: ERF_EB.H:46

Definition: ERF_DiffStruct.H:19

amrex::Vector< amrex::Real > vert_implicit_fac

Definition: ERF_DataStruct.H:1102

bool implicit_momentum_diffusion

Definition: ERF_DataStruct.H:1105
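The two members above, `vert_implicit_fac` and `implicit_momentum_diffusion`, are combined in the listing to decide whether the current Runge-Kutta stage treats vertical momentum diffusion implicitly. The sketch below reproduces that test in isolation. `SolverChoiceSketch` is a hypothetical stand-in that carries only these two fields (with `double` in place of `amrex::Real`), and the factor values used with it are illustrative, not defaults taken from the code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the two SolverChoice members used by the test.
struct SolverChoiceSketch {
    std::vector<double> vert_implicit_fac;   // one factor per RK stage
    bool implicit_momentum_diffusion;        // global switch
};

// Mirrors the do_implicit expression from the listing: the stage must have a
// positive implicit factor and the global switch must be enabled.
bool use_implicit_diffusion(const SolverChoiceSketch& sc, int nrk)
{
    return (sc.vert_implicit_fac[static_cast<std::size_t>(nrk)] > 0)
        && sc.implicit_momentum_diffusion;
}
```

Under this logic a stage with a zero factor stays explicit even when the switch is on, and turning the switch off disables the implicit path for every stage.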

Definition: ERF_TurbStruct.H:42

Referenced by erf_slow_rhs_pre().

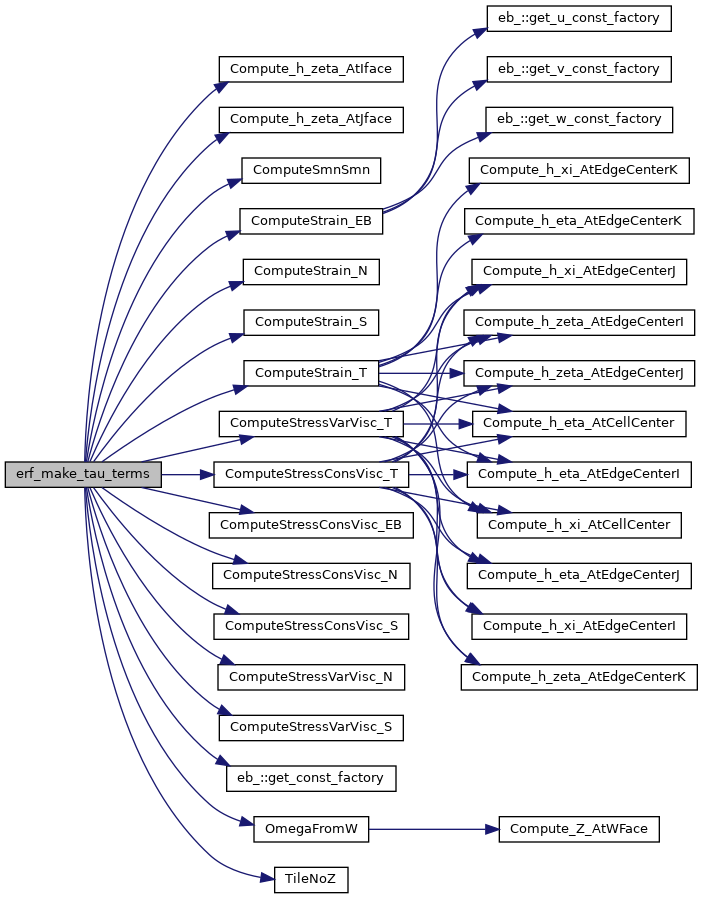

Here is the call graph for this function:

Here is the caller graph for this function: