ERF_ComputeStrain_N.cpp File Reference

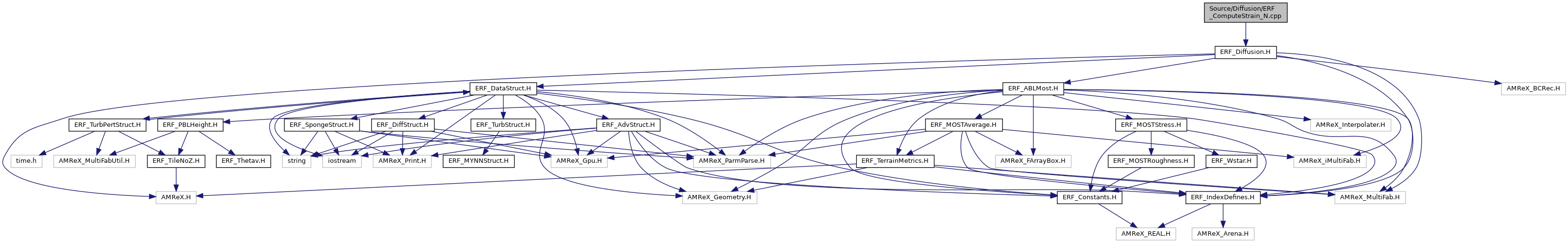

Include dependency graph for ERF_ComputeStrain_N.cpp:

Functions

| Return type | Function |
| --- | --- |
| void | ComputeStrain_N (Box bxcc, Box tbxxy, Box tbxxz, Box tbxyz, Box domain, const Array4< const Real > &u, const Array4< const Real > &v, const Array4< const Real > &w, Array4< Real > &tau11, Array4< Real > &tau22, Array4< Real > &tau33, Array4< Real > &tau12, Array4< Real > &tau13, Array4< Real > &tau23, const GpuArray< Real, AMREX_SPACEDIM > &dxInv, const Array4< const Real > &mf_mx, const Array4< const Real > &mf_ux, const Array4< const Real > &mf_vx, const Array4< const Real > &mf_my, const Array4< const Real > &mf_uy, const Array4< const Real > &mf_vy, const BCRec *bc_ptr, Array4< Real > &tau13i, Array4< Real > &tau23i) |

Function Documentation

ComputeStrain_N()

void ComputeStrain_N (

| Parameter type | Parameter name |
| --- | --- |
| Box | bxcc |
| Box | tbxxy |
| Box | tbxxz |
| Box | tbxyz |
| Box | domain |
| const Array4< const Real > & | u |
| const Array4< const Real > & | v |
| const Array4< const Real > & | w |
| Array4< Real > & | tau11 |
| Array4< Real > & | tau22 |
| Array4< Real > & | tau33 |
| Array4< Real > & | tau12 |
| Array4< Real > & | tau13 |
| Array4< Real > & | tau23 |
| const GpuArray< Real, AMREX_SPACEDIM > & | dxInv |
| const Array4< const Real > & | mf_mx |
| const Array4< const Real > & | mf_ux |
| const Array4< const Real > & | mf_vx |
| const Array4< const Real > & | mf_my |
| const Array4< const Real > & | mf_uy |
| const Array4< const Real > & | mf_vy |
| const BCRec * | bc_ptr |
| Array4< Real > & | tau13i |
| Array4< Real > & | tau23i |

)

Function for computing the strain rates without terrain.

- Parameters
  - [in] bxcc: cell center box for tau_ii
  - [in] tbxxy: nodal xy box for tau_12
  - [in] tbxxz: nodal xz box for tau_13
  - [in] tbxyz: nodal yz box for tau_23
  - [in] u: x-direction velocity
  - [in] v: y-direction velocity
  - [in] w: z-direction velocity
  - [out] tau11: 11 strain
  - [out] tau22: 22 strain
  - [out] tau33: 33 strain
  - [out] tau12: 12 strain
  - [out] tau13: 13 strain
  - [out] tau23: 23 strain
  - [in] bc_ptr: container with boundary condition types
  - [in] dxInv: inverse cell size array
  - [in] mf_m: map factor at cell center
  - [in] mf_u: map factor at x-face
  - [in] mf_v: map factor at y-face
  - [in] tau13i: contribution to strain from du/dz
  - [in] tau23i: contribution to strain from dv/dz
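As a rough illustration of what the strain outputs represent, the sketch below evaluates the 11, 22 and 12 components in two dimensions on a staggered grid, assuming the standard symmetric definition S_ij = 0.5 * (du_i/dx_j + du_j/dx_i). It is not the ERF implementation: it omits the map factors, the third dimension, and all boundary handling, and the names `Grid`, `u_face`, `v_face`, `strain11`, `strain22` and `strain12` are hypothetical helpers introduced only for this example.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical uniform 2D grid spacing (not an AMReX type).
struct Grid { double dx; double dy; };

// Hypothetical linear velocity field sampled at staggered locations.
// u lives on x-faces: x = i*dx, y = (j+0.5)*dy.
double u_face(const Grid& g, int i, int j) {
    const double x = i * g.dx;
    const double y = (j + 0.5) * g.dy;
    return 2.0 * x + 3.0 * y;
}

// v lives on y-faces: x = (i+0.5)*dx, y = j*dy.
double v_face(const Grid& g, int i, int j) {
    const double x = (i + 0.5) * g.dx;
    const double y = j * g.dy;
    return 5.0 * x - 1.0 * y;
}

// 11 component at the cell center (i,j): du/dx from the two bounding x-faces.
double strain11(const Grid& g, int i, int j) {
    return (u_face(g, i + 1, j) - u_face(g, i, j)) / g.dx;
}

// 22 component at the cell center (i,j): dv/dy from the two bounding y-faces.
double strain22(const Grid& g, int i, int j) {
    return (v_face(g, i, j + 1) - v_face(g, i, j)) / g.dy;
}

// 12 component at the xy node (i,j): 0.5 * (du/dy + dv/dx),
// each difference centered on the node.
double strain12(const Grid& g, int i, int j) {
    const double du_dy = (u_face(g, i, j) - u_face(g, i, j - 1)) / g.dy;
    const double dv_dx = (v_face(g, i, j) - v_face(g, i - 1, j)) / g.dx;
    return 0.5 * (du_dy + dv_dx);
}
```

For the linear field used here the centered differences are exact, so `strain11` returns 2, `strain22` returns -1 and `strain12` returns 0.5 * (3 + 5) = 4 at any index. The diagonal components sit at cell centers and the off-diagonal component at a node, which mirrors the split between `bxcc` and the nodal boxes in the parameter list.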

bool need_to_test = (bc_ptr[BCVars::yvel_bc].lo(0) == ERFBCType::ext_dir_upwind) ? true : false;

// note: tilebox xy should be nodal, so i|i-1|i-2 at the bigEnd is analogous to i-1|i|i+1 at the smallEnd

bool need_to_test = (bc_ptr[BCVars::yvel_bc].hi(0) == ERFBCType::ext_dir_upwind) ? true : false;

bool need_to_test = (bc_ptr[BCVars::zvel_bc].lo(0) == ERFBCType::ext_dir_upwind) ? true : false;

// note: tilebox xz should be nodal, so i|i-1|i-2 at the bigEnd is analogous to i-1|i|i+1 at the smallEnd

bool need_to_test = (bc_ptr[BCVars::zvel_bc].hi(0) == ERFBCType::ext_dir_upwind) ? true : false;

bool need_to_test = (bc_ptr[BCVars::xvel_bc].lo(1) == ERFBCType::ext_dir_upwind) ? true : false;

tau12(i,j,k) = 0.5 * ( (-(8./3.) * u(i,j-1,k) + 3. * u(i,j,k) - (1./3.) * u(i,j+1,k))*dxInv[1]*mfy

// note: tilebox xy should be nodal, so j|j-1|j-2 at the bigEnd is analogous to j-1|j|j+1 at the smallEnd

bool need_to_test = (bc_ptr[BCVars::xvel_bc].hi(1) == ERFBCType::ext_dir_upwind) ? true : false;

tau12(i,j,k) = 0.5 * ( -(-(8./3.) * u(i,j,k) + 3. * u(i,j-1,k) - (1./3.) * u(i,j-2,k))*dxInv[1]*mfy

bool need_to_test = (bc_ptr[BCVars::zvel_bc].lo(1) == ERFBCType::ext_dir_upwind) ? true : false;

// note: tilebox yz should be nodal, so j|j-1|j-2 at the bigEnd is analogous to j-1|j|j+1 at the smallEnd

bool need_to_test = (bc_ptr[BCVars::zvel_bc].hi(1) == ERFBCType::ext_dir_upwind) ? true : false;

// note: tilebox xz should be nodal, so k|k-1|k-2 at the bigEnd is analogous to k-1|k|k+1 at the smallEnd

// note: tilebox yz should be nodal, so k|k-1|k-2 at the bigEnd is analogous to k-1|k|k+1 at the smallEnd
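The coefficients -(8/3), 3 and -(1/3) in the boundary expressions for tau12 match a second-order one-sided difference in which the first sample lies on the boundary face and the next two lie half a cell and one and a half cells inside the domain. The sketch below checks that reading on a quadratic profile. It assumes that the outermost index holds the boundary-face value, which the excerpts above do not state explicitly, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// One-sided derivative at a low boundary face located at y = 0.
// u_b : value on the boundary face (assumed to be stored at index j-1)
// u_0 : value at y = h/2   (index j)
// u_1 : value at y = 3h/2  (index j+1)
// dyInv = 1/h
double one_sided_lo(double u_b, double u_0, double u_1, double dyInv) {
    return (-(8.0 / 3.0) * u_b + 3.0 * u_0 - (1.0 / 3.0) * u_1) * dyInv;
}

// Mirror image at a high boundary face: same weights, opposite sign.
// u_b  : value on the boundary face (assumed to be stored at index j)
// u_m1 : value half a cell inside         (index j-1)
// u_m2 : value one and a half cells inside (index j-2)
double one_sided_hi(double u_b, double u_m1, double u_m2, double dyInv) {
    return -(-(8.0 / 3.0) * u_b + 3.0 * u_m1 - (1.0 / 3.0) * u_m2) * dyInv;
}

// Hypothetical quadratic test profile and its exact derivative.
double quad(double y)       { return 2.0 + 3.0 * y - 4.0 * y * y; }
double quad_deriv(double y) { return 3.0 - 8.0 * y; }
```

With h = 0.1, `one_sided_lo(quad(0.0), quad(0.05), quad(0.15), 10.0)` reproduces `quad_deriv(0.0)`, and `one_sided_hi(quad(1.0), quad(0.95), quad(0.85), 10.0)` reproduces `quad_deriv(1.0)`, both up to round-off. That agreement is what a second-order stencil should give on a quadratic.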


Referenced by ERF::advance_dycore() and erf_make_tau_terms().

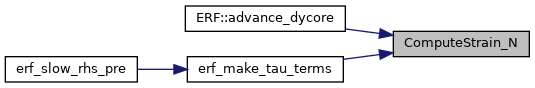

Here is the call graph for this function:

Here is the caller graph for this function: