#include <ERF.H>

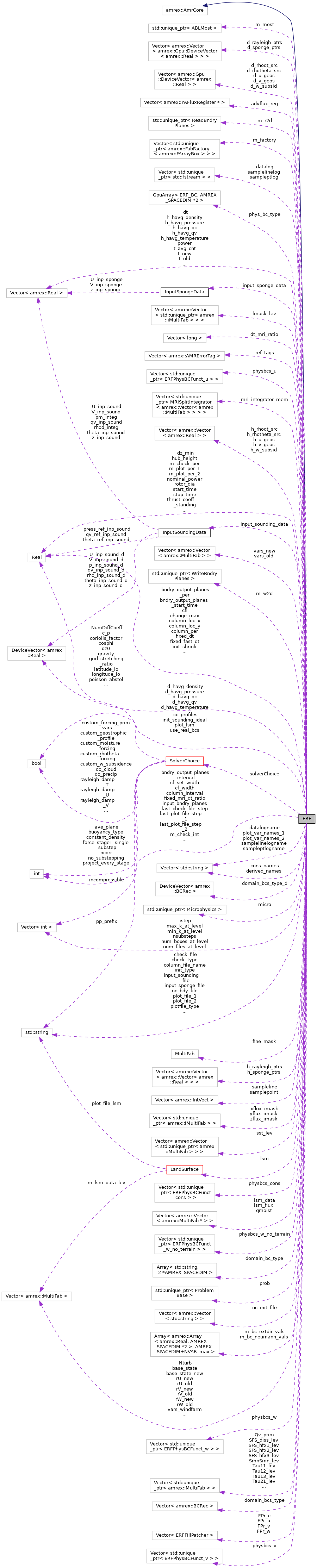

Public Member Functions | |

| ERF () | |

| ~ERF () override | |

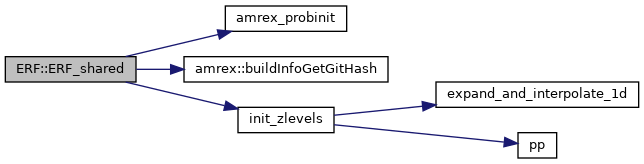

| void | ERF_shared () |

| ERF (ERF &&) noexcept=delete | |

| ERF & | operator= (ERF &&other) noexcept=delete |

| ERF (const ERF &other)=delete | |

| ERF & | operator= (const ERF &other)=delete |

| void | Evolve () |

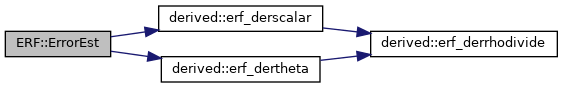

| void | ErrorEst (int lev, amrex::TagBoxArray &tags, amrex::Real time, int ngrow) override |

| void | HurricaneTracker (int lev, amrex::Real time, const amrex::MultiFab &cc_vel, const amrex::Real velmag_threshold, amrex::TagBoxArray *tags=nullptr) |

| bool | FindInitialEye (int lev, const amrex::MultiFab &cc_vel, const amrex::Real velmag_threshold, amrex::Real &eye_x, amrex::Real &eye_y) |

| std::string | MakeVTKFilename (int nstep) |

| std::string | MakeVTKFilename_TrackerCircle (int nstep) |

| std::string | MakeVTKFilename_EyeTracker_xy (int nstep) |

| std::string | MakeFilename_EyeTracker_latlon (int nstep) |

| std::string | MakeFilename_EyeTracker_maxvel (int nstep) |

| std::string | MakeFilename_EyeTracker_minpressure (int nstep) |

| void | WriteVTKPolyline (const std::string &filename, amrex::Vector< std::array< amrex::Real, 2 >> &points_xy) |

| void | WriteLinePlot (const std::string &filename, amrex::Vector< std::array< amrex::Real, 2 >> &points_xy) |

| void | InitData () |

| void | InitData_pre () |

| void | InitData_post () |

| void | Interp2DArrays (int lev, const amrex::BoxArray &my_ba2d, const amrex::DistributionMapping &my_dm) |

| void | WriteMyEBSurface () |

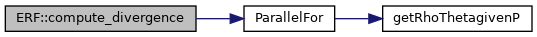

| void | compute_divergence (int lev, amrex::MultiFab &rhs, amrex::Array< amrex::MultiFab const *, AMREX_SPACEDIM > rho0_u_const, amrex::Geometry const &geom_at_lev) |

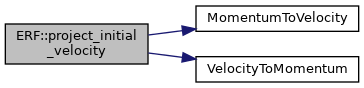

| void | project_initial_velocity (int lev, amrex::Real time, amrex::Real dt) |

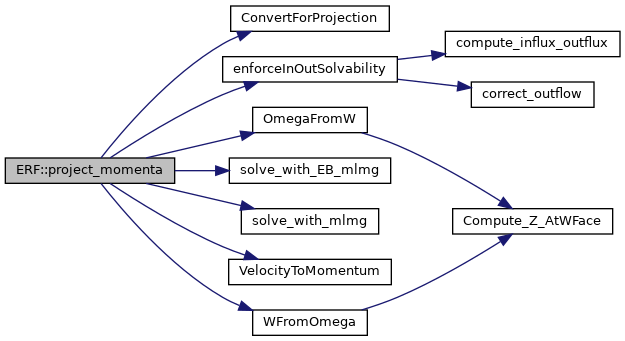

| void | project_momenta (int lev, amrex::Real l_time, amrex::Real l_dt, amrex::Vector< amrex::MultiFab > &vars) |

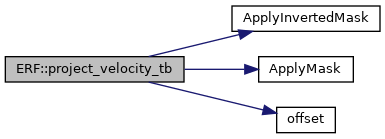

| void | project_velocity_tb (int lev, amrex::Real dt, amrex::Vector< amrex::MultiFab > &vars) |

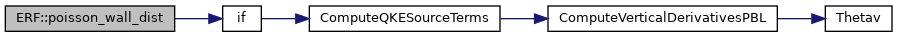

| void | poisson_wall_dist (int lev) |

| void | make_subdomains (const amrex::BoxList &ba, amrex::Vector< amrex::BoxArray > &bins) |

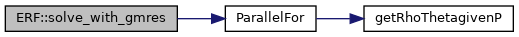

| void | solve_with_gmres (int lev, const amrex::Box &subdomain, amrex::MultiFab &rhs, amrex::MultiFab &p, amrex::Array< amrex::MultiFab, AMREX_SPACEDIM > &fluxes, amrex::MultiFab &ax_sub, amrex::MultiFab &ay_sub, amrex::MultiFab &az_sub, amrex::MultiFab &, amrex::MultiFab &znd_sub) |

| void | ImposeBCsOnPhi (int lev, amrex::MultiFab &phi, const amrex::Box &subdomain) |

| void | init_only (int lev, amrex::Real time) |

| void | restart () |

| void | check_state_for_nans (amrex::MultiFab const &S) |

| void | check_vels_for_nans (amrex::MultiFab const &xvel, amrex::MultiFab const &yvel, amrex::MultiFab const &zvel) |

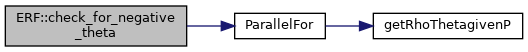

| void | check_for_negative_theta (amrex::MultiFab &S) |

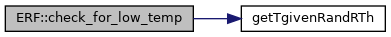

| void | check_for_low_temp (amrex::MultiFab &S) |

| bool | writeNow (const amrex::Real cur_time, const int nstep, const int plot_int, const amrex::Real plot_per, const amrex::Real dt_0, amrex::Real &last_file_time) |

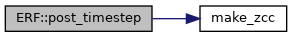

| void | post_timestep (int nstep, amrex::Real time, amrex::Real dt_lev) |

| void | sum_integrated_quantities (amrex::Real time) |

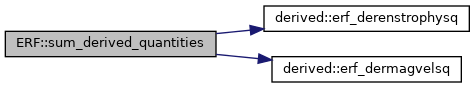

| void | sum_derived_quantities (amrex::Real time) |

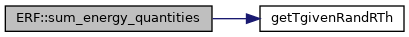

| void | sum_energy_quantities (amrex::Real time) |

| void | write_1D_profiles (amrex::Real time) |

| void | write_1D_profiles_stag (amrex::Real time) |

| amrex::Real | cloud_fraction (amrex::Real time) |

| void | FillBdyCCVels (amrex::Vector< amrex::MultiFab > &mf_cc_vel, int levc=0) |

| void | sample_points (int lev, amrex::Real time, amrex::IntVect cell, amrex::MultiFab &mf) |

| void | sample_lines (int lev, amrex::Real time, amrex::IntVect cell, amrex::MultiFab &mf) |

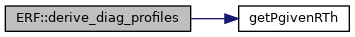

| void | derive_diag_profiles (amrex::Real time, amrex::Gpu::HostVector< amrex::Real > &h_avg_u, amrex::Gpu::HostVector< amrex::Real > &h_avg_v, amrex::Gpu::HostVector< amrex::Real > &h_avg_w, amrex::Gpu::HostVector< amrex::Real > &h_avg_rho, amrex::Gpu::HostVector< amrex::Real > &h_avg_th, amrex::Gpu::HostVector< amrex::Real > &h_avg_ksgs, amrex::Gpu::HostVector< amrex::Real > &h_avg_Kmv, amrex::Gpu::HostVector< amrex::Real > &h_avg_Khv, amrex::Gpu::HostVector< amrex::Real > &h_avg_qv, amrex::Gpu::HostVector< amrex::Real > &h_avg_qc, amrex::Gpu::HostVector< amrex::Real > &h_avg_qr, amrex::Gpu::HostVector< amrex::Real > &h_avg_wqv, amrex::Gpu::HostVector< amrex::Real > &h_avg_wqc, amrex::Gpu::HostVector< amrex::Real > &h_avg_wqr, amrex::Gpu::HostVector< amrex::Real > &h_avg_qi, amrex::Gpu::HostVector< amrex::Real > &h_avg_qs, amrex::Gpu::HostVector< amrex::Real > &h_avg_qg, amrex::Gpu::HostVector< amrex::Real > &h_avg_uu, amrex::Gpu::HostVector< amrex::Real > &h_avg_uv, amrex::Gpu::HostVector< amrex::Real > &h_avg_uw, amrex::Gpu::HostVector< amrex::Real > &h_avg_vv, amrex::Gpu::HostVector< amrex::Real > &h_avg_vw, amrex::Gpu::HostVector< amrex::Real > &h_avg_ww, amrex::Gpu::HostVector< amrex::Real > &h_avg_uth, amrex::Gpu::HostVector< amrex::Real > &h_avg_vth, amrex::Gpu::HostVector< amrex::Real > &h_avg_wth, amrex::Gpu::HostVector< amrex::Real > &h_avg_thth, amrex::Gpu::HostVector< amrex::Real > &h_avg_ku, amrex::Gpu::HostVector< amrex::Real > &h_avg_kv, amrex::Gpu::HostVector< amrex::Real > &h_avg_kw, amrex::Gpu::HostVector< amrex::Real > &h_avg_p, amrex::Gpu::HostVector< amrex::Real > &h_avg_pu, amrex::Gpu::HostVector< amrex::Real > &h_avg_pv, amrex::Gpu::HostVector< amrex::Real > &h_avg_pw, amrex::Gpu::HostVector< amrex::Real > &h_avg_wthv) |

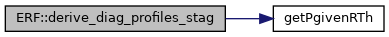

| void | derive_diag_profiles_stag (amrex::Real time, amrex::Gpu::HostVector< amrex::Real > &h_avg_u, amrex::Gpu::HostVector< amrex::Real > &h_avg_v, amrex::Gpu::HostVector< amrex::Real > &h_avg_w, amrex::Gpu::HostVector< amrex::Real > &h_avg_rho, amrex::Gpu::HostVector< amrex::Real > &h_avg_th, amrex::Gpu::HostVector< amrex::Real > &h_avg_ksgs, amrex::Gpu::HostVector< amrex::Real > &h_avg_Kmv, amrex::Gpu::HostVector< amrex::Real > &h_avg_Khv, amrex::Gpu::HostVector< amrex::Real > &h_avg_qv, amrex::Gpu::HostVector< amrex::Real > &h_avg_qc, amrex::Gpu::HostVector< amrex::Real > &h_avg_qr, amrex::Gpu::HostVector< amrex::Real > &h_avg_wqv, amrex::Gpu::HostVector< amrex::Real > &h_avg_wqc, amrex::Gpu::HostVector< amrex::Real > &h_avg_wqr, amrex::Gpu::HostVector< amrex::Real > &h_avg_qi, amrex::Gpu::HostVector< amrex::Real > &h_avg_qs, amrex::Gpu::HostVector< amrex::Real > &h_avg_qg, amrex::Gpu::HostVector< amrex::Real > &h_avg_uu, amrex::Gpu::HostVector< amrex::Real > &h_avg_uv, amrex::Gpu::HostVector< amrex::Real > &h_avg_uw, amrex::Gpu::HostVector< amrex::Real > &h_avg_vv, amrex::Gpu::HostVector< amrex::Real > &h_avg_vw, amrex::Gpu::HostVector< amrex::Real > &h_avg_ww, amrex::Gpu::HostVector< amrex::Real > &h_avg_uth, amrex::Gpu::HostVector< amrex::Real > &h_avg_vth, amrex::Gpu::HostVector< amrex::Real > &h_avg_wth, amrex::Gpu::HostVector< amrex::Real > &h_avg_thth, amrex::Gpu::HostVector< amrex::Real > &h_avg_ku, amrex::Gpu::HostVector< amrex::Real > &h_avg_kv, amrex::Gpu::HostVector< amrex::Real > &h_avg_kw, amrex::Gpu::HostVector< amrex::Real > &h_avg_p, amrex::Gpu::HostVector< amrex::Real > &h_avg_pu, amrex::Gpu::HostVector< amrex::Real > &h_avg_pv, amrex::Gpu::HostVector< amrex::Real > &h_avg_pw, amrex::Gpu::HostVector< amrex::Real > &h_avg_wthv) |

| void | derive_stress_profiles (amrex::Gpu::HostVector< amrex::Real > &h_avg_tau11, amrex::Gpu::HostVector< amrex::Real > &h_avg_tau12, amrex::Gpu::HostVector< amrex::Real > &h_avg_tau13, amrex::Gpu::HostVector< amrex::Real > &h_avg_tau22, amrex::Gpu::HostVector< amrex::Real > &h_avg_tau23, amrex::Gpu::HostVector< amrex::Real > &h_avg_tau33, amrex::Gpu::HostVector< amrex::Real > &h_avg_hfx3, amrex::Gpu::HostVector< amrex::Real > &h_avg_q1fx3, amrex::Gpu::HostVector< amrex::Real > &h_avg_q2fx3, amrex::Gpu::HostVector< amrex::Real > &h_avg_diss) |

| void | derive_stress_profiles_stag (amrex::Gpu::HostVector< amrex::Real > &h_avg_tau11, amrex::Gpu::HostVector< amrex::Real > &h_avg_tau12, amrex::Gpu::HostVector< amrex::Real > &h_avg_tau13, amrex::Gpu::HostVector< amrex::Real > &h_avg_tau22, amrex::Gpu::HostVector< amrex::Real > &h_avg_tau23, amrex::Gpu::HostVector< amrex::Real > &h_avg_tau33, amrex::Gpu::HostVector< amrex::Real > &h_avg_hfx3, amrex::Gpu::HostVector< amrex::Real > &h_avg_q1fx3, amrex::Gpu::HostVector< amrex::Real > &h_avg_q2fx3, amrex::Gpu::HostVector< amrex::Real > &h_avg_diss) |

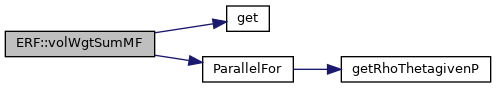

| amrex::Real | volWgtSumMF (int lev, const amrex::MultiFab &mf, int comp, const amrex::MultiFab &dJ, const amrex::MultiFab &mfx, const amrex::MultiFab &mfy, bool finemask, bool local=true) |

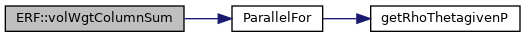

| void | volWgtColumnSum (int lev, const amrex::MultiFab &mf, int comp, amrex::MultiFab &mf_2d, const amrex::MultiFab &dJ) |

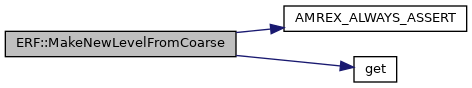

| void | MakeNewLevelFromCoarse (int lev, amrex::Real time, const amrex::BoxArray &ba, const amrex::DistributionMapping &dm) override |

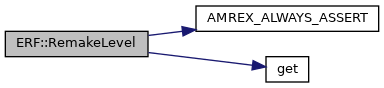

| void | RemakeLevel (int lev, amrex::Real time, const amrex::BoxArray &ba, const amrex::DistributionMapping &dm) override |

| void | ClearLevel (int lev) override |

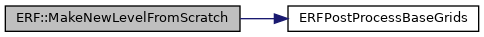

| void | MakeNewLevelFromScratch (int lev, amrex::Real time, const amrex::BoxArray &ba, const amrex::DistributionMapping &dm) override |

| amrex::Real | estTimeStep (int lev, long &dt_fast_ratio) const |

| void | advance_dycore (int level, amrex::Vector< amrex::MultiFab > &state_old, amrex::Vector< amrex::MultiFab > &state_new, amrex::MultiFab &xvel_old, amrex::MultiFab &yvel_old, amrex::MultiFab &zvel_old, amrex::MultiFab &xvel_new, amrex::MultiFab &yvel_new, amrex::MultiFab &zvel_new, amrex::MultiFab &source, amrex::MultiFab &xmom_src, amrex::MultiFab &ymom_src, amrex::MultiFab &zmom_src, amrex::MultiFab &buoyancy, amrex::Geometry fine_geom, amrex::Real dt, amrex::Real time) |

| void | advance_microphysics (int lev, amrex::MultiFab &cons_in, const amrex::Real &dt_advance, const int &iteration, const amrex::Real &time) |

| void | advance_lsm (int lev, amrex::MultiFab &cons_in, amrex::MultiFab &xvel_in, amrex::MultiFab &yvel_in, const amrex::Real &dt_advance) |

| void | advance_radiation (int lev, amrex::MultiFab &cons_in, const amrex::Real &dt_advance) |

| void | build_fine_mask (int lev, amrex::MultiFab &fine_mask) |

| void | MakeHorizontalAverages () |

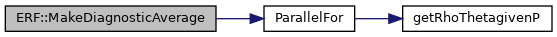

| void | MakeDiagnosticAverage (amrex::Vector< amrex::Real > &h_havg, amrex::MultiFab &S, int n) |

| void | derive_upwp (amrex::Vector< amrex::Real > &h_havg) |

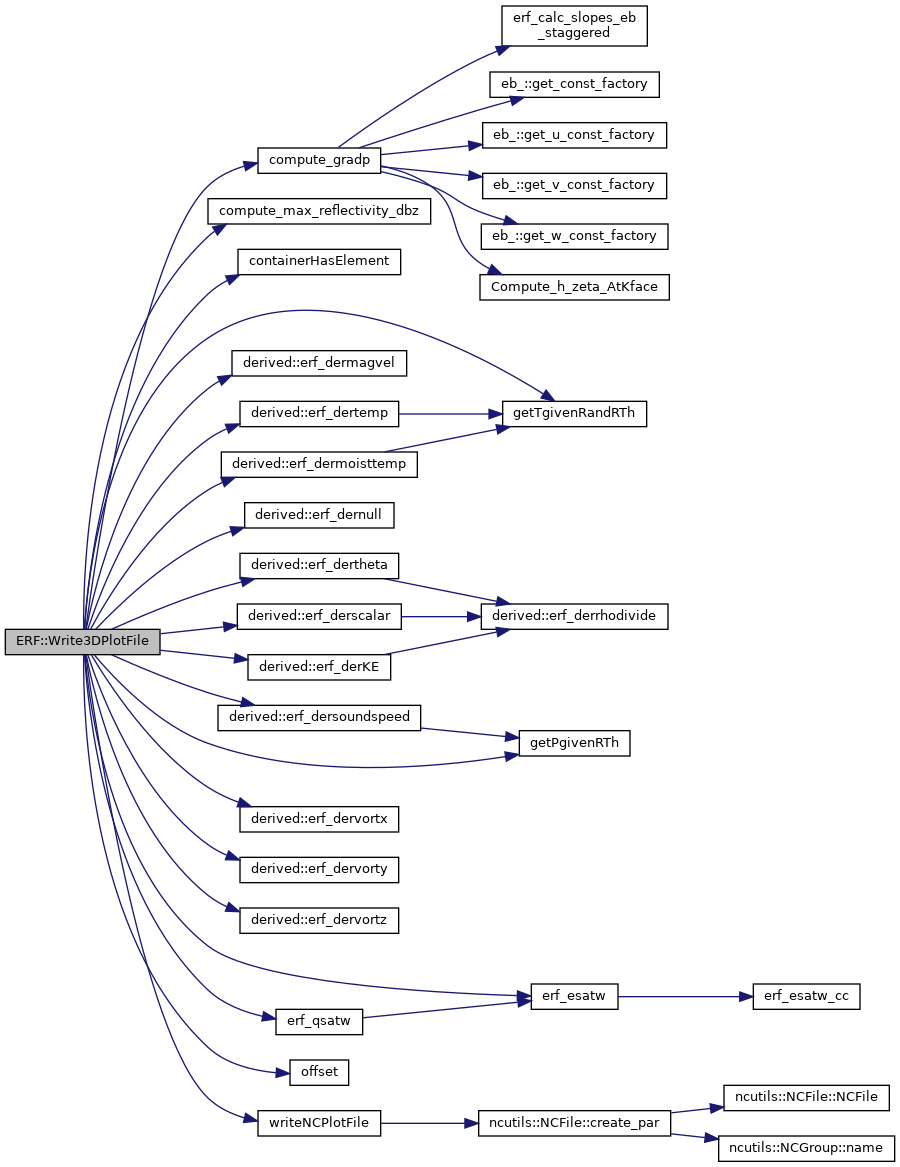

| void | Write3DPlotFile (int which, PlotFileType plotfile_type, amrex::Vector< std::string > plot_var_names) |

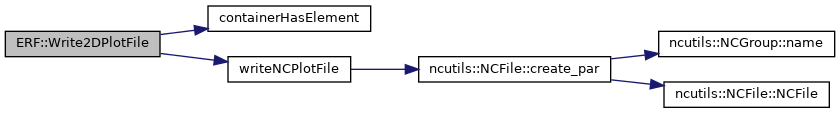

| void | Write2DPlotFile (int which, PlotFileType plotfile_type, amrex::Vector< std::string > plot_var_names) |

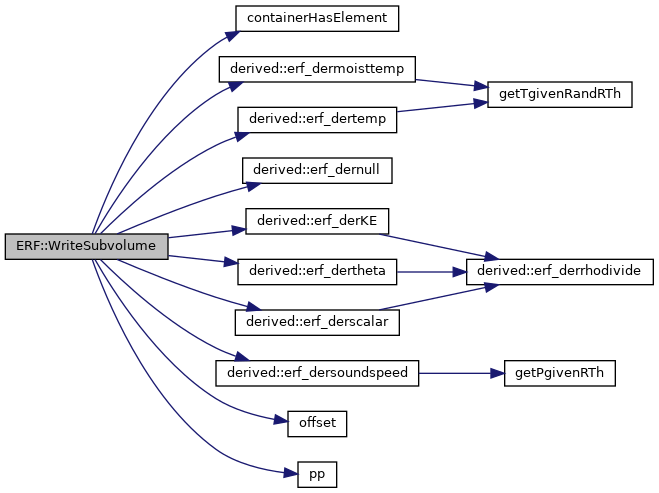

| void | WriteSubvolume (int isub, amrex::Vector< std::string > subvol_var_names) |

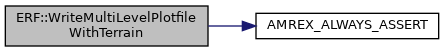

| void | WriteMultiLevelPlotfileWithTerrain (const std::string &plotfilename, int nlevels, const amrex::Vector< const amrex::MultiFab * > &mf, const amrex::Vector< const amrex::MultiFab * > &mf_nd, const amrex::Vector< std::string > &varnames, const amrex::Vector< amrex::Geometry > &my_geom, amrex::Real time, const amrex::Vector< int > &level_steps, const amrex::Vector< amrex::IntVect > &my_ref_ratio, const std::string &versionName="HyperCLaw-V1.1", const std::string &levelPrefix="Level_", const std::string &mfPrefix="Cell", const amrex::Vector< std::string > &extra_dirs=amrex::Vector< std::string >()) const |

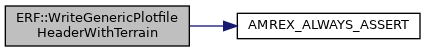

| void | WriteGenericPlotfileHeaderWithTerrain (std::ostream &HeaderFile, int nlevels, const amrex::Vector< amrex::BoxArray > &bArray, const amrex::Vector< std::string > &varnames, const amrex::Vector< amrex::Geometry > &my_geom, amrex::Real time, const amrex::Vector< int > &level_steps, const amrex::Vector< amrex::IntVect > &my_ref_ratio, const std::string &versionName, const std::string &levelPrefix, const std::string &mfPrefix) const |

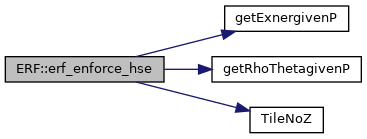

| void | erf_enforce_hse (int lev, amrex::MultiFab &dens, amrex::MultiFab &pres, amrex::MultiFab &pi, amrex::MultiFab &th, amrex::MultiFab &qv, std::unique_ptr< amrex::MultiFab > &z_cc) |

| void | init_from_input_sounding (int lev) |

| void | init_immersed_forcing (int lev) |

| void | input_sponge (int lev) |

| void | init_from_hse (int lev) |

| void | init_thin_body (int lev, const amrex::BoxArray &ba, const amrex::DistributionMapping &dm) |

| void | FillForecastStateMultiFabs (const int lev, const std::string &filename, const std::unique_ptr< amrex::MultiFab > &z_phys_nd, amrex::Vector< amrex::Vector< amrex::MultiFab >> &forecast_state) |

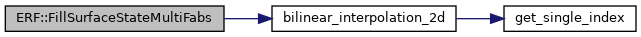

| void | FillSurfaceStateMultiFabs (const int lev, const std::string &filename, amrex::Vector< amrex::MultiFab > &surface_state) |

| void | WeatherDataInterpolation (const int nlevs, const amrex::Real time, amrex::Vector< std::unique_ptr< amrex::MultiFab >> &z_phys_nd, bool regrid_forces_file_read) |

| void | SurfaceDataInterpolation (const int nlevs, const amrex::Real time, amrex::Vector< std::unique_ptr< amrex::MultiFab >> &z_phys_nd, bool regrid_forces_file_read) |

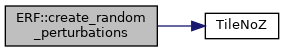

| void | create_random_perturbations (const int lev, amrex::MultiFab &cons_pert, amrex::MultiFab &xvel_pert, amrex::MultiFab &yvel_pert, amrex::MultiFab &zvel_pert) |

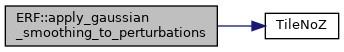

| void | apply_gaussian_smoothing_to_perturbations (const int lev, amrex::MultiFab &cons_pert, amrex::MultiFab &xvel_pert, amrex::MultiFab &yvel_pert, amrex::MultiFab &zvel_pert) |

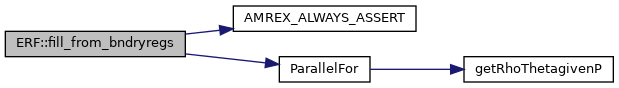

| void | fill_from_bndryregs (const amrex::Vector< amrex::MultiFab * > &mfs, amrex::Real time) |

| void | MakeEBGeometry () |

| void | make_eb_box () |

| void | make_eb_regular () |

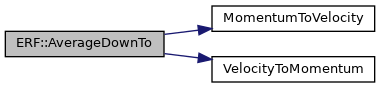

| void | AverageDownTo (int crse_lev, int scomp, int ncomp) |

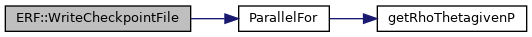

| void | WriteCheckpointFile () const |

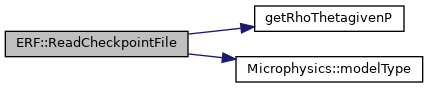

| void | ReadCheckpointFile () |

| void | ReadCheckpointFileSurfaceLayer () |

| void | init_zphys (int lev, amrex::Real elapsed_time) |

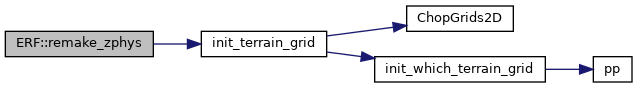

| void | remake_zphys (int lev, std::unique_ptr< amrex::MultiFab > &temp_zphys_nd) |

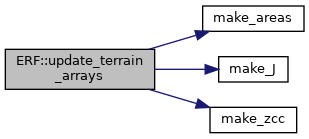

| void | update_terrain_arrays (int lev) |

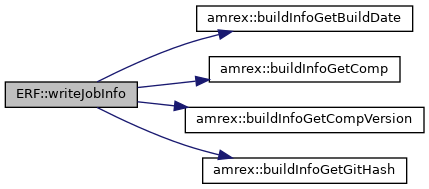

| void | writeJobInfo (const std::string &dir) const |

Static Public Member Functions | |

| static bool | is_it_time_for_action (int nstep, amrex::Real time, amrex::Real dt, int action_interval, amrex::Real action_per) |

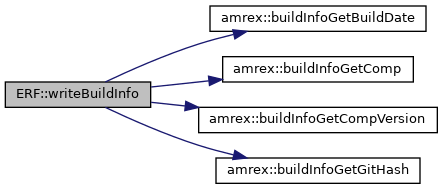

| static void | writeBuildInfo (std::ostream &os) |

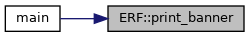

| static void | print_banner (MPI_Comm, std::ostream &) |

| static void | print_usage (MPI_Comm, std::ostream &) |

| static void | print_error (MPI_Comm, const std::string &msg) |

| static void | print_summary (std::ostream &) |

| static void | print_tpls (std::ostream &) |

Public Attributes | |

| amrex::Vector< std::array< amrex::Real, 2 > > | hurricane_track_xy |

| amrex::Vector< std::array< amrex::Real, 2 > > | hurricane_eye_track_xy |

| amrex::Vector< std::array< amrex::Real, 2 > > | hurricane_eye_track_latlon |

| amrex::Vector< std::array< amrex::Real, 2 > > | hurricane_maxvel_vs_time |

| amrex::Vector< std::array< amrex::Real, 2 > > | hurricane_minpressure_vs_time |

| amrex::Vector< std::array< amrex::Real, 2 > > | hurricane_tracker_circle |

| amrex::Vector< amrex::MultiFab > | weather_forecast_data_1 |

| amrex::Vector< amrex::MultiFab > | weather_forecast_data_2 |

| amrex::Vector< amrex::Vector< amrex::MultiFab > > | forecast_state_1 |

| amrex::Vector< amrex::Vector< amrex::MultiFab > > | forecast_state_2 |

| amrex::Vector< amrex::Vector< amrex::MultiFab > > | forecast_state_interp |

| amrex::Vector< amrex::MultiFab > | surface_state_1 |

| amrex::Vector< amrex::MultiFab > | surface_state_2 |

| amrex::Vector< amrex::MultiFab > | surface_state_interp |

| std::string | pp_prefix {"erf"} |

Private Member Functions | |

| void | ReadParameters () |

| void | ParameterSanityChecks () |

| void | AverageDown () |

| void | update_diffusive_arrays (int lev, const amrex::BoxArray &ba, const amrex::DistributionMapping &dm) |

| void | Construct_ERFFillPatchers (int lev) |

| void | Define_ERFFillPatchers (int lev) |

| void | init1DArrays () |

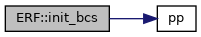

| void | init_bcs () |

| void | init_phys_bcs (bool &rho_read, bool &read_prim_theta) |

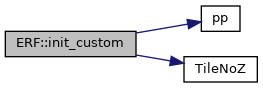

| void | init_custom (int lev) |

| void | init_stuff (int lev, const amrex::BoxArray &ba, const amrex::DistributionMapping &dm, amrex::Vector< amrex::MultiFab > &lev_new, amrex::Vector< amrex::MultiFab > &lev_old, amrex::MultiFab &tmp_base_state, std::unique_ptr< amrex::MultiFab > &tmp_zphys_nd) |

| void | turbPert_update (const int lev, const amrex::Real dt) |

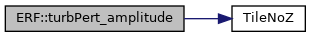

| void | turbPert_amplitude (const int lev) |

| void | initialize_integrator (int lev, amrex::MultiFab &cons_mf, amrex::MultiFab &vel_mf) |

| void | make_physbcs (int lev) |

| void | initializeMicrophysics (const int &) |

| void | FillPatchCrseLevel (int lev, amrex::Real time, const amrex::Vector< amrex::MultiFab * > &mfs_vel, bool cons_only=false) |

| void | FillPatchFineLevel (int lev, amrex::Real time, const amrex::Vector< amrex::MultiFab * > &mfs_vel, const amrex::Vector< amrex::MultiFab * > &mfs_mom, const amrex::MultiFab &old_base_state, const amrex::MultiFab &new_base_state, bool fillset=true, bool cons_only=false) |

| void | FillIntermediatePatch (int lev, amrex::Real time, const amrex::Vector< amrex::MultiFab * > &mfs_vel, const amrex::Vector< amrex::MultiFab * > &mfs_mom, int ng_cons, int ng_vel, bool cons_only, int icomp_cons, int ncomp_cons) |

| void | FillCoarsePatch (int lev, amrex::Real time) |

| void | timeStep (int lev, amrex::Real time, int iteration) |

| void | Advance (int lev, amrex::Real time, amrex::Real dt_lev, int iteration, int ncycle) |

| void | initHSE () |

| Initialize HSE. More... | |

| void | initHSE (int lev) |

| void | initRayleigh () |

| Initialize Rayleigh damping profiles. More... | |

| void | initSponge () |

| Initialize sponge profiles. More... | |

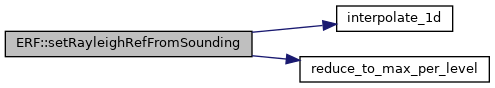

| void | setRayleighRefFromSounding (bool restarting) |

| Set Rayleigh mean profiles from input sounding. More... | |

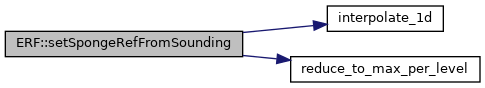

| void | setSpongeRefFromSounding (bool restarting) |

| Set sponge mean profiles from input sounding. More... | |

| void | ComputeDt (int step=-1) |

| std::string | PlotFileName (int lev) const |

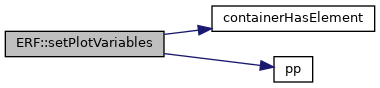

| void | setPlotVariables (const std::string &pp_plot_var_names, amrex::Vector< std::string > &plot_var_names) |

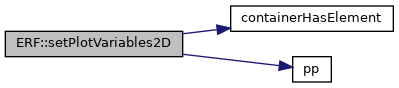

| void | setPlotVariables2D (const std::string &pp_plot_var_names, amrex::Vector< std::string > &plot_var_names) |

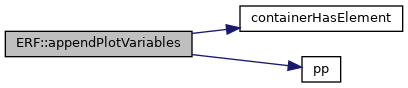

| void | appendPlotVariables (const std::string &pp_plot_var_names, amrex::Vector< std::string > &plot_var_names) |

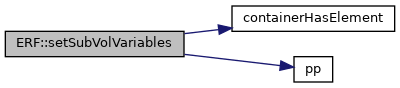

| void | setSubVolVariables (const std::string &pp_subvol_var_names, amrex::Vector< std::string > &subvol_var_names) |

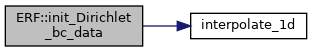

| void | init_Dirichlet_bc_data (const std::string input_file) |

| void | InitializeFromFile () |

| void | InitializeLevelFromData (int lev, const amrex::MultiFab &initial_data) |

| void | post_update (amrex::MultiFab &state_mf, amrex::Real time, const amrex::Geometry &geom) |

| void | fill_rhs (amrex::MultiFab &rhs_mf, const amrex::MultiFab &state_mf, amrex::Real time, const amrex::Geometry &geom) |

| void | init_geo_wind_profile (const std::string input_file, amrex::Vector< amrex::Real > &u_geos, amrex::Gpu::DeviceVector< amrex::Real > &u_geos_d, amrex::Vector< amrex::Real > &v_geos, amrex::Gpu::DeviceVector< amrex::Real > &v_geos_d, const amrex::Geometry &lgeom, const amrex::Vector< amrex::Real > &zlev_stag) |

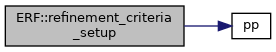

| void | refinement_criteria_setup () |

| AMREX_FORCE_INLINE amrex::YAFluxRegister * | getAdvFluxReg (int lev) |

| AMREX_FORCE_INLINE std::ostream & | DataLog (int i) |

| AMREX_FORCE_INLINE std::ostream & | DerDataLog (int i) |

| AMREX_FORCE_INLINE int | NumDataLogs () noexcept |

| AMREX_FORCE_INLINE int | NumDerDataLogs () noexcept |

| AMREX_FORCE_INLINE std::ostream & | SamplePointLog (int i) |

| AMREX_FORCE_INLINE int | NumSamplePointLogs () noexcept |

| AMREX_FORCE_INLINE std::ostream & | SampleLineLog (int i) |

| AMREX_FORCE_INLINE int | NumSampleLineLogs () noexcept |

| amrex::IntVect & | SamplePoint (int i) |

| AMREX_FORCE_INLINE int | NumSamplePoints () noexcept |

| amrex::IntVect & | SampleLine (int i) |

| AMREX_FORCE_INLINE int | NumSampleLines () noexcept |

| void | setRecordDataInfo (int i, const std::string &filename) |

| void | setRecordDerDataInfo (int i, const std::string &filename) |

| void | setRecordEnergyDataInfo (int i, const std::string &filename) |

| void | setRecordSamplePointInfo (int i, int lev, amrex::IntVect &cell, const std::string &filename) |

| void | setRecordSampleLineInfo (int i, int lev, amrex::IntVect &cell, const std::string &filename) |

| std::string | DataLogName (int i) const noexcept |

| The filename of the ith datalog file. More... | |

| std::string | DerDataLogName (int i) const noexcept |

| std::string | SamplePointLogName (int i) const noexcept |

| The filename of the ith sampleptlog file. More... | |

| std::string | SampleLineLogName (int i) const noexcept |

| The filename of the ith samplelinelog file. More... | |

| eb_ const & | get_eb (int lev) const noexcept |

| amrex::EBFArrayBoxFactory const & | EBFactory (int lev) const noexcept |

Static Private Member Functions | |

| static amrex::Vector< std::string > | PlotFileVarNames (amrex::Vector< std::string > plot_var_names) |

| static void | GotoNextLine (std::istream &is) |

| static AMREX_FORCE_INLINE int | ComputeGhostCells (const SolverChoice &sc) |

| static amrex::Real | getCPUTime () |

| static int | nghost_eb_basic () |

| static int | nghost_eb_volume () |

| static int | nghost_eb_full () |

Private Attributes | |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | lat_m |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | lon_m |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | sinPhi_m |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | cosPhi_m |

| InputSoundingData | input_sounding_data |

| InputSpongeData | input_sponge_data |

| amrex::Vector< amrex::Gpu::DeviceVector< amrex::Real > > | xvel_bc_data |

| amrex::Vector< amrex::Gpu::DeviceVector< amrex::Real > > | yvel_bc_data |

| amrex::Vector< amrex::Gpu::DeviceVector< amrex::Real > > | zvel_bc_data |

| amrex::Vector< amrex::Gpu::DeviceVector< amrex::Real > > | th_bc_data |

| std::unique_ptr< ProblemBase > | prob = nullptr |

| amrex::Vector< int > | num_boxes_at_level |

| amrex::Vector< int > | num_files_at_level |

| amrex::Vector< amrex::Vector< amrex::Box > > | boxes_at_level |

| amrex::Vector< int > | istep |

| amrex::Vector< int > | nsubsteps |

| amrex::Vector< amrex::Real > | t_new |

| amrex::Vector< amrex::Real > | t_old |

| amrex::Vector< amrex::Real > | dt |

| amrex::Vector< long > | dt_mri_ratio |

| amrex::Vector< amrex::Vector< amrex::MultiFab > > | vars_new |

| amrex::Vector< amrex::Vector< amrex::MultiFab > > | vars_old |

| amrex::Vector< amrex::Vector< amrex::MultiFab > > | gradp |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | vel_t_avg |

| amrex::Vector< amrex::Real > | t_avg_cnt |

| amrex::Vector< std::unique_ptr< MRISplitIntegrator< amrex::Vector< amrex::MultiFab > > > > | mri_integrator_mem |

| amrex::Vector< amrex::MultiFab > | pp_inc |

| amrex::Vector< amrex::MultiFab > | lagged_delta_rt |

| amrex::Vector< amrex::MultiFab > | avg_xmom |

| amrex::Vector< amrex::MultiFab > | avg_ymom |

| amrex::Vector< amrex::MultiFab > | avg_zmom |

| amrex::Vector< std::unique_ptr< ERFPhysBCFunct_cons > > | physbcs_cons |

| amrex::Vector< std::unique_ptr< ERFPhysBCFunct_u > > | physbcs_u |

| amrex::Vector< std::unique_ptr< ERFPhysBCFunct_v > > | physbcs_v |

| amrex::Vector< std::unique_ptr< ERFPhysBCFunct_w > > | physbcs_w |

| amrex::Vector< std::unique_ptr< ERFPhysBCFunct_base > > | physbcs_base |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | Theta_prim |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | Qv_prim |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | Qr_prim |

| amrex::Vector< amrex::MultiFab > | rU_old |

| amrex::Vector< amrex::MultiFab > | rU_new |

| amrex::Vector< amrex::MultiFab > | rV_old |

| amrex::Vector< amrex::MultiFab > | rV_new |

| amrex::Vector< amrex::MultiFab > | rW_old |

| amrex::Vector< amrex::MultiFab > | rW_new |

| amrex::Vector< amrex::MultiFab > | zmom_crse_rhs |

| std::unique_ptr< Microphysics > | micro |

| amrex::Vector< amrex::Vector< amrex::MultiFab * > > | qmoist |

| LandSurface | lsm |

| amrex::Vector< std::string > | lsm_data_name |

| amrex::Vector< amrex::Vector< amrex::MultiFab * > > | lsm_data |

| amrex::Vector< std::string > | lsm_flux_name |

| amrex::Vector< amrex::Vector< amrex::MultiFab * > > | lsm_flux |

| amrex::Vector< std::unique_ptr< IRadiation > > | rad |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | qheating_rates |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | rad_fluxes |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | sw_lw_fluxes |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | solar_zenith |

| bool | plot_rad = false |

| int | rad_datalog_int = -1 |

| int | cf_width {0} |

| int | cf_set_width {0} |

| amrex::Vector< ERFFillPatcher > | FPr_c |

| amrex::Vector< ERFFillPatcher > | FPr_u |

| amrex::Vector< ERFFillPatcher > | FPr_v |

| amrex::Vector< ERFFillPatcher > | FPr_w |

| amrex::Vector< amrex::Vector< std::unique_ptr< amrex::MultiFab > > > | Tau |

| amrex::Vector< amrex::Vector< std::unique_ptr< amrex::MultiFab > > > | Tau_corr |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | eddyDiffs_lev |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | SmnSmn_lev |

| amrex::Vector< amrex::Vector< std::unique_ptr< amrex::MultiFab > > > | sst_lev |

| amrex::Vector< amrex::Vector< std::unique_ptr< amrex::MultiFab > > > | tsk_lev |

| amrex::Vector< amrex::Vector< std::unique_ptr< amrex::iMultiFab > > > | lmask_lev |

| amrex::Vector< amrex::Vector< std::unique_ptr< amrex::iMultiFab > > > | land_type_lev |

| amrex::Vector< amrex::Vector< std::unique_ptr< amrex::iMultiFab > > > | soil_type_lev |

| amrex::Vector< amrex::Vector< std::unique_ptr< amrex::MultiFab > > > | urb_frac_lev |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | SFS_hfx1_lev |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | SFS_hfx2_lev |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | SFS_hfx3_lev |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | SFS_diss_lev |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | SFS_q1fx1_lev |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | SFS_q1fx2_lev |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | SFS_q1fx3_lev |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | SFS_q2fx3_lev |

| amrex::Vector< amrex::Vector< amrex::Real > > | zlevels_stag |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | z_phys_nd |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | z_phys_cc |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | detJ_cc |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | ax |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | ay |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | az |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | z_phys_nd_src |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | z_phys_cc_src |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | detJ_cc_src |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | ax_src |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | ay_src |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | az_src |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | z_phys_nd_new |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | detJ_cc_new |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | z_t_rk |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | terrain_blanking |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | walldist |

| amrex::Vector< amrex::Vector< std::unique_ptr< amrex::MultiFab > > > | mapfac |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | fine_mask |

| amrex::Vector< amrex::Vector< amrex::Real > > | stretched_dz_h |

| amrex::Vector< amrex::Gpu::DeviceVector< amrex::Real > > | stretched_dz_d |

| amrex::Vector< amrex::MultiFab > | base_state |

| amrex::Vector< amrex::MultiFab > | base_state_new |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | Hwave |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | Lwave |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | Hwave_onegrid |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | Lwave_onegrid |

| bool | finished_wave = false |

| amrex::Vector< amrex::YAFluxRegister * > | advflux_reg |

| amrex::Vector< amrex::BCRec > | domain_bcs_type |

| amrex::Gpu::DeviceVector< amrex::BCRec > | domain_bcs_type_d |

| amrex::Array< std::string, 2 *AMREX_SPACEDIM > | domain_bc_type |

| amrex::Array< amrex::Array< amrex::Real, AMREX_SPACEDIM *2 >, AMREX_SPACEDIM+NBCVAR_max > | m_bc_extdir_vals |

| amrex::Array< amrex::Array< amrex::Real, AMREX_SPACEDIM *2 >, AMREX_SPACEDIM+NBCVAR_max > | m_bc_neumann_vals |

| amrex::GpuArray< ERF_BC, AMREX_SPACEDIM *2 > | phys_bc_type |

| amrex::Vector< std::unique_ptr< amrex::iMultiFab > > | xflux_imask |

| amrex::Vector< std::unique_ptr< amrex::iMultiFab > > | yflux_imask |

| amrex::Vector< std::unique_ptr< amrex::iMultiFab > > | zflux_imask |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | thin_xforce |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | thin_yforce |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | thin_zforce |

| amrex::Vector< int > | last_subvol_step |

| amrex::Vector< amrex::Real > | last_subvol_time |

| const int | datwidth = 14 |

| const int | datprecision = 6 |

| const int | timeprecision = 13 |

| int | max_step = -1 |

| bool | use_datetime = false |

| const std::string | datetime_format = "%Y-%m-%d %H:%M:%S" |

| std::string | restart_chkfile = "" |

| amrex::Vector< amrex::Real > | fixed_dt |

| amrex::Vector< amrex::Real > | fixed_fast_dt |

| int | regrid_int = -1 |

| bool | regrid_level_0_on_restart = false |

| std::string | plot3d_file_1 {"plt_1_"} |

| std::string | plot3d_file_2 {"plt_2_"} |

| std::string | plot2d_file_1 {"plt2d_1_"} |

| std::string | plot2d_file_2 {"plt2d_2_"} |

| std::string | subvol_file {"subvol"} |

| bool | m_expand_plotvars_to_unif_rr = false |

| int | m_plot3d_int_1 = -1 |

| int | m_plot3d_int_2 = -1 |

| int | m_plot2d_int_1 = -1 |

| int | m_plot2d_int_2 = -1 |

| amrex::Vector< int > | m_subvol_int |

| amrex::Vector< amrex::Real > | m_subvol_per |

| amrex::Real | m_plot3d_per_1 = -1.0 |

| amrex::Real | m_plot3d_per_2 = -1.0 |

| amrex::Real | m_plot2d_per_1 = -1.0 |

| amrex::Real | m_plot2d_per_2 = -1.0 |

| bool | m_plot_face_vels = false |

| bool | plot_lsm = false |

| int | profile_int = -1 |

| bool | destag_profiles = true |

| std::string | check_file {"chk"} |

| int | m_check_int = -1 |

| amrex::Real | m_check_per = -1.0 |

| amrex::Vector< std::string > | subvol3d_var_names |

| amrex::Vector< std::string > | plot3d_var_names_1 |

| amrex::Vector< std::string > | plot3d_var_names_2 |

| amrex::Vector< std::string > | plot2d_var_names_1 |

| amrex::Vector< std::string > | plot2d_var_names_2 |

| const amrex::Vector< std::string > | cons_names |

| const amrex::Vector< std::string > | derived_names |

| const amrex::Vector< std::string > | derived_names_2d |

| const amrex::Vector< std::string > | derived_subvol_names {"soundspeed", "temp", "theta", "KE", "scalar"} |

| TurbulentPerturbation | turbPert |

| int | file_name_digits = 5 |

| bool | use_real_time_in_pltname = false |

| int | real_width {0} |

| bool | real_extrap_w {true} |

| bool | metgrid_debug_quiescent {false} |

| bool | metgrid_debug_isothermal {false} |

| bool | metgrid_debug_dry {false} |

| bool | metgrid_debug_psfc {false} |

| bool | metgrid_debug_msf {false} |

| bool | metgrid_interp_theta {false} |

| bool | metgrid_basic_linear {false} |

| bool | metgrid_use_below_sfc {true} |

| bool | metgrid_use_sfc {true} |

| bool | metgrid_retain_sfc {false} |

| amrex::Real | metgrid_proximity {500.0} |

| int | metgrid_order {2} |

| int | metgrid_force_sfc_k {6} |

| amrex::Vector< amrex::BoxArray > | ba1d |

| amrex::Vector< amrex::BoxArray > | ba2d |

| std::unique_ptr< amrex::MultiFab > | mf_C1H |

| std::unique_ptr< amrex::MultiFab > | mf_C2H |

| std::unique_ptr< amrex::MultiFab > | mf_MUB |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | mf_PSFC |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | rhotheta_src |

| amrex::Vector< std::unique_ptr< amrex::MultiFab > > | rhoqt_src |

| amrex::Vector< amrex::Vector< amrex::Real > > | h_w_subsid |

| amrex::Vector< amrex::Gpu::DeviceVector< amrex::Real > > | d_w_subsid |

| amrex::Vector< amrex::Vector< amrex::Real > > | h_u_geos |

| amrex::Vector< amrex::Gpu::DeviceVector< amrex::Real > > | d_u_geos |

| amrex::Vector< amrex::Vector< amrex::Real > > | h_v_geos |

| amrex::Vector< amrex::Gpu::DeviceVector< amrex::Real > > | d_v_geos |

| amrex::Vector< amrex::Vector< amrex::Vector< amrex::Real > > > | h_rayleigh_ptrs |

| amrex::Vector< amrex::Vector< amrex::Vector< amrex::Real > > > | h_sponge_ptrs |

| amrex::Vector< amrex::Vector< amrex::Real > > | h_sinesq_ptrs |

| amrex::Vector< amrex::Vector< amrex::Real > > | h_sinesq_stag_ptrs |

| amrex::Vector< amrex::Vector< amrex::Gpu::DeviceVector< amrex::Real > > > | d_rayleigh_ptrs |

| amrex::Vector< amrex::Vector< amrex::Gpu::DeviceVector< amrex::Real > > > | d_sponge_ptrs |

| amrex::Vector< amrex::Gpu::DeviceVector< amrex::Real > > | d_sinesq_ptrs |

| amrex::Vector< amrex::Gpu::DeviceVector< amrex::Real > > | d_sinesq_stag_ptrs |

| amrex::Vector< amrex::Real > | h_havg_density |

| amrex::Vector< amrex::Real > | h_havg_temperature |

| amrex::Vector< amrex::Real > | h_havg_pressure |

| amrex::Vector< amrex::Real > | h_havg_qv |

| amrex::Vector< amrex::Real > | h_havg_qc |

| amrex::Gpu::DeviceVector< amrex::Real > | d_havg_density |

| amrex::Gpu::DeviceVector< amrex::Real > | d_havg_temperature |

| amrex::Gpu::DeviceVector< amrex::Real > | d_havg_pressure |

| amrex::Gpu::DeviceVector< amrex::Real > | d_havg_qv |

| amrex::Gpu::DeviceVector< amrex::Real > | d_havg_qc |

| std::unique_ptr< WriteBndryPlanes > | m_w2d = nullptr |

| std::unique_ptr< ReadBndryPlanes > | m_r2d = nullptr |

| std::unique_ptr< SurfaceLayer > | m_SurfaceLayer = nullptr |

| amrex::Vector< std::unique_ptr< ForestDrag > > | m_forest_drag |

| amrex::Vector< amrex::Vector< amrex::BoxArray > > | subdomains |

| amrex::Vector< amrex::Real > | dz_min |

| int | line_sampling_interval = -1 |

| int | plane_sampling_interval = -1 |

| amrex::Real | line_sampling_per = -1.0 |

| amrex::Real | plane_sampling_per = -1.0 |

| std::unique_ptr< LineSampler > | line_sampler = nullptr |

| std::unique_ptr< PlaneSampler > | plane_sampler = nullptr |

| amrex::Vector< std::unique_ptr< std::fstream > > | datalog |

| amrex::Vector< std::unique_ptr< std::fstream > > | der_datalog |

| amrex::Vector< std::unique_ptr< std::fstream > > | tot_e_datalog |

| amrex::Vector< std::string > | datalogname |

| amrex::Vector< std::string > | der_datalogname |

| amrex::Vector< std::string > | tot_e_datalogname |

| amrex::Vector< std::unique_ptr< std::fstream > > | sampleptlog |

| amrex::Vector< std::string > | sampleptlogname |

| amrex::Vector< amrex::IntVect > | samplepoint |

| amrex::Vector< std::unique_ptr< std::fstream > > | samplelinelog |

| amrex::Vector< std::string > | samplelinelogname |

| amrex::Vector< amrex::IntVect > | sampleline |

| amrex::Vector< std::unique_ptr< eb_ > > | eb |

Static Private Attributes | |

| static int | last_plot3d_file_step_1 = -1 |

| static int | last_plot3d_file_step_2 = -1 |

| static int | last_plot2d_file_step_1 = -1 |

| static int | last_plot2d_file_step_2 = -1 |

| static int | last_check_file_step = -1 |

| static amrex::Real | last_plot3d_file_time_1 = 0.0 |

| static amrex::Real | last_plot3d_file_time_2 = 0.0 |

| static amrex::Real | last_plot2d_file_time_1 = 0.0 |

| static amrex::Real | last_plot2d_file_time_2 = 0.0 |

| static amrex::Real | last_check_file_time = 0.0 |

| static bool | plot_file_on_restart = true |

| static amrex::Real | start_time = 0.0 |

| static amrex::Real | stop_time = std::numeric_limits<amrex::Real>::max() |

| static amrex::Real | cfl = 0.8 |

| static amrex::Real | sub_cfl = 1.0 |

| static amrex::Real | init_shrink = 1.0 |

| static amrex::Real | change_max = 1.1 |

| static amrex::Real | dt_max_initial = 2.0e100 |

| static amrex::Real | dt_max = 1.0e9 |

| static int | fixed_mri_dt_ratio = 0 |

| static SolverChoice | solverChoice |

| static int | verbose = 0 |

| static int | mg_verbose = 0 |

| static bool | use_fft = false |

| static int | check_for_nans = 0 |

| static int | sum_interval = -1 |

| static int | pert_interval = -1 |

| static amrex::Real | sum_per = -1.0 |

| static PlotFileType | plotfile3d_type_1 = PlotFileType::None |

| static PlotFileType | plotfile3d_type_2 = PlotFileType::None |

| static PlotFileType | plotfile2d_type_1 = PlotFileType::None |

| static PlotFileType | plotfile2d_type_2 = PlotFileType::None |

| static StateInterpType | interpolation_type |

| static std::string | sponge_type |

| static amrex::Vector< amrex::Vector< std::string > > | nc_init_file = {{""}} |

| static amrex::Vector< amrex::Vector< int > > | have_read_nc_init_file = {{0}} |

| static std::string | nc_bdy_file |

| static std::string | nc_low_file |

| static int | output_1d_column = 0 |

| static int | column_interval = -1 |

| static amrex::Real | column_per = -1.0 |

| static amrex::Real | column_loc_x = 0.0 |

| static amrex::Real | column_loc_y = 0.0 |

| static std::string | column_file_name = "column_data.nc" |

| static int | output_bndry_planes = 0 |

| static int | bndry_output_planes_interval = -1 |

| static amrex::Real | bndry_output_planes_per = -1.0 |

| static amrex::Real | bndry_output_planes_start_time = 0.0 |

| static int | input_bndry_planes = 0 |

| static int | ng_dens_hse |

| static int | ng_pres_hse |

| static amrex::Vector< amrex::AMRErrorTag > | ref_tags |

| static amrex::Real | startCPUTime = 0.0 |

| static amrex::Real | previousCPUTimeUsed = 0.0 |

Detailed Description

Constructor & Destructor Documentation

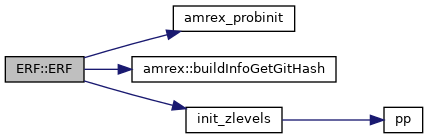

◆ ERF() [1/3]

| ERF::ERF | ( | ) |

◆ ~ERF()

|

overridedefault |

◆ ERF() [2/3]

|

deletenoexcept |

◆ ERF() [3/3]

|

delete |

Member Function Documentation

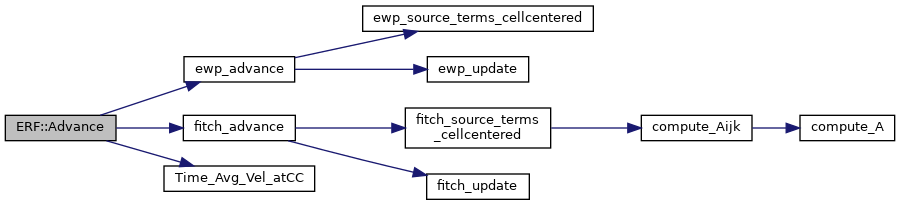

◆ Advance()

|

private |

Function that advances the solution at one level for a single time step – this does some preliminaries then calls erf_advance

- Parameters

-

[in] lev level of refinement (coarsest level is 0) [in] time start time for time advance [in] dt_lev time step for this time advance

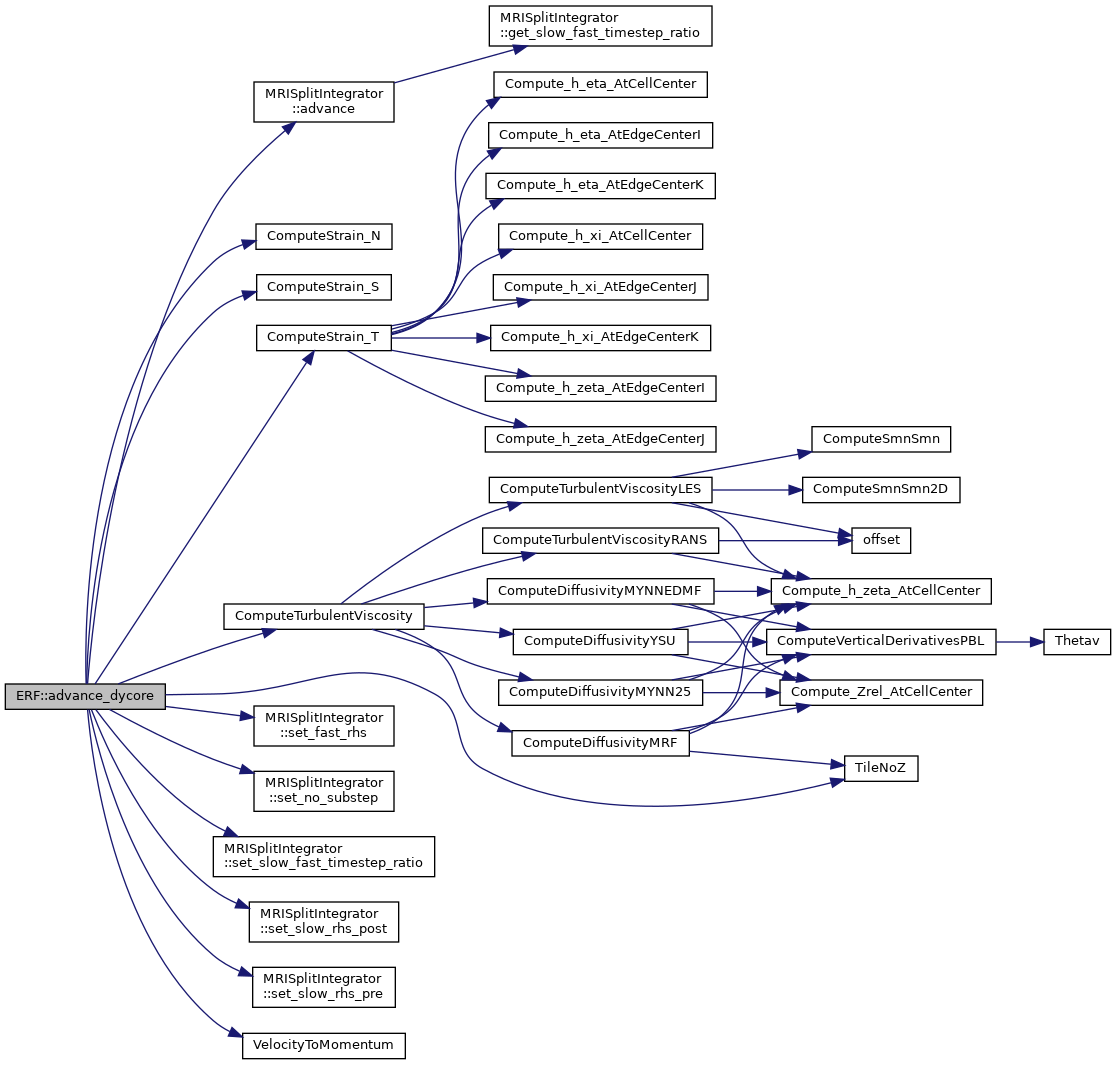

◆ advance_dycore()

| void ERF::advance_dycore | ( | int | level, |

| amrex::Vector< amrex::MultiFab > & | state_old, | ||

| amrex::Vector< amrex::MultiFab > & | state_new, | ||

| amrex::MultiFab & | xvel_old, | ||

| amrex::MultiFab & | yvel_old, | ||

| amrex::MultiFab & | zvel_old, | ||

| amrex::MultiFab & | xvel_new, | ||

| amrex::MultiFab & | yvel_new, | ||

| amrex::MultiFab & | zvel_new, | ||

| amrex::MultiFab & | source, | ||

| amrex::MultiFab & | xmom_src, | ||

| amrex::MultiFab & | ymom_src, | ||

| amrex::MultiFab & | zmom_src, | ||

| amrex::MultiFab & | buoyancy, | ||

| amrex::Geometry | fine_geom, | ||

| amrex::Real | dt, | ||

| amrex::Real | time | ||

| ) |

Function that advances the solution at one level for a single time step – this sets up the multirate time integrator and calls the integrator's advance function

- Parameters

-

[in] level level of refinement (coarsest level is 0) [in] state_old old-time conserved variables [in] state_new new-time conserved variables [in] xvel_old old-time x-component of velocity [in] yvel_old old-time y-component of velocity [in] zvel_old old-time z-component of velocity [in] xvel_new new-time x-component of velocity [in] yvel_new new-time y-component of velocity [in] zvel_new new-time z-component of velocity [in] cc_src source term for conserved variables [in] xmom_src source term for x-momenta [in] ymom_src source term for y-momenta [in] zmom_src source term for z-momenta [in] fine_geom container for geometry information at current level [in] dt_advance time step for this time advance [in] old_time old time for this time advance

◆ advance_lsm()

| void ERF::advance_lsm | ( | int | lev, |

| amrex::MultiFab & | cons_in, | ||

| amrex::MultiFab & | xvel_in, | ||

| amrex::MultiFab & | yvel_in, | ||

| const amrex::Real & | dt_advance | ||

| ) |

◆ advance_microphysics()

| void ERF::advance_microphysics | ( | int | lev, |

| amrex::MultiFab & | cons_in, | ||

| const amrex::Real & | dt_advance, | ||

| const int & | iteration, | ||

| const amrex::Real & | time | ||

| ) |

◆ advance_radiation()

| void ERF::advance_radiation | ( | int | lev, |

| amrex::MultiFab & | cons_in, | ||

| const amrex::Real & | dt_advance | ||

| ) |

◆ appendPlotVariables()

|

private |

◆ apply_gaussian_smoothing_to_perturbations()

| void ERF::apply_gaussian_smoothing_to_perturbations | ( | const int | lev, |

| amrex::MultiFab & | cons_pert, | ||

| amrex::MultiFab & | xvel_pert, | ||

| amrex::MultiFab & | yvel_pert, | ||

| amrex::MultiFab & | zvel_pert | ||

| ) |

◆ AverageDown()

|

private |

◆ AverageDownTo()

| void ERF::AverageDownTo | ( | int | crse_lev, |

| int | scomp, | ||

| int | ncomp | ||

| ) |

◆ build_fine_mask()

| void ERF::build_fine_mask | ( | int | lev, |

| amrex::MultiFab & | fine_mask | ||

| ) |

Helper function for constructing a fine mask, that is, a MultiFab masking coarser data at a lower level by zeroing out covered cells in the fine mask MultiFab we compute.

- Parameters

-

level Fine level index which masks underlying coarser data

◆ check_for_low_temp()

| void ERF::check_for_low_temp | ( | amrex::MultiFab & | S | ) |

◆ check_for_negative_theta()

| void ERF::check_for_negative_theta | ( | amrex::MultiFab & | S | ) |

◆ check_state_for_nans()

| void ERF::check_state_for_nans | ( | amrex::MultiFab const & | S | ) |

◆ check_vels_for_nans()

| void ERF::check_vels_for_nans | ( | amrex::MultiFab const & | xvel, |

| amrex::MultiFab const & | yvel, | ||

| amrex::MultiFab const & | zvel | ||

| ) |

◆ ClearLevel()

|

override |

◆ cloud_fraction()

| Real ERF::cloud_fraction | ( | amrex::Real | time | ) |

◆ compute_divergence()

| void ERF::compute_divergence | ( | int | lev, |

| amrex::MultiFab & | rhs, | ||

| amrex::Array< amrex::MultiFab const *, AMREX_SPACEDIM > | rho0_u_const, | ||

| amrex::Geometry const & | geom_at_lev | ||

| ) |

Project the single-level velocity field to enforce incompressibility Note that the level may or may not be level 0.

◆ ComputeDt()

|

private |

Function that calls estTimeStep for each level

◆ ComputeGhostCells()

|

inlinestaticprivate |

◆ Construct_ERFFillPatchers()

|

private |

◆ create_random_perturbations()

| void ERF::create_random_perturbations | ( | const int | lev, |

| amrex::MultiFab & | cons_pert, | ||

| amrex::MultiFab & | xvel_pert, | ||

| amrex::MultiFab & | yvel_pert, | ||

| amrex::MultiFab & | zvel_pert | ||

| ) |

◆ DataLog()

|

inlineprivate |

◆ DataLogName()

|

inlineprivatenoexcept |

The filename of the ith datalog file.

◆ Define_ERFFillPatchers()

|

private |

◆ DerDataLog()

|

inlineprivate |

◆ DerDataLogName()

|

inlineprivatenoexcept |

◆ derive_diag_profiles()

| void ERF::derive_diag_profiles | ( | amrex::Real | time, |

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_u, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_v, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_w, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_rho, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_th, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_ksgs, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_Kmv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_Khv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_qv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_qc, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_qr, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_wqv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_wqc, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_wqr, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_qi, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_qs, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_qg, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_uu, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_uv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_uw, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_vv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_vw, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_ww, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_uth, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_vth, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_wth, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_thth, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_ku, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_kv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_kw, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_p, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_pu, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_pv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_pw, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_wthv | ||

| ) |

Computes the profiles for diagnostic quantities.

- Parameters

-

h_avg_u Profile for x-velocity on Host h_avg_v Profile for y-velocity on Host h_avg_w Profile for z-velocity on Host h_avg_rho Profile for density on Host h_avg_th Profile for potential temperature on Host h_avg_ksgs Profile for Kinetic Energy on Host h_avg_uu Profile for x-velocity squared on Host h_avg_uv Profile for x-velocity * y-velocity on Host h_avg_uw Profile for x-velocity * z-velocity on Host h_avg_vv Profile for y-velocity squared on Host h_avg_vw Profile for y-velocity * z-velocity on Host h_avg_ww Profile for z-velocity squared on Host h_avg_uth Profile for x-velocity * potential temperature on Host h_avg_uiuiu Profile for u_i*u_i*u triple product on Host h_avg_uiuiv Profile for u_i*u_i*v triple product on Host h_avg_uiuiw Profile for u_i*u_i*w triple product on Host h_avg_p Profile for pressure perturbation on Host h_avg_pu Profile for pressure perturbation * x-velocity on Host h_avg_pv Profile for pressure perturbation * y-velocity on Host h_avg_pw Profile for pressure perturbation * z-velocity on Host

◆ derive_diag_profiles_stag()

| void ERF::derive_diag_profiles_stag | ( | amrex::Real | time, |

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_u, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_v, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_w, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_rho, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_th, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_ksgs, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_Kmv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_Khv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_qv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_qc, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_qr, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_wqv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_wqc, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_wqr, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_qi, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_qs, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_qg, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_uu, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_uv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_uw, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_vv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_vw, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_ww, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_uth, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_vth, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_wth, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_thth, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_ku, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_kv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_kw, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_p, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_pu, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_pv, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_pw, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_wthv | ||

| ) |

Computes the profiles for diagnostic quantities at staggered heights.

- Parameters

-

h_avg_u Profile for x-velocity on Host h_avg_v Profile for y-velocity on Host h_avg_w Profile for z-velocity on Host h_avg_rho Profile for density on Host h_avg_th Profile for potential temperature on Host h_avg_ksgs Profile for Kinetic Energy on Host h_avg_uu Profile for x-velocity squared on Host h_avg_uv Profile for x-velocity * y-velocity on Host h_avg_uw Profile for x-velocity * z-velocity on Host h_avg_vv Profile for y-velocity squared on Host h_avg_vw Profile for y-velocity * z-velocity on Host h_avg_ww Profile for z-velocity squared on Host h_avg_uth Profile for x-velocity * potential temperature on Host h_avg_uiuiu Profile for u_i*u_i*u triple product on Host h_avg_uiuiv Profile for u_i*u_i*v triple product on Host h_avg_uiuiw Profile for u_i*u_i*w triple product on Host h_avg_p Profile for pressure perturbation on Host h_avg_pu Profile for pressure perturbation * x-velocity on Host h_avg_pv Profile for pressure perturbation * y-velocity on Host h_avg_pw Profile for pressure perturbation * z-velocity on Host

◆ derive_stress_profiles()

| void ERF::derive_stress_profiles | ( | amrex::Gpu::HostVector< amrex::Real > & | h_avg_tau11, |

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_tau12, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_tau13, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_tau22, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_tau23, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_tau33, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_hfx3, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_q1fx3, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_q2fx3, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_diss | ||

| ) |

◆ derive_stress_profiles_stag()

| void ERF::derive_stress_profiles_stag | ( | amrex::Gpu::HostVector< amrex::Real > & | h_avg_tau11, |

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_tau12, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_tau13, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_tau22, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_tau23, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_tau33, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_hfx3, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_q1fx3, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_q2fx3, | ||

| amrex::Gpu::HostVector< amrex::Real > & | h_avg_diss | ||

| ) |

◆ derive_upwp()

| void ERF::derive_upwp | ( | amrex::Vector< amrex::Real > & | h_havg | ) |

◆ EBFactory()

|

inlineprivatenoexcept |

◆ erf_enforce_hse()

| void ERF::erf_enforce_hse | ( | int | lev, |

| amrex::MultiFab & | dens, | ||

| amrex::MultiFab & | pres, | ||

| amrex::MultiFab & | pi, | ||

| amrex::MultiFab & | th, | ||

| amrex::MultiFab & | qv, | ||

| std::unique_ptr< amrex::MultiFab > & | z_cc | ||

| ) |

Enforces hydrostatic equilibrium when using terrain.

- Parameters

-

[in] lev Integer specifying the current level [out] dens MultiFab storing base state density [out] pres MultiFab storing base state pressure [out] pi MultiFab storing base state Exner function [in] z_cc Pointer to MultiFab storing cell centered z-coordinates

◆ ERF_shared()

| void ERF::ERF_shared | ( | ) |

◆ ErrorEst()

|

override |

◆ estTimeStep()

| Real ERF::estTimeStep | ( | int | level, |

| long & | dt_fast_ratio | ||

| ) | const |

Function that calls estTimeStep for each level

- Parameters

-

[in] level level of refinement (coarsest level i 0) [out] dt_fast_ratio ratio of slow to fast time step

◆ Evolve()

| void ERF::Evolve | ( | ) |

Referenced by main().

◆ fill_from_bndryregs()

| void ERF::fill_from_bndryregs | ( | const amrex::Vector< amrex::MultiFab * > & | mfs, |

| amrex::Real | time | ||

| ) |

◆ fill_rhs()

|

private |

◆ FillBdyCCVels()

| void ERF::FillBdyCCVels | ( | amrex::Vector< amrex::MultiFab > & | mf_cc_vel, |

| int | levc = 0 |

||

| ) |

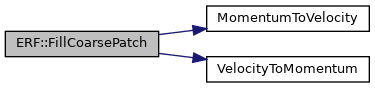

◆ FillCoarsePatch()

|

private |

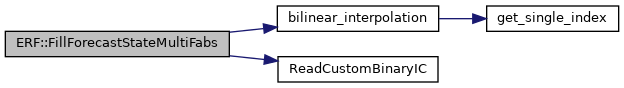

◆ FillForecastStateMultiFabs()

| void ERF::FillForecastStateMultiFabs | ( | const int | lev, |

| const std::string & | filename, | ||

| const std::unique_ptr< amrex::MultiFab > & | z_phys_nd, | ||

| amrex::Vector< amrex::Vector< amrex::MultiFab >> & | forecast_state | ||

| ) |

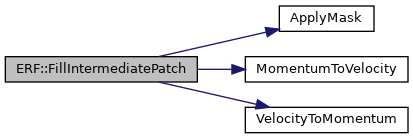

◆ FillIntermediatePatch()

|

private |

◆ FillPatchCrseLevel()

|

private |

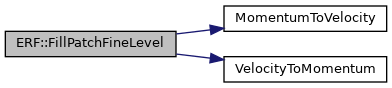

◆ FillPatchFineLevel()

|

private |

◆ FillSurfaceStateMultiFabs()

| void ERF::FillSurfaceStateMultiFabs | ( | const int | lev, |

| const std::string & | filename, | ||

| amrex::Vector< amrex::MultiFab > & | surface_state | ||

| ) |

◆ FindInitialEye()

| bool ERF::FindInitialEye | ( | int | lev, |

| const amrex::MultiFab & | cc_vel, | ||

| const amrex::Real | velmag_threshold, | ||

| amrex::Real & | eye_x, | ||

| amrex::Real & | eye_y | ||

| ) |

◆ get_eb()

|

inlineprivatenoexcept |

◆ getAdvFluxReg()

|

inlineprivate |

◆ getCPUTime()

|

inlinestaticprivate |

◆ GotoNextLine()

|

staticprivate |

◆ HurricaneTracker()

| void ERF::HurricaneTracker | ( | int | lev, |

| amrex::Real | time, | ||

| const amrex::MultiFab & | cc_vel, | ||

| const amrex::Real | velmag_threshold, | ||

| amrex::TagBoxArray * | tags = nullptr |

||

| ) |

◆ ImposeBCsOnPhi()

| void ERF::ImposeBCsOnPhi | ( | int | lev, |

| amrex::MultiFab & | phi, | ||

| const amrex::Box & | subdomain | ||

| ) |

Impose bc's on the pressure that comes out of the solve

◆ init1DArrays()

|

private |

◆ init_bcs()

|

private |

◆ init_custom()

|

private |

Wrapper for custom problem-specific initialization routines that can be defined by the user as they set up a new problem in ERF. This wrapper handles all the overhead of defining the perturbation as well as initializing the random seed if needed.

This wrapper calls a user function to customize initialization on a per-Fab level inside an MFIter loop, so all the MultiFab operations are hidden from the user.

- Parameters

-

lev Integer specifying the current level

◆ init_Dirichlet_bc_data()

|

private |

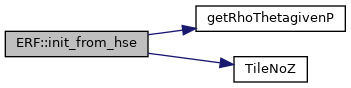

◆ init_from_hse()

| void ERF::init_from_hse | ( | int | lev | ) |

Initialize the background flow to have the calculated HSE density and rho*theta calculated from the HSE pressure. In general, the hydrostatically balanced density and pressure (r_hse and p_hse from base_state) used here may be calculated through a solver path such as:

ERF::initHSE(lev)

- call prob->erf_init_dens_hse(...)

- call Problem::init_isentropic_hse(...), to simultaneously calculate r_hse and p_hse with Newton iteration – assuming constant theta

- save r_hse

- call ERF::enforce_hse(...), calculates p_hse from saved r_hse (redundant, but needed because p_hse is not necessarily calculated by the Problem implementation) and pi_hse and th_hse – note: this pressure does not exactly match the p_hse from before because what is calculated by init_isentropic_hse comes from the EOS whereas what is calculated here comes from the hydro- static equation

- Parameters

-

lev Integer specifying the current level

◆ init_from_input_sounding()

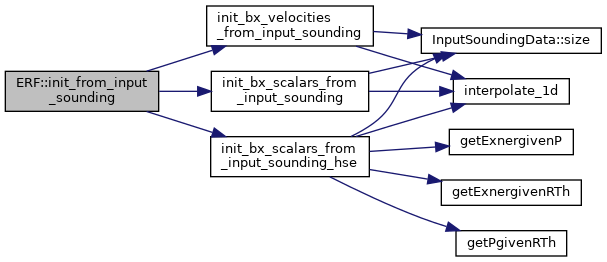

| void ERF::init_from_input_sounding | ( | int | lev | ) |

High level wrapper for initializing scalar and velocity level data from input sounding data.

- Parameters

-

lev Integer specifying the current level

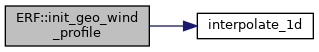

◆ init_geo_wind_profile()

|

private |

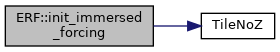

◆ init_immersed_forcing()

| void ERF::init_immersed_forcing | ( | int | lev | ) |

Set velocities in cells that are immersed to be 0 (or a very small number)

- Parameters

-

lev Integer specifying the current level

◆ init_only()

| void ERF::init_only | ( | int | lev, |

| amrex::Real | time | ||

| ) |

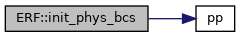

◆ init_phys_bcs()

|

private |

Initializes data structures in the ERF class that specify which boundary conditions we are implementing on each face of the domain.

This function also maps the selected boundary condition types (e.g. Outflow, Inflow, InflowOutflow, Periodic, Dirichlet, ...) to the specific implementation needed for each variable.

Stores this information in both host and device vectors so it is available for GPU kernels.

◆ init_stuff()

|

private |

◆ init_thin_body()

| void ERF::init_thin_body | ( | int | lev, |

| const amrex::BoxArray & | ba, | ||

| const amrex::DistributionMapping & | dm | ||

| ) |

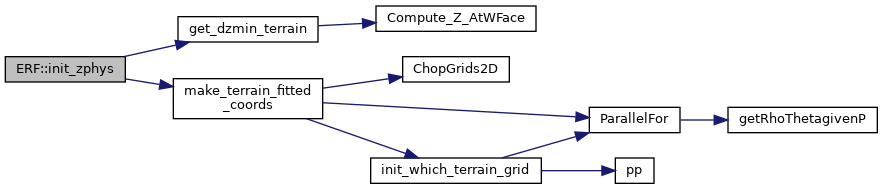

◆ init_zphys()

| void ERF::init_zphys | ( | int | lev, |

| amrex::Real | elapsed_time | ||

| ) |

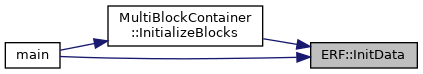

◆ InitData()

| void ERF::InitData | ( | ) |

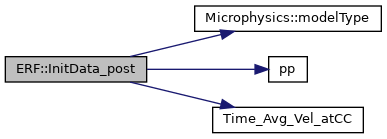

◆ InitData_post()

| void ERF::InitData_post | ( | ) |

◆ InitData_pre()

| void ERF::InitData_pre | ( | ) |

◆ initHSE() [1/2]

|

private |

Initialize HSE.

◆ initHSE() [2/2]

|

private |

Initialize density and pressure base state in hydrostatic equilibrium.

◆ initialize_integrator()

|

private |

◆ InitializeFromFile()

|

private |

◆ InitializeLevelFromData()

|

private |

◆ initializeMicrophysics()

|

private |

- Parameters

-

a_nlevsmax number of AMR levels

◆ initRayleigh()

|

private |

Initialize Rayleigh damping profiles.

Initialization function for host and device vectors used to store averaged quantities when calculating the effects of Rayleigh Damping.

◆ initSponge()

|

private |

Initialize sponge profiles.

Initialization function for host and device vectors used to store the effects of sponge Damping.

◆ input_sponge()

| void ERF::input_sponge | ( | int | lev | ) |

High level wrapper for sponge x and y velocities level data from input sponge data.

- Parameters

-

lev Integer specifying the current level

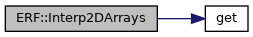

◆ Interp2DArrays()

| void ERF::Interp2DArrays | ( | int | lev, |

| const amrex::BoxArray & | my_ba2d, | ||

| const amrex::DistributionMapping & | my_dm | ||

| ) |

◆ is_it_time_for_action()

|

static |

Helper function which uses the current step number, time, and timestep to determine whether it is time to take an action specified at every interval of timesteps.

- Parameters

-

nstep Timestep number time Current time dtlev Timestep for the current level action_interval Interval in number of timesteps for taking action action_per Interval in simulation time for taking action

◆ make_eb_box()

| void ERF::make_eb_box | ( | ) |

◆ make_eb_regular()

| void ERF::make_eb_regular | ( | ) |

◆ make_physbcs()

|

private |

◆ make_subdomains()

| void ERF::make_subdomains | ( | const amrex::BoxList & | ba, |

| amrex::Vector< amrex::BoxArray > & | bins | ||

| ) |

◆ MakeDiagnosticAverage()

| void ERF::MakeDiagnosticAverage | ( | amrex::Vector< amrex::Real > & | h_havg, |

| amrex::MultiFab & | S, | ||

| int | n | ||

| ) |

◆ MakeEBGeometry()

| void ERF::MakeEBGeometry | ( | ) |

◆ MakeFilename_EyeTracker_latlon()

| std::string ERF::MakeFilename_EyeTracker_latlon | ( | int | nstep | ) |

◆ MakeFilename_EyeTracker_maxvel()

| std::string ERF::MakeFilename_EyeTracker_maxvel | ( | int | nstep | ) |

◆ MakeFilename_EyeTracker_minpressure()

| std::string ERF::MakeFilename_EyeTracker_minpressure | ( | int | nstep | ) |

◆ MakeHorizontalAverages()

| void ERF::MakeHorizontalAverages | ( | ) |

◆ MakeNewLevelFromCoarse()

|

override |

◆ MakeNewLevelFromScratch()

|

override |

◆ MakeVTKFilename()

| std::string ERF::MakeVTKFilename | ( | int | nstep | ) |

◆ MakeVTKFilename_EyeTracker_xy()

| std::string ERF::MakeVTKFilename_EyeTracker_xy | ( | int | nstep | ) |

◆ MakeVTKFilename_TrackerCircle()

| std::string ERF::MakeVTKFilename_TrackerCircle | ( | int | nstep | ) |

◆ nghost_eb_basic()

◆ nghost_eb_full()

◆ nghost_eb_volume()

◆ NumDataLogs()

|

inlineprivatenoexcept |

◆ NumDerDataLogs()

|

inlineprivatenoexcept |

◆ NumSampleLineLogs()

|

inlineprivatenoexcept |

◆ NumSampleLines()

|

inlineprivatenoexcept |

◆ NumSamplePointLogs()

|

inlineprivatenoexcept |

◆ NumSamplePoints()

|

inlineprivatenoexcept |

◆ operator=() [1/2]

◆ operator=() [2/2]

◆ ParameterSanityChecks()

|

private |

◆ PlotFileName()

|

private |

◆ PlotFileVarNames()

|

staticprivate |

◆ poisson_wall_dist()

| void ERF::poisson_wall_dist | ( | int | lev | ) |

Calculate wall distances using the Poisson equation

The zlo boundary is assumed to correspond to the land surface. If there are no boundary walls, then the other use case is to calculate wall distances for immersed boundaries (embedded or thin body).

See Tucker, P. G. (2003). Differential equation-based wall distance computation for DES and RANS. Journal of Computational Physics, 190(1), 229–248. https://doi.org/10.1016/S0021-9991(03)00272-9

◆ post_timestep()

| void ERF::post_timestep | ( | int | nstep, |

| amrex::Real | time, | ||

| amrex::Real | dt_lev | ||

| ) |

◆ post_update()

|

private |

◆ print_banner()

|

static |

Referenced by main().

◆ print_error()

|

static |

◆ print_summary()

|

static |

◆ print_tpls()

|

static |

◆ print_usage()

|

static |

◆ project_initial_velocity()

| void ERF::project_initial_velocity | ( | int | lev, |

| amrex::Real | time, | ||

| amrex::Real | dt | ||

| ) |

Project the single-level velocity field to enforce the anelastic constraint Note that the level may or may not be level 0.

◆ project_momenta()

| void ERF::project_momenta | ( | int | lev, |

| amrex::Real | l_time, | ||

| amrex::Real | l_dt, | ||

| amrex::Vector< amrex::MultiFab > & | vars | ||

| ) |

Project the single-level momenta to enforce the anelastic constraint Note that the level may or may not be level 0.

◆ project_velocity_tb()

| void ERF::project_velocity_tb | ( | int | lev, |

| amrex::Real | dt, | ||

| amrex::Vector< amrex::MultiFab > & | vars | ||

| ) |

Project the single-level velocity field to enforce incompressibility with a thin body

◆ ReadCheckpointFile()

| void ERF::ReadCheckpointFile | ( | ) |

ERF function for reading data from a checkpoint file during restart.

◆ ReadCheckpointFileSurfaceLayer()

| void ERF::ReadCheckpointFileSurfaceLayer | ( | ) |

ERF function for reading additional data for MOST from a checkpoint file during restart.

This is called after the ABLMost object is instantiated.

◆ ReadParameters()

|

private |

◆ refinement_criteria_setup()

|

private |

Function to define the refinement criteria based on user input

◆ remake_zphys()

| void ERF::remake_zphys | ( | int | lev, |

| std::unique_ptr< amrex::MultiFab > & | temp_zphys_nd | ||

| ) |

◆ RemakeLevel()

|

override |

◆ restart()

| void ERF::restart | ( | ) |

◆ sample_lines()

| void ERF::sample_lines | ( | int | lev, |

| amrex::Real | time, | ||

| amrex::IntVect | cell, | ||

| amrex::MultiFab & | mf | ||

| ) |

Utility function for sampling data along a line along the z-dimension at the (x,y) indices specified and writes it to an output file.

- Parameters

-

lev Current level time Current time cell IntVect containing the x,y-dimension indices to sample along z mf MultiFab from which we sample the data

◆ sample_points()

| void ERF::sample_points | ( | int | lev, |

| amrex::Real | time, | ||

| amrex::IntVect | cell, | ||

| amrex::MultiFab & | mf | ||

| ) |

Utility function for sampling MultiFab data at a specified cell index.

- Parameters

-

lev Level for the associated MultiFab data time Current time cell IntVect containing the indexes for the cell where we want to sample mf MultiFab from which we wish to sample data

◆ SampleLine()

|

inlineprivate |

◆ SampleLineLog()

|

inlineprivate |

◆ SampleLineLogName()

|

inlineprivatenoexcept |

The filename of the ith samplelinelog file.

◆ SamplePoint()

|

inlineprivate |

◆ SamplePointLog()

|

inlineprivate |

◆ SamplePointLogName()

|

inlineprivatenoexcept |

The filename of the ith sampleptlog file.

◆ setPlotVariables()

|

private |

◆ setPlotVariables2D()

|

private |

◆ setRayleighRefFromSounding()

|

private |

Set Rayleigh mean profiles from input sounding.

Sets the Rayleigh Damping averaged quantities from an externally supplied input sounding data file.

- Parameters

-

[in] restarting Boolean parameter that indicates whether we are currently restarting from a checkpoint file.

◆ setRecordDataInfo()

|

inlineprivate |

◆ setRecordDerDataInfo()

|

inlineprivate |

◆ setRecordEnergyDataInfo()

|

inlineprivate |

◆ setRecordSampleLineInfo()

|

inlineprivate |

◆ setRecordSamplePointInfo()

|

inlineprivate |

◆ setSpongeRefFromSounding()

|

private |

Set sponge mean profiles from input sounding.

Sets the sponge damping averaged quantities from an externally supplied input sponge data file.

- Parameters

-

[in] restarting Boolean parameter that indicates whether we are currently restarting from a checkpoint file.

◆ setSubVolVariables()

|

private |

◆ solve_with_gmres()

| void ERF::solve_with_gmres | ( | int | lev, |

| const amrex::Box & | subdomain, | ||

| amrex::MultiFab & | rhs, | ||

| amrex::MultiFab & | p, | ||

| amrex::Array< amrex::MultiFab, AMREX_SPACEDIM > & | fluxes, | ||

| amrex::MultiFab & | ax_sub, | ||

| amrex::MultiFab & | ay_sub, | ||

| amrex::MultiFab & | az_sub, | ||

| amrex::MultiFab & | , | ||

| amrex::MultiFab & | znd_sub | ||

| ) |

Solve the Poisson equation using FFT-preconditioned GMRES

◆ sum_derived_quantities()

| void ERF::sum_derived_quantities | ( | amrex::Real | time | ) |

◆ sum_energy_quantities()

| void ERF::sum_energy_quantities | ( | amrex::Real | time | ) |

◆ sum_integrated_quantities()

| void ERF::sum_integrated_quantities | ( | amrex::Real | time | ) |

Computes the integrated quantities on the grid such as the total scalar and total mass quantities. Prints and writes to output file.

- Parameters

-

time Current time

◆ SurfaceDataInterpolation()

| void ERF::SurfaceDataInterpolation | ( | const int | nlevs, |

| const amrex::Real | time, | ||

| amrex::Vector< std::unique_ptr< amrex::MultiFab >> & | z_phys_nd, | ||

| bool | regrid_forces_file_read | ||

| ) |

◆ timeStep()

|

private |

Function that coordinates the evolution across levels – this calls Advance to do the actual advance at this level, then recursively calls itself at finer levels

- Parameters

-

[in] lev level of refinement (coarsest level is 0) [in] time start time for time advance [in] iteration time step counter

◆ turbPert_amplitude()

|

private |

◆ turbPert_update()

|

private |

◆ update_diffusive_arrays()

|

private |

◆ update_terrain_arrays()

| void ERF::update_terrain_arrays | ( | int | lev | ) |

◆ volWgtColumnSum()

| void ERF::volWgtColumnSum | ( | int | lev, |

| const amrex::MultiFab & | mf, | ||

| int | comp, | ||

| amrex::MultiFab & | mf_2d, | ||

| const amrex::MultiFab & | dJ | ||

| ) |

◆ volWgtSumMF()

| Real ERF::volWgtSumMF | ( | int | lev, |

| const amrex::MultiFab & | mf, | ||

| int | comp, | ||

| const amrex::MultiFab & | dJ, | ||

| const amrex::MultiFab & | mfx, | ||

| const amrex::MultiFab & | mfy, | ||

| bool | finemask, | ||

| bool | local = true |

||

| ) |

Utility function for computing a volume weighted sum of MultiFab data for a single component

- Parameters

-

lev Current level mf_to_be_summed : MultiFab on which we do the volume weighted sum dJ : volume weighting due to metric terms mfmx : map factor in x-direction at cell centers mfmy : map factor in y-direction at cell centers comp : Index of the component we want to sum finemask : If a finer level is available, determines whether we mask fine data local : Boolean sets whether or not to reduce the sum over the domain (false) or compute sums local to each MPI rank (true)

◆ WeatherDataInterpolation()

| void ERF::WeatherDataInterpolation | ( | const int | nlevs, |

| const amrex::Real | time, | ||

| amrex::Vector< std::unique_ptr< amrex::MultiFab >> & | z_phys_nd, | ||

| bool | regrid_forces_file_read | ||

| ) |

◆ Write2DPlotFile()

| void ERF::Write2DPlotFile | ( | int | which, |

| PlotFileType | plotfile_type, | ||

| amrex::Vector< std::string > | plot_var_names | ||

| ) |

◆ Write3DPlotFile()

| void ERF::Write3DPlotFile | ( | int | which, |

| PlotFileType | plotfile_type, | ||

| amrex::Vector< std::string > | plot_var_names | ||

| ) |

◆ write_1D_profiles()

| void ERF::write_1D_profiles | ( | amrex::Real | time | ) |

Writes 1-dimensional averaged quantities as profiles to output log files at the given time.

- Parameters

-

time Current time

◆ write_1D_profiles_stag()

| void ERF::write_1D_profiles_stag | ( | amrex::Real | time | ) |

Writes 1-dimensional averaged quantities as profiles to output log files at the given time.

Quantities are output at their native grid locations. Therefore, w and associated flux quantities <(•)'w'>, tau13, and tau23 (where '•' includes u, v, p, theta, ...) will be output at staggered heights (i.e., coincident with z faces) rather than cell-center heights to avoid performing additional averaging. Unstaggered (i.e., cell-centered) quantities are output alongside staggered quantities at the lower cell faces in the log file; these quantities will have a zero value at the big end, corresponding to k=Nz+1.

The structure of file should follow ERF_Write1DProfiles.cpp

- Parameters

-

time Current time

◆ writeBuildInfo()

|

static |

Referenced by main().

◆ WriteCheckpointFile()

| void ERF::WriteCheckpointFile | ( | ) | const |

ERF function for writing a checkpoint file.

◆ WriteGenericPlotfileHeaderWithTerrain()

| void ERF::WriteGenericPlotfileHeaderWithTerrain | ( | std::ostream & | HeaderFile, |

| int | nlevels, | ||

| const amrex::Vector< amrex::BoxArray > & | bArray, | ||

| const amrex::Vector< std::string > & | varnames, | ||

| const amrex::Vector< amrex::Geometry > & | my_geom, | ||

| amrex::Real | time, | ||

| const amrex::Vector< int > & | level_steps, | ||

| const amrex::Vector< amrex::IntVect > & | my_ref_ratio, | ||

| const std::string & | versionName, | ||

| const std::string & | levelPrefix, | ||

| const std::string & | mfPrefix | ||

| ) | const |

◆ writeJobInfo()

| void ERF::writeJobInfo | ( | const std::string & | dir | ) | const |

◆ WriteLinePlot()

| void ERF::WriteLinePlot | ( | const std::string & | filename, |

| amrex::Vector< std::array< amrex::Real, 2 >> & | points_xy | ||

| ) |

◆ WriteMultiLevelPlotfileWithTerrain()

| void ERF::WriteMultiLevelPlotfileWithTerrain | ( | const std::string & | plotfilename, |

| int | nlevels, | ||

| const amrex::Vector< const amrex::MultiFab * > & | mf, | ||

| const amrex::Vector< const amrex::MultiFab * > & | mf_nd, | ||

| const amrex::Vector< std::string > & | varnames, | ||

| const amrex::Vector< amrex::Geometry > & | my_geom, | ||

| amrex::Real | time, | ||

| const amrex::Vector< int > & | level_steps, | ||

| const amrex::Vector< amrex::IntVect > & | my_ref_ratio, | ||

| const std::string & | versionName = "HyperCLaw-V1.1", |

||

| const std::string & | levelPrefix = "Level_", |

||

| const std::string & | mfPrefix = "Cell", |

||

| const amrex::Vector< std::string > & | extra_dirs = amrex::Vector<std::string>() |

||

| ) | const |

◆ WriteMyEBSurface()

| void ERF::WriteMyEBSurface | ( | ) |

◆ writeNow()

| bool ERF::writeNow | ( | const amrex::Real | cur_time, |

| const int | nstep, | ||

| const int | plot_int, | ||

| const amrex::Real | plot_per, | ||

| const amrex::Real | dt_0, | ||

| amrex::Real & | last_file_time | ||

| ) |

◆ WriteSubvolume()

| void ERF::WriteSubvolume | ( | int | isub, |

| amrex::Vector< std::string > | subvol_var_names | ||

| ) |

◆ WriteVTKPolyline()

| void ERF::WriteVTKPolyline | ( | const std::string & | filename, |

| amrex::Vector< std::array< amrex::Real, 2 >> & | points_xy | ||

| ) |

Member Data Documentation

◆ advflux_reg

|

private |

Referenced by getAdvFluxReg().

◆ avg_xmom

|

private |

◆ avg_ymom

|

private |

◆ avg_zmom

|

private |

◆ ax

|

private |

◆ ax_src

|

private |

◆ ay

|

private |

◆ ay_src

|

private |

◆ az

|

private |

◆ az_src

|

private |

◆ ba1d

|

private |

◆ ba2d

|

private |

◆ base_state

|

private |

◆ base_state_new

|

private |

◆ bndry_output_planes_interval

|

staticprivate |